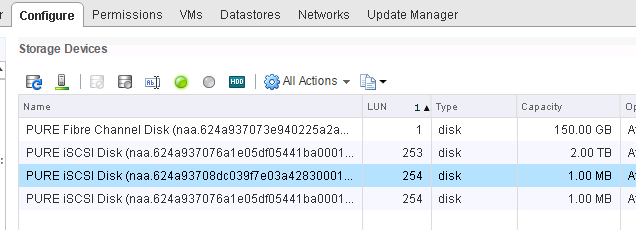

A customer pinged me the other day saying they could not see a volume on their ESXi host, which was running ESXi 6.5. All of the normal stuff checked out, but the volume was nowhere to be seen. What gives? Well, it turned out the LUN ID was over 255, and ESXi couldn't see it. Let me explain.

The TLDR is ESXi does not support LUN IDs above 255 for your average device.

UPDATE (8/15/2017): I have been meaning to update this post for a while. Here are the rules:

ESXi 6.5 does support LUN IDs higher than 255, but only if those addresses are configured using peripheral LUN addressing. If your array uses flat addressing (which is common for higher LUN IDs), it will not work.

ESXi 6.7 now supports flat LUN addressing, so this problem goes away entirely.

See this post for more information on ESXi 6.7 flat support.

*It's not actually aliens; it is perfectly normal SCSI, you silly man.

So first, the problem. I will get to a documentation discrepancy in a bit.

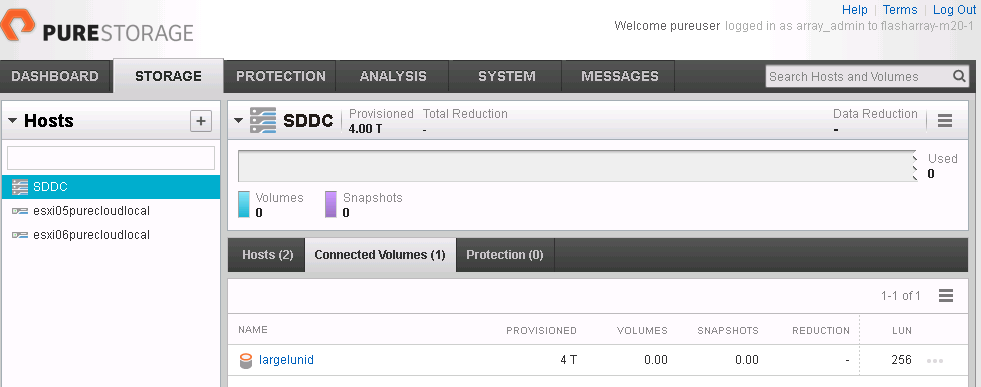

So let’s take the situation where I create a volume and attach it to a host as LUN ID 256.

If I rescan my host, my 4 TB volume with LUN ID 256 is nowhere to be seen.

So why? Well, we need to understand LUN addressing first.

LUN Addressing 101

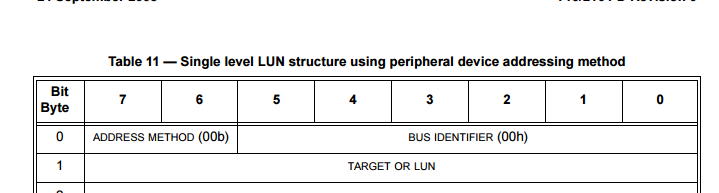

There are a few ways to address LUN IDs, and I won't go into all of them. The traditional and most commonly supported method is peripheral addressing, which uses one full byte for the LUN ID. In binary, the maximum is eight bits set to 1 (11111111), which in decimal is our friend 255.

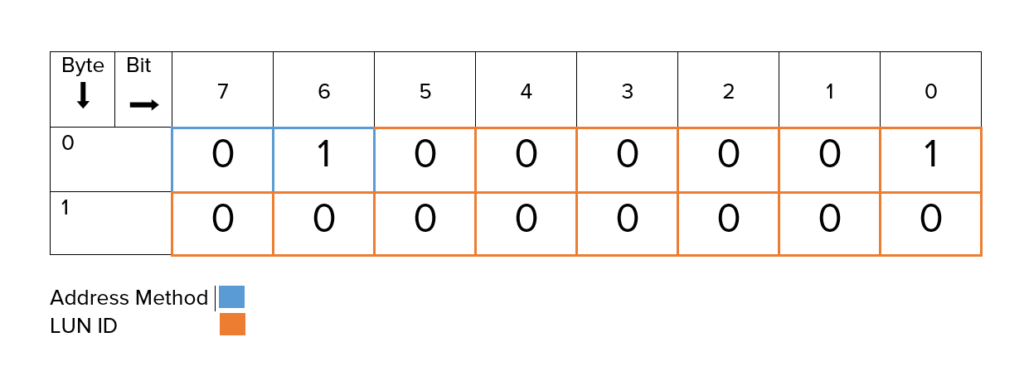

In peripheral addressing (with a bus identifier of 0), byte 0 is all zeros. Bits 0 through 5 of byte 0 are the bus identifier, and bits 6 and 7 of that byte are the address method. An address method of 00 (two bits) means peripheral addressing, and byte 1 holds the LUN ID.
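To make that layout concrete, here is a minimal Python sketch (not any real SCSI library) that builds the first two bytes of a peripheral-addressed LUN. The helper names `encode_peripheral` and `as_bits` are hypothetical, and the sketch assumes a bus identifier of 0 unless told otherwise.

```python
def encode_peripheral(lun: int, bus_id: int = 0) -> bytes:
    """Build the first two bytes of a peripheral-addressed LUN (hypothetical helper).

    Byte 0: bits 7-6 = address method 00 (peripheral), bits 5-0 = bus identifier.
    Byte 1: the LUN ID itself, so only 0-255 fit.
    """
    if not 0 <= lun <= 0xFF:
        raise ValueError(f"LUN {lun} does not fit in one byte (peripheral max is 255)")
    if not 0 <= bus_id <= 0x3F:
        raise ValueError("bus identifier must fit in 6 bits")
    byte0 = (0b00 << 6) | bus_id  # address method 00 = peripheral
    return bytes([byte0, lun])


def as_bits(two_bytes: bytes) -> str:
    """Render bytes as space-separated 8-bit binary groups."""
    return " ".join(f"{b:08b}" for b in two_bytes)


print(as_bits(encode_peripheral(255)))  # 00000000 11111111
```

Asking it for LUN 256 raises a `ValueError`, since 256 simply does not fit in byte 1.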

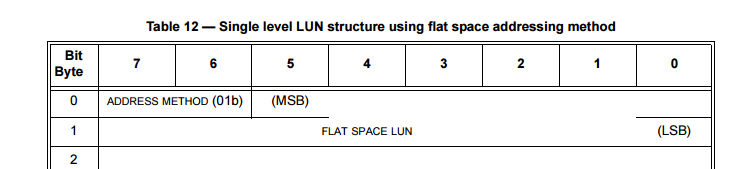

So peripheral addressing works for most situations. But if I want to use LUN ID 256 or higher (meaning I want to present more than 256 volumes to a given host from a given array), this will not work. This is where flat addressing comes in.

Flat addressing gives an additional 6 bits for the LUN ID, 14 bits in total. So we can now have a LUN ID of up to 11111111111111 (fourteen 1s), which in decimal is 16383.

Flat addressing is indicated by changing bit 6 in byte 0 from 0 to 1.
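Extending the same idea to flat addressing: the address method becomes 01, and the LUN ID spreads across the low 6 bits of byte 0 plus all of byte 1. This is a sketch for illustration; `encode_flat` is a hypothetical name, not an API from any vendor.

```python
FLAT_METHOD = 0b01  # address method 01 = flat space addressing


def encode_flat(lun: int) -> bytes:
    """Build the first two bytes of a flat-addressed LUN (hypothetical helper).

    Byte 0: bits 7-6 = 01, bits 5-0 = upper 6 bits of the LUN ID.
    Byte 1: lower 8 bits of the LUN ID.
    That is 14 bits in total, so LUN IDs 0-16383.
    """
    if not 0 <= lun <= 0x3FFF:
        raise ValueError(f"LUN {lun} does not fit in 14 bits (flat max is 16383)")
    byte0 = (FLAT_METHOD << 6) | (lun >> 8)
    byte1 = lun & 0xFF
    return bytes([byte0, byte1])


print((1 << 8) - 1)   # peripheral max: 255
print((1 << 14) - 1)  # flat max: 16383
print(" ".join(f"{b:08b}" for b in encode_flat(16383)))  # 01111111 11111111
```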

In either addressing method, the rest of the 8-byte (64-bit) LUN field is generally all zeroes. The first two bytes tell the tale.

Examples

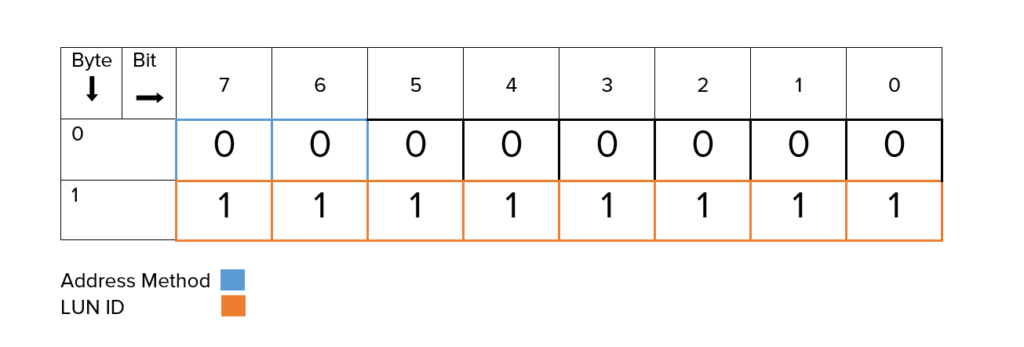

So let’s take a look at this. If I am using peripheral addressing and have LUN 255, my first two bytes (16 bits) look like this:

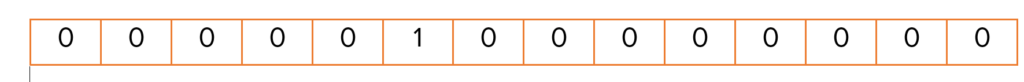

So my host sees this as 255. Great. Now let's use 256 as our LUN ID. This kicks in flat addressing and changes bit 6 in byte 0 to 1. In this method, the remaining 14 bits describe the LUN number, providing 6 more bits than peripheral did. For LUN ID 256, the LUN ID in binary is 100000000, so our first two bytes (byte 0, then byte 1) look like this:

01000001 00000000

A host that understands flat addressing will notice the bit change indicating flat addressing and will read this as 256.
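For completeness, here is a sketch of the full 8-byte (64-bit) LUN field for LUN 256 under flat addressing, assuming (as described above) that everything after the first two bytes is zero. `flat_lun_field` is a hypothetical helper written for this illustration.

```python
def flat_lun_field(lun: int) -> bytes:
    """Build a full 8-byte (64-bit) LUN field for a flat-addressed LUN ID.

    Only the first two bytes carry information; the remaining six are zero.
    Hypothetical helper for illustration.
    """
    if not 0 <= lun <= 0x3FFF:
        raise ValueError("flat addressing holds 14 bits (0-16383)")
    # Byte 0: method 01 in bits 7-6, upper 6 LUN bits in bits 5-0.
    # Byte 1: lower 8 LUN bits.
    first_two = bytes([(0b01 << 6) | (lun >> 8), lun & 0xFF])
    return first_two + bytes(6)  # pad with six zero bytes


field = flat_lun_field(256)
print(field.hex())                              # 4100000000000000
print(" ".join(f"{b:08b}" for b in field[:2]))  # 01000001 00000000
```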

Some hosts (at least certain flavors of Linux, as far as I can tell) will misinterpret this. They are used to byte 0 being all zeroes and don't understand the meaning of bit 6 changing from 0 to 1 (which indicates the switch from peripheral to flat addressing). So they actually report this volume as LUN ID 16640. Why? Because they don't read (or rather, interpret) it correctly.

So for LUN 256 on the array, the host receives this in the REPORT LUNS response:

01000001 00000000

And it is supposed to separate out these bits (logically) like so:

1. First, the address method (bits 6 and 7 of byte 0): 01, meaning flat addressing

2. Then the actual LUN ID (bits 0-5 of byte 0 plus all of byte 1): 000001 00000000, which is 256
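That logical split can be written as a small decoder. This is a sketch under the assumption that only the peripheral (00) and flat (01) address methods matter here: the other two methods raise an error, and the peripheral branch ignores the bus identifier. `decode_lun` is a hypothetical name.

```python
def decode_lun(two_bytes: bytes) -> tuple:
    """Split the first two LUN bytes into (address_method, lun_id).

    The address method is bits 7-6 of byte 0.
    Peripheral (00): the LUN ID is byte 1 (bus identifier in byte 0 is ignored).
    Flat (01): the LUN ID is bits 5-0 of byte 0 followed by all of byte 1.
    """
    byte0, byte1 = two_bytes[0], two_bytes[1]
    method = byte0 >> 6
    if method == 0b00:
        return method, byte1
    if method == 0b01:
        return method, ((byte0 & 0x3F) << 8) | byte1
    raise ValueError(f"address method {method:02b} not handled in this sketch")


print(decode_lun(bytes([0b01000001, 0b00000000])))  # (1, 256)
print(decode_lun(bytes([0b00000000, 0b11111111])))  # (0, 255)
```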

But instead it reads it like this:

0100000100000000

As one big number, which, converted from binary to decimal, is 16640 (16384 + 256).
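The misreading is easy to reproduce: treat the two bytes as a single big-endian 16-bit integer and ignore the address-method bits. `naive_lun` is a hypothetical helper that illustrates the behavior described above; it is not Linux's actual code.

```python
def naive_lun(two_bytes: bytes) -> int:
    """Read the first two LUN bytes as one big-endian 16-bit number,
    ignoring the address-method bits (the misreading described above)."""
    return int.from_bytes(two_bytes[:2], "big")


lun_256_flat = bytes([0b01000001, 0b00000000])
print(naive_lun(lun_256_flat))  # 16640
print(2**14 + 2**8)             # 16640: the method bit plus the real LUN
```

The stray 16384 is just bit 6 of byte 0 (the flat-addressing flag) landing in the 2^14 position once the two bytes are glued together.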

This is why a device with LUN ID 256 (as seen on the array) can show up like this in Linux:

    myhost:~ # lsscsi | grep "4:0:0"
    [4:0:0:255]    disk    PURE     FlashArray       490   /dev/sda
    [4:0:0:16640]  disk    PURE     FlashArray       490
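If you are trying to match a number like 16640 in Linux back to the LUN ID on the array, you can undo the misreading by checking the top two bits and masking them off. This is a sketch that assumes the reported value is exactly the first two LUN bytes read as one number (as in the 16640 example) and that the bus identifier is 0. `array_lun_from_linux` is a hypothetical helper.

```python
def array_lun_from_linux(reported: int) -> int:
    """Map a LUN number as shown by lsscsi back to the array's LUN ID.

    Assumes `reported` is the first two LUN bytes read as one 16-bit number.
    Bits 15-14 are the address method: 01 (flat) keeps the low 14 bits,
    00 (peripheral) keeps the low byte (bus identifier assumed to be 0).
    """
    if not 0 <= reported <= 0xFFFF:
        raise ValueError("expected a value from the first two LUN bytes (0-65535)")
    method = reported >> 14
    if method == 0b01:
        return reported & 0x3FFF
    if method == 0b00:
        return reported & 0xFF
    raise ValueError("address method not handled in this sketch")


for reported in (255, 16640):
    print(reported, "->", array_lun_from_linux(reported))
# 255 -> 255
# 16640 -> 256
```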

LUN Addressing and ESXi

So first, a few things. The vSphere configuration maximums guides are your friend, but in this case they might confuse you a bit.

https://www.vmware.com/pdf/vsphere5/r55/vsphere-55-configuration-maximums.pdf

https://www.vmware.com/pdf/vsphere6/r60/vsphere-60-configuration-maximums.pdf

https://www.vmware.com/pdf/vsphere6/r65/vsphere-65-configuration-maximums.pdf

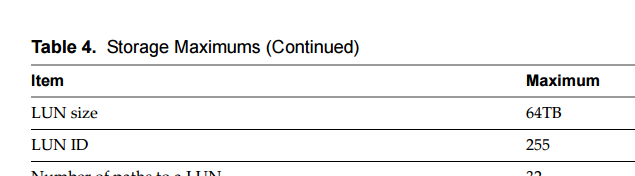

If we look at the LUN ID limit for vSphere 5.5, we see 255.

So this seems to indicate peripheral addressing only. Okay, cool. I will keep my LUN IDs under 256.

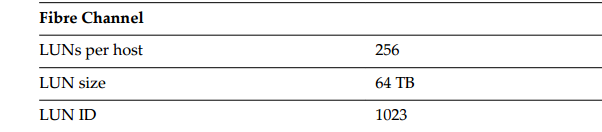

But in 6.0 it goes up to 1023:

Interesting! They must have ventured into flat addressing. 1,023 is kind of a weird limit if you look back at what I spoke about, but maybe that’s just what they tested for.

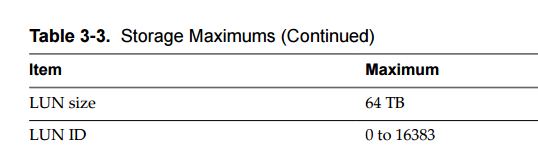

And in 6.5 it is 16383! Now that is a familiar number! So why can't we see my device in ESXi 6.5?

Well, for one thing, I asked VMware: they do not currently support flat addressing. There ya go. So for standard devices, the LUN ID must be 255 or lower.

Even though ESXi 6.5 expanded support to 512 devices per host, you need more than one target (array) to get there.
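The arithmetic behind needing more than one target is simple: with peripheral addressing each target offers LUN IDs 0-255, i.e. 256 volumes. A quick sketch; the constants and `targets_needed` are illustrative names, not VMware settings.

```python
import math

PERIPHERAL_LUNS_PER_TARGET = 256  # LUN IDs 0-255 per target
DEVICES_PER_HOST_65 = 512         # ESXi 6.5 per-host device maximum cited above


def targets_needed(devices: int, luns_per_target: int = PERIPHERAL_LUNS_PER_TARGET) -> int:
    """Minimum number of targets (arrays) needed to present `devices` volumes
    when each target can only use peripheral LUN IDs 0-255."""
    return math.ceil(devices / luns_per_target)


print(targets_needed(DEVICES_PER_HOST_65))  # 2
```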

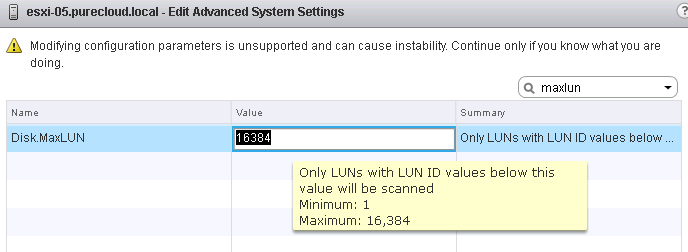

So what about the DiskMaxLun setting?

And of course the maximum guides?

Well, note that the number went up in 6.0, and then again in 6.5. What also arrived in 6.0 and improved in 6.5? VVols!

This LUN ID increase is for VVols, which use a sub-LUN addressing model that is different from what I described above. So this is a bit misleading in the documents; it should probably be a separate line item.

So, in short, keep your LUN IDs less than 256.
