So in the previous post, I wrote about deploying a Tanzu Kubernetes Grid management cluster.

VMware defines this as:

A management cluster is the first element that you deploy when you create a Tanzu Kubernetes Grid instance. The management cluster is a Kubernetes cluster that performs the role of the primary management and operational center for the Tanzu Kubernetes Grid instance. This is where Cluster API runs to create the Tanzu Kubernetes clusters in which your application workloads run, and where you configure the shared and in-cluster services that the clusters use.

https://docs.vmware.com/en/VMware-Tanzu-Kubernetes-Grid/1.2/vmware-tanzu-kubernetes-grid-12/GUID-tkg-concepts.html

In other words, this is the cluster that manages them all. This is your entry point to the rest. You can deploy applications in it, but that is not usually the right move. Generally you want to deploy clusters that are specifically devoted to running workloads.

- Tanzu Kubernetes 1.2 Part 1: Deploying a Tanzu Kubernetes Management Cluster

- Tanzu Kubernetes 1.2 Part 2: Deploying a Tanzu Kubernetes Guest Cluster

- Tanzu Kubernetes 1.2 Part 3: Authenticating Tanzu Kubernetes Guest Clusters with Kubectl

These workload clusters are called Tanzu Kubernetes clusters, which VMware defines as:

After you have deployed a management cluster, you use the Tanzu Kubernetes Grid CLI to deploy CNCF conformant Kubernetes clusters and manage their lifecycle. These clusters, known as Tanzu Kubernetes clusters, are the clusters that handle your application workloads, that you manage through the management cluster. Tanzu Kubernetes clusters can run different versions of Kubernetes, depending on the needs of the applications they run. You can manage the entire lifecycle of Tanzu Kubernetes clusters by using the Tanzu Kubernetes Grid CLI. Tanzu Kubernetes clusters implement Antrea for pod-to-pod networking by default.

https://docs.vmware.com/en/VMware-Tanzu-Kubernetes-Grid/1.2/vmware-tanzu-kubernetes-grid-12/GUID-tkg-concepts.html

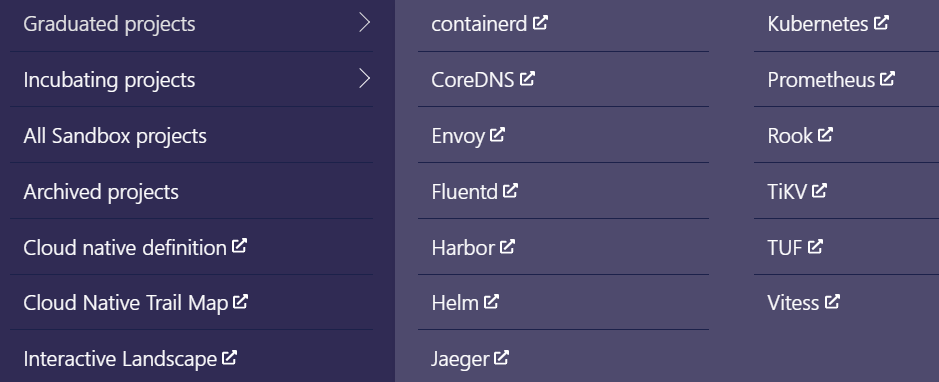

Two terms in there are worth defining. The first is CNCF, the Cloud Native Computing Foundation. The CNCF does quite a bit, mostly focused on building and managing open source efforts concerning cloud native infrastructure. These efforts are organized into projects at three levels: sandbox, incubating, and graduated. The level indicates the maturity of the project and what kind of customers are, and should be, adopting those tools in production. This adoption concept is discussed in detail in a book called Crossing the Chasm.

Looking at the current graduated projects, you will see a lot of names that will become familiar as you dig into Kubernetes, including Kubernetes itself.

The other word of note there is Antrea. This is an open source Kubernetes networking and security project started by VMware. It controls and manages the internal networking and communication within and between the nodes of a Tanzu Kubernetes cluster.

Namespaces

Within Kubernetes, there is a concept of namespaces. Namespaces are, of course, a way to guard against name conflicts, but they are also a way to limit resource allocations. When using vSphere with Tanzu or VCF with Tanzu, this becomes more sophisticated in how it interacts with vSphere resources, but that’s a topic for another time.

For now it is about segregating resources and setting limits. The official Kubernetes documentation straight up encourages you to not bother with namespaces unless you have a reason to. So um yeah you don’t need to tell me twice to keep things simple. But in the spirit of scientific discovery, let’s look into it a bit more.

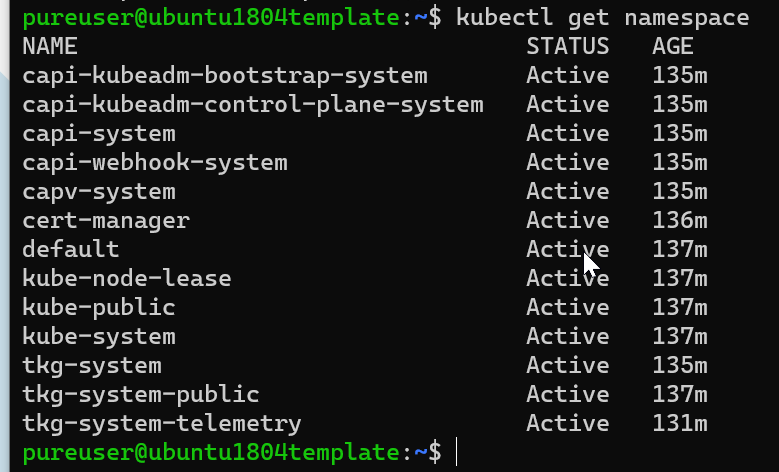

After deploying the TKG management cluster, and before deploying any Tanzu Kubernetes clusters, there will already be a few namespaces:

kubectl get namespace

The kube- ones, for instance, are built-in Kubernetes namespaces, and the tkg ones are created automatically as part of the TKG build. The “default” namespace is where new resources you deploy will go unless you specify a different one.

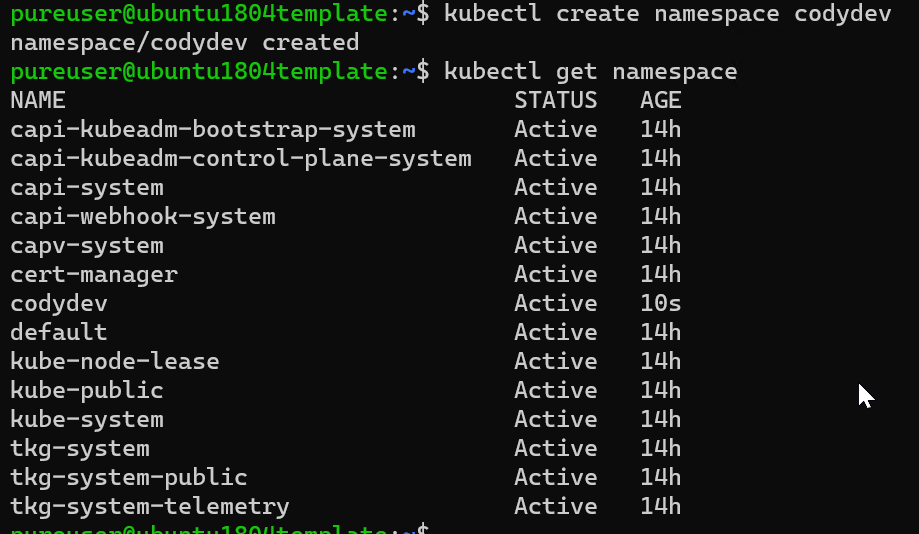

We can create a new one:

You can use a YAML file, but it is simpler just to use the following command:

kubectl create namespace <desired name>

https://docs.vmware.com/en/VMware-Tanzu-Kubernetes-Grid/1.2/vmware-tanzu-kubernetes-grid-12/GUID-mgmt-clusters-create-namespaces.html
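For comparison, the YAML route might look something like the sketch below. The file name namespace-codydev.yaml is an arbitrary choice for illustration, and codydev is the namespace used later in this post. The apply step assumes kubectl is currently pointed at the management cluster.

```shell
# Write a minimal Namespace manifest (the file name is an arbitrary choice).
cat > namespace-codydev.yaml <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: codydev
EOF

# Apply it only if kubectl is available; this assumes the current
# context is the management cluster.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f namespace-codydev.yaml
else
  echo "kubectl not found; manifest written to namespace-codydev.yaml"
fi
```

The one-line kubectl create namespace command gets to the same place; the file is mainly useful if you want the namespace definition kept in version control.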

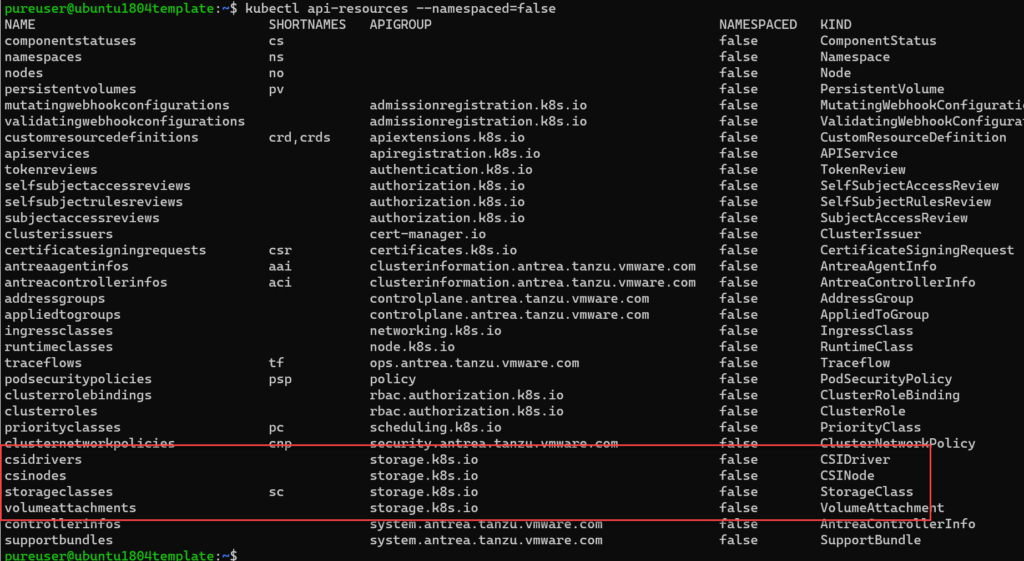

Most things are namespaced, but some are not:
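One way to check which resource types fall on which side is kubectl api-resources with its --namespaced flag. The sketch below wraps it in a small helper; the function name list_resources_by_scope is made up for illustration, and it assumes kubectl is pointed at a reachable cluster.

```shell
# Print resource type names by scope. Pass "true" for namespaced
# types or "false" for cluster-scoped ones.
# Requires kubectl and a reachable cluster.
list_resources_by_scope() {
  kubectl api-resources --namespaced="$1" -o name
}

# Usage, once kubectl is pointed at a cluster:
#   list_resources_by_scope false   # cluster-scoped types
#   list_resources_by_scope true    # namespaced types
```

In the cluster-scoped list you should find types such as storageclasses and persistentvolumes, as well as namespaces themselves.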

The ones that are not include storage-related objects within CSI, which will be important in a following post. So let’s deploy a new Tanzu Kubernetes cluster into the default namespace first.

Deploy New Cluster under Default Namespace

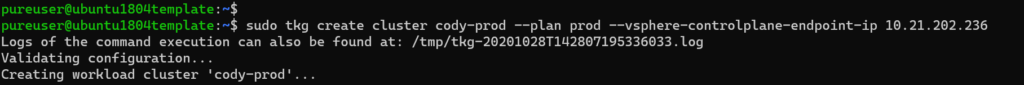

To deploy a new cluster, the command is:

sudo tkg create cluster cody-prod --plan prod --vsphere-controlplane-endpoint-ip 10.21.202.236

Replace cody-prod with your desired cluster name and specify a usable IP that is not in the DHCP range.

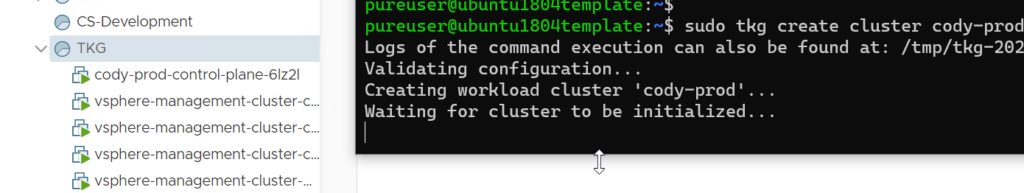

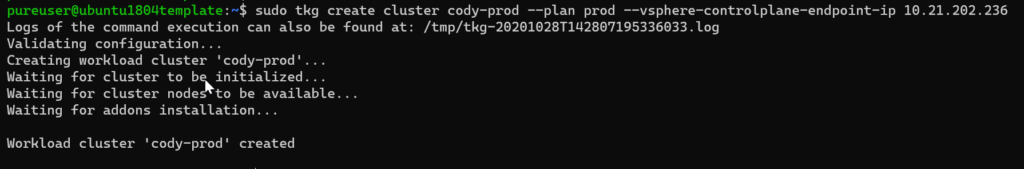

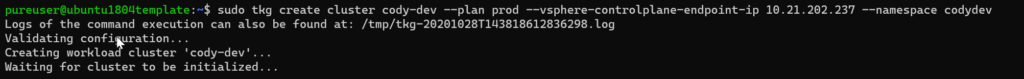

It doesn’t take long to deploy the resources and only a minute or so for the whole set:

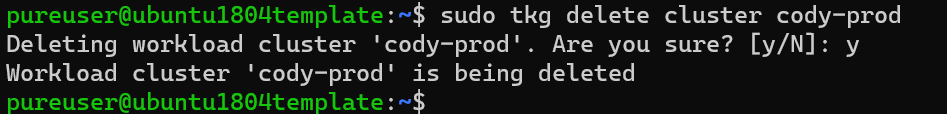

If you forget sudo, the process will fail towards the end. That is probably fixable, but it is just as easy to delete the cluster and then retry with sudo.
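A rough sketch of that delete-and-retry path is below. It assumes the tkg delete cluster subcommand from the TKG CLI, reuses the cluster name and IP from the command above, and assumes the cluster name is the first column of the tkg get clusters output. Treat it as a starting point rather than a tested procedure.

```shell
CLUSTER_NAME="cody-prod"
ENDPOINT_IP="10.21.202.236"

if command -v tkg >/dev/null 2>&1; then
  # Delete the half-built cluster; tkg prompts for confirmation.
  sudo tkg delete cluster "$CLUSTER_NAME"

  # Deletion runs in the background, so wait until the name drops out
  # of the cluster list (assumes NAME is the first column of the output).
  while sudo tkg get clusters |
    awk -v n="$CLUSTER_NAME" '$1 == n {found=1} END {exit !found}'; do
    sleep 15
  done

  # Retry the original create command, this time with sudo.
  sudo tkg create cluster "$CLUSTER_NAME" --plan prod \
    --vsphere-controlplane-endpoint-ip "$ENDPOINT_IP"
else
  echo "tkg CLI not found on PATH; nothing to do"
fi
```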

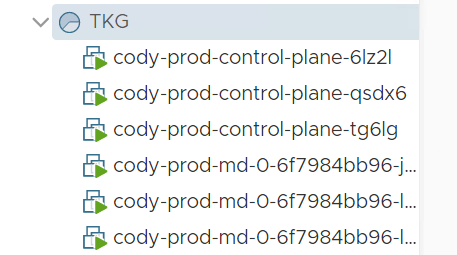

After a few minutes we see my six VMs: three control plane nodes and three worker nodes.

Voila:

Deploy New Cluster under Specific Namespace

Okay let’s repeat, but choose the codydev namespace.

New name. New IP. Add in the namespace parameter:

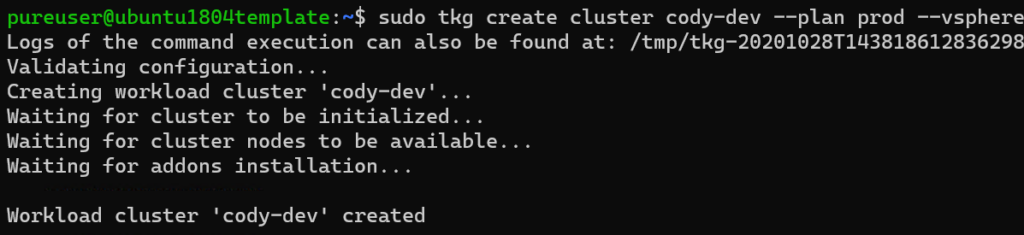

sudo tkg create cluster cody-dev --plan prod --vsphere-controlplane-endpoint-ip 10.21.202.237 --namespace codydev

And after a few minutes:

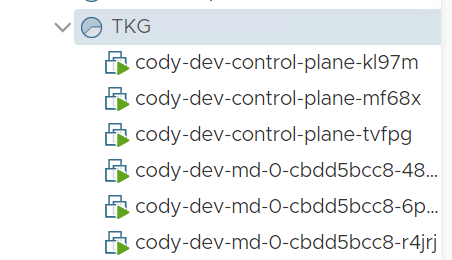

The nodes are all there:

Examine the Clusters

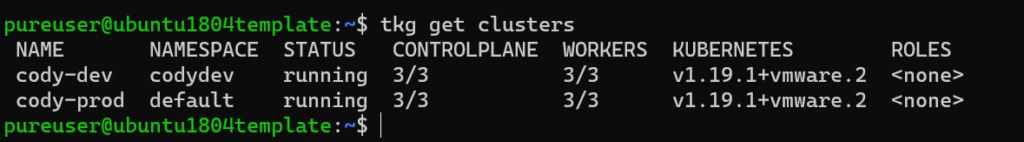

We can quickly view both of our clusters with the tkg get clusters command:

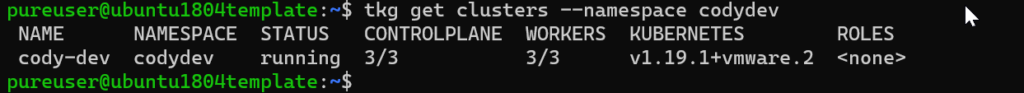

Or query just the one namespace:
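As a sketch, both queries can be wrapped in one small helper. The function name show_clusters is made up for illustration. The --namespace flag is the same one used with tkg create cluster earlier, and it is assumed here that tkg get clusters accepts it in the same way.

```shell
# List Tanzu Kubernetes clusters known to the management cluster.
# With no argument, list everything; with one argument, list only
# the clusters in that namespace. Requires the tkg CLI.
show_clusters() {
  if [ -n "$1" ]; then
    tkg get clusters --namespace "$1"
  else
    tkg get clusters
  fi
}

# Usage:
#   show_clusters            # both cody-prod and cody-dev
#   show_clusters codydev    # only cody-dev
```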

Great. In the next post let’s start digging into the storage side.
