Hey there! This week we announced the upcoming release of our latest operating environment for the FlashArray: Purity 6.0. There are quite a few new features, which I will detail in subsequent posts, but for now I want to focus on one topic: replication. We have had array-based replication (in many forms) for years, and in Purity 6.0 we are introducing a new offering called ActiveDR.

At a high level, ActiveDR is a near-zero RPO replication solution. When data is written, we send it to the second array as fast as we can; there is no waiting for a set interval. This is not a fundamentally new concept. Asynchronous replication has been around for a long time, and in fact we already support a form of it. What is DIFFERENT about ActiveDR is how much thought went into the design to keep it simple while taking advantage of how the FlashArray is built. A LOT of thought: lessons learned from our own replication solutions and features, and of course lessons from history about what people have traditionally found painful with asynchronous replication. Importantly, ActiveDR isn't just about replicating your new writes, but also your snapshots, protection schedules, volume configurations, and more. It protects your protection! More on that in Part II.

This will be a three-part series:

- Part I: Replication Fundamentals

- Part II: FlashArray Replication Options

- Part III: VMware Replication Integration

In this post, I will dig into what the FlashArray offers from a replication perspective.

In the previous post, I wrote about the different forms of replication and some glossary items:

- RPO vs RTO (data RTO, application RTO)

- Active/Active and Active/Passive

- Asynchronous and Synchronous

- Continuous and Periodic

Wonderful. We now know all of the things.

But what about the Pure Storage FlashArray? The FlashArray offers three types of replication:

- Protection Groups

- ActiveCluster

- ActiveDR

Yes, we might one day give protection groups a better name, but for now it is what it is.

Protection Groups

In Purity 4.0, the FlashArray introduced protection groups, which assign policies of, well, protection to a volume or set of volumes. These policies include:

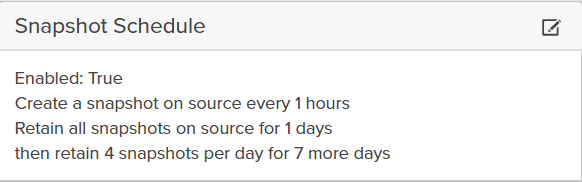

A local snapshot schedule (how frequently to take snapshots, how long to keep them, and a longer-term retention policy).

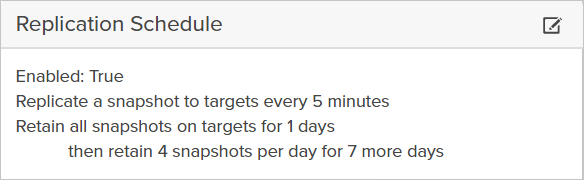

A remote replication schedule (how frequently to replicate, how long to keep the replicated snapshots on the target, and a longer-term retention policy).

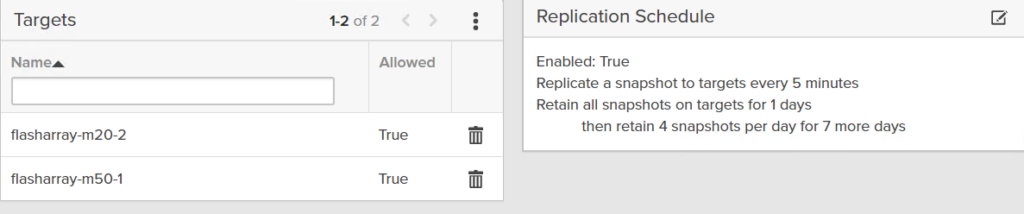

Protection groups work by having the user define one or more targets in the protection group (I will talk about what these targets can be in a moment).

In the case above, all volumes in this protection group are snapshotted every 5 minutes. Those snapshots are then sent as a group to the target; this group is called a protection group snapshot (a collection of snapshots created at the same time). Protection group snapshots are write-consistent across all of the volume snapshots they contain.
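To make the snapshot policy concrete, here is a small sketch that counts how many snapshots a schedule keeps at steady state. It assumes a two-tier retention rule (keep every snapshot for a while, then keep a few per day for a longer period); the `SnapshotPolicy` class and its field names are hypothetical, and only the 5-minute interval comes from the example above.

```python
from dataclasses import dataclass


@dataclass
class SnapshotPolicy:
    """Hypothetical model of a protection group snapshot schedule.

    Assumed retention rule: keep every snapshot for `keep_all_days`,
    then keep `per_day` snapshots per day for `extra_days` more days.
    """
    interval_minutes: int
    keep_all_days: int
    per_day: int
    extra_days: int

    def snapshots_per_day(self) -> int:
        # Number of snapshots the schedule creates in 24 hours.
        return (24 * 60) // self.interval_minutes

    def retained_snapshots(self) -> int:
        # Every snapshot in the short-term window, plus the thinned-out
        # long-term copies kept after that window expires.
        short_term = self.snapshots_per_day() * self.keep_all_days
        long_term = self.per_day * self.extra_days
        return short_term + long_term


# 5-minute interval from the text; the retention numbers are hypothetical.
policy = SnapshotPolicy(interval_minutes=5, keep_all_days=1, per_day=4, extra_days=7)
print(policy.snapshots_per_day())   # 288
print(policy.retained_snapshots())  # 316
```

A short interval inflates the short-term count quickly, which is why the longer-term tier keeps only a few snapshots per day.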

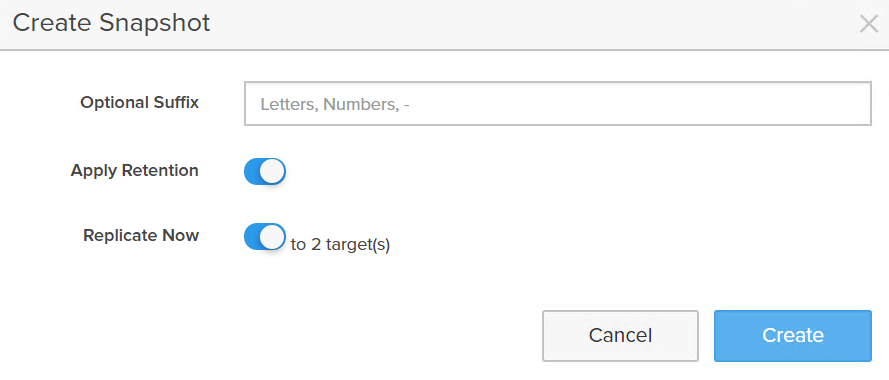

You can also replicate on demand. When you do, you can either apply the retention configured in the policy or keep the snapshot until you explicitly delete it.

Protection Group Targets

So what can those targets be? A protection group target can be any of the following:

- FlashArrays. You can add one or more FlashArrays to a protection group so that the volumes in the group are protected to one or more FlashArrays simultaneously.

- Cloud Block Store. CBS is the FlashArray software running in AWS. You can replicate directly to a CBS instance in AWS (and later this year CBS will be available in Azure too) and then actively use that data in the public cloud by presenting it to compute instances over iSCSI.

- NFS target. You can provision an NFS share somewhere else (doesn’t have to be on Pure at all) and the protection group will send the snapshots and store them on that NFS target. It uses the same policies as FlashArray-based protection groups (replication interval, retention).

- Amazon S3, Azure Blob, Google Cloud Storage. If you want to store copies of your data in the public cloud you can do that as well. We can send and store FlashArray protection group snapshots to three of the popular cloud object stores.

FlashArray to FlashArray (or FlashArray to CBS) replication is the most efficient option because all deduplication is preserved. For non-FlashArray targets, only compression is preserved.

The caveat of FlashArray to FlashArray is that you need two datacenters, each with a FlashArray. That is where the other options come in. The non-FlashArray options let you use the public cloud as your data bunker or, in the case of CBS, as an active target. Once the data is sent to cloud storage (or NFS), it can then be rehydrated back to any FlashArray or Cloud Block Store instance. So even if you lose the original FlashArray, the data can be restored from the cloud.

One benefit of sending data to AWS S3 is that you can keep it in S3 and, when you actually want to use it, spin up a Cloud Block Store instance, hydrate the snapshots to CBS, and use them there. That said, you can also replicate directly from a FlashArray to CBS.

So, should I send to S3 or directly to CBS? The benefit of sending to CBS is that the data is available immediately, which makes the dev/test use case better. For instance, you can send some databases to AWS and bring up and refresh copies instantly. Or, in a Kubernetes environment, you can replicate the Persistent Volumes and use PSO in the public cloud. However, running CBS carries an AWS infrastructure cost, and maybe you don't always need it there. Sending to S3 and spinning up CBS only as needed would be cheaper, but recovery takes longer because the data must first be hydrated from S3 into CBS. So the RTO of FlashArray to S3 to CBS is much higher than that of FlashArray directly to CBS. Furthermore, replicating between a FlashArray and CBS requires either a VPN connection or AWS Direct Connect, while sending to S3 can be done over the public internet. So it depends on your use case; there is a lot of flexibility.
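Most of that RTO gap is hydration time. A back-of-the-envelope helper like the one below (hypothetical, not a Pure sizing tool) shows how quickly it adds up. It assumes decimal units and a steady link, and it ignores data reduction, protocol overhead, and parallel streams, all of which change the real number.

```python
def transfer_hours(data_tb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Rough time to move `data_tb` terabytes over a `link_gbps` link.

    Assumes decimal units (1 TB = 10**12 bytes, 1 Gbps = 10**9 bits/s) and a
    sustained throughput of `link_gbps * efficiency`, with 0 < efficiency <= 1.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_tb * 10**12 * 8
    seconds = bits / (link_gbps * 10**9 * efficiency)
    return seconds / 3600


# Hypothetical example: hydrating 10 TB from S3 into CBS.
print(round(transfer_hours(10, 10), 2))       # 2.22 hours at a full 10 Gbps
print(round(transfer_hours(10, 10, 0.5), 2))  # 4.44 hours at half that rate
```

With direct FlashArray to CBS replication, that time was already spent before the outage, which is why the data is available immediately.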

So what type of replication is this?

- Asynchronous. Writes are only acknowledged on the source. They are sent to the target some time later.

- Periodic. On a set schedule, it creates a local snapshot and sends it to one or more targets.

- Active/Passive. The data exists on the remote targets as snapshots, so by definition the data on the target is not active. You can copy the snapshots to new volumes to use them.
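To see what "periodic" means for RPO, here is a toy model (not Purity code) of which writes would be missing on the target after a failure. It assumes snapshots start at time 0, fire every `interval`, and take a fixed `transfer_time` to land on the target; the function name and parameters are hypothetical.

```python
import math


def unprotected_writes(write_times, interval, transfer_time, failure_time):
    """Writes that would be missing on the target if the source fails.

    Toy model of periodic replication: a snapshot is taken at
    t = 0, interval, 2*interval, ... and becomes usable on the target
    `transfer_time` after it is taken. A write is protected only if it
    happened at or before the newest snapshot that finished replicating
    before the failure. All times share one unit (minutes, say).
    """
    if interval <= 0:
        raise ValueError("interval must be positive")
    last_safe = -math.inf  # no snapshot has reached the target yet
    t = 0.0
    while t + transfer_time <= failure_time:
        last_safe = t
        t += interval
    return [w for w in write_times if last_safe < w <= failure_time]


writes = [2, 7, 11, 12.5]
print(unprotected_writes(writes, interval=5, transfer_time=1, failure_time=13))    # [11, 12.5]
print(unprotected_writes(writes, interval=5, transfer_time=1, failure_time=10.5))  # [7]
```

In the second case the snapshot taken at minute 10 is still in flight, so the write at minute 7 is lost even though a snapshot containing it already exists on the source. Under these assumptions the worst-case RPO is roughly the interval plus the transfer time.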

When should I use it?

If you are running Purity prior to 6.0, this is the async option for you: ActiveDR requires Purity 6.0 or later, which is available only for FlashArray//m, FlashArray//x and FlashArray//C. Protection groups are also the right fit when:

- You have limited bandwidth and want to control when replication occurs. For example, send a copy every night at 4 AM, when WAN usage is low.

- You need to replicate a dataset to more than one array at a time. Only protection groups offer this (today).

- You want to replicate to non-Pure Storage targets. Object and NFS target support is available only for protection groups.

- Your WAN bandwidth is very limited. This is the most WAN-efficient method, because it preserves the most data reduction over the wire.

ActiveDR

Introduced in Purity 6.0, ActiveDR is the next evolution of our asynchronous replication. I will write a deeper-dive blog post on ActiveDR soon, so stay tuned for more details. At a high level, ActiveDR offers near-zero RPO (typically measured in seconds). When a write is sent to a FlashArray volume protected by ActiveDR, the write is forwarded to the target as quickly as possible. It is NOT synchronous: once the write lands on the first array, it is acknowledged to the host before it is sent to the second array.
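The gap between acknowledging a write and replicating it can be sketched as a toy model. This is not how Purity implements ActiveDR; it only encodes what the paragraph above states (acknowledge on the source first, then forward as quickly as possible). The class and method names are hypothetical, and the model forwards writes in order, an assumption that keeps the target equal to some earlier state of the source.

```python
from collections import deque


class AsyncReplicatedVolume:
    """Toy model of continuous asynchronous replication (not Purity code).

    A write is acknowledged as soon as it lands on the source, then queued
    and forwarded to the target as bandwidth allows. The queue length is the
    current RPO exposure, measured in writes.
    """

    def __init__(self):
        self.source = {}        # block address -> data on the first array
        self.target = {}        # block address -> data on the second array
        self.pending = deque()  # acknowledged but not yet replicated

    def write(self, block, data):
        self.source[block] = data
        self.pending.append((block, data))
        return "ack"  # the host is acknowledged before any replication happens

    def replicate(self, max_writes):
        # Forward up to `max_writes` queued writes, oldest first.
        sent = 0
        while self.pending and sent < max_writes:
            block, data = self.pending.popleft()
            self.target[block] = data
            sent += 1
        return sent

    def lag(self):
        return len(self.pending)


vol = AsyncReplicatedVolume()
vol.write(0, "a")
vol.write(1, "b")
vol.write(0, "c")
print(vol.lag())                 # 3: all acknowledged, none replicated yet
vol.replicate(max_writes=2)
print(vol.target)                # {0: 'a', 1: 'b'}
vol.replicate(max_writes=10)
print(vol.target == vol.source)  # True
```

How fast `replicate` drains the queue stands in for the available WAN bandwidth; if the source failed while `lag()` was non-zero, those writes would be the data lost.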

ActiveDR is also not just about protecting your data: it protects your protection, and it protects your configurations. In short, you create a pod on one array, then another pod on a second array. You then link the two pods, and everything in the source pod is replicated to the second.

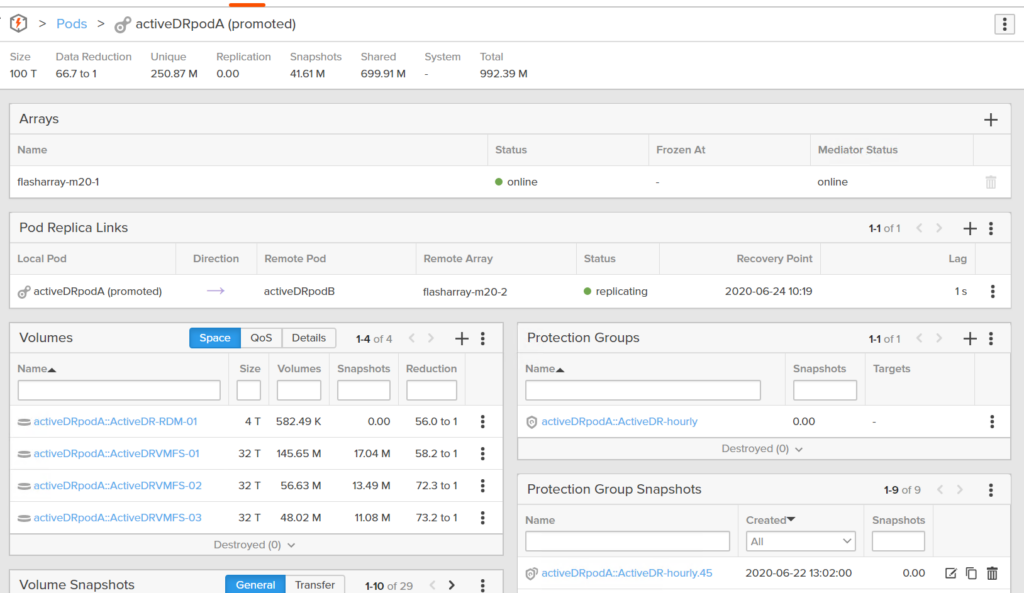

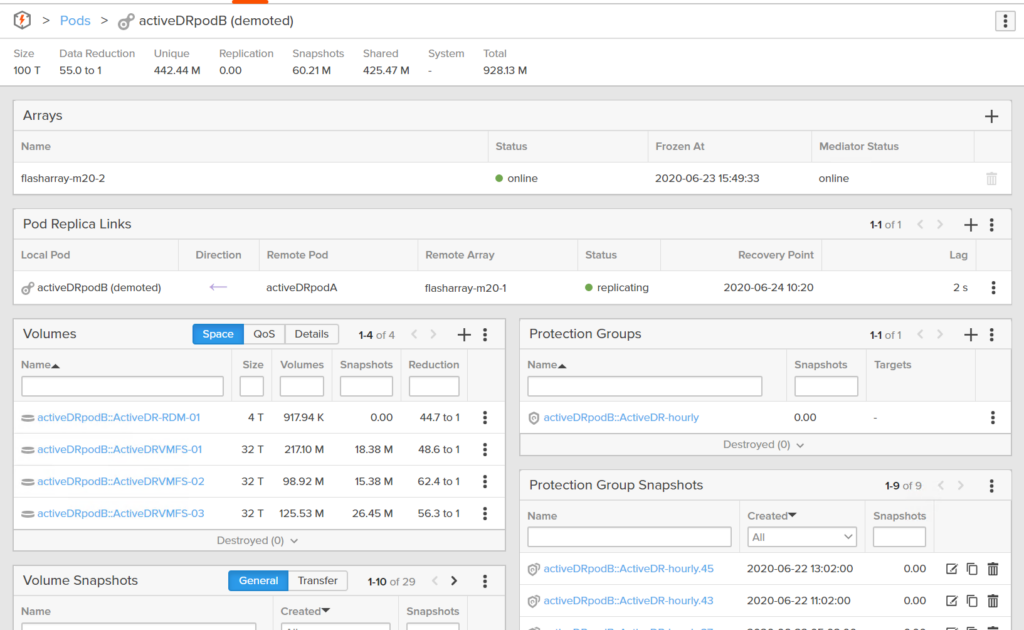

Source pod:

Target pod:

The target pod can, however, be made writable for recovery or even for non-disruptive testing. All of your volumes (and of course their data) are sent over, along with the volume snapshots and even protection groups and their protection group snapshots.

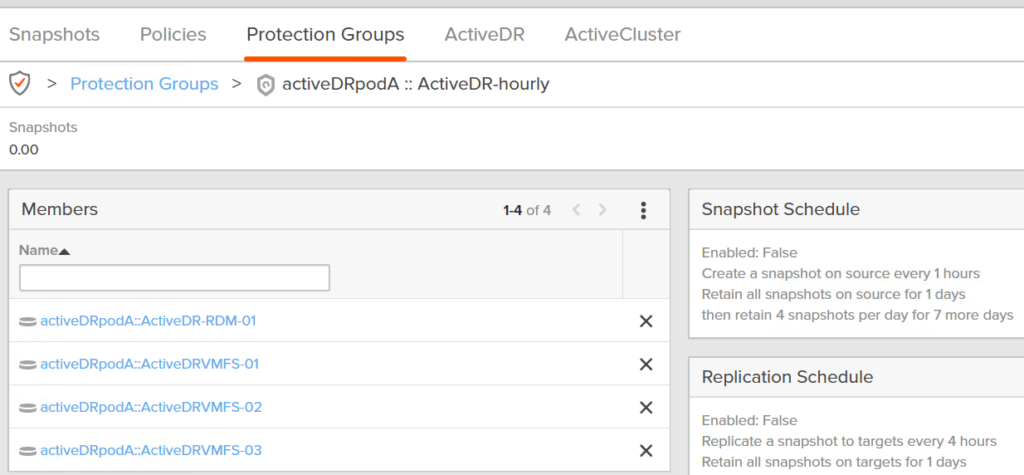

Yes, you can create protection groups in an ActiveDR pod, though protection groups in an ActiveDR-enabled pod are supported only for local snapshot policies.

So if you fail over, all of your volumes, their features (QoS, etc.), and your point-in-time copies are available on the target too. All of these are replicated to the target as quickly as possible.
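A linked pair of pods can be pictured as copying the whole container, not just the blocks. The sketch below is a hypothetical data model (the `Pod` class, `replicate_pod`, and setting names such as `qos_iops_limit` are illustrative, not a Purity API); it only shows which objects the text says travel with the pod, and that the target is not writable until you choose to make it so.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class Pod:
    """Hypothetical stand-in for a pod and the objects it contains."""
    name: str
    volumes: dict = field(default_factory=dict)            # volume -> settings such as QoS
    snapshots: dict = field(default_factory=dict)          # volume -> snapshot names
    protection_groups: dict = field(default_factory=dict)  # pgroup -> local snapshot policy
    writable: bool = True


def replicate_pod(source: Pod, target: Pod) -> None:
    """Bring `target` in line with `source`: volumes and their settings,
    volume snapshots, and protection groups all travel together. The target
    stays read-only until it is made writable for recovery or testing."""
    target.volumes = copy.deepcopy(source.volumes)
    target.snapshots = copy.deepcopy(source.snapshots)
    target.protection_groups = copy.deepcopy(source.protection_groups)
    target.writable = False


src = Pod("prod",
          volumes={"db01": {"qos_iops_limit": 50000}},
          snapshots={"db01": ["db01.snap1"]},
          protection_groups={"pg-local": {"snapshot_every_minutes": 60}})
dst = Pod("prod-dr")
replicate_pod(src, dst)
print(dst.volumes, dst.writable)  # {'db01': {'qos_iops_limit': 50000}} False

# Failover or test: make the target pod writable and start using it.
dst.writable = True
```

Deep copies keep the two pods independent, mirroring the point that the target holds different volumes with the same data.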

So what type of replication is this?

- Asynchronous. Writes are only acknowledged on the source. They are sent to the target some time later.

- Continuous. ActiveDR does not wait for some interval to send the data–it sends it pretty much as soon as it can. How fast depends on the available bandwidth of the WAN connection.

- Active/Passive. The source volumes are available only on the source array. The DATA is available on the second side, but in different volumes that hold the same data. So it can be recovered to, or tested, but there is a failover process; it is not transparent to a host/application.

When should I use it?

You need very low RPO (seconds). Or you need as close to zero as you can get, but the distance between datacenters is too great and the latency impact of synchronous replication would affect your applications. Furthermore, you want to protect your volumes, their configurations, and their point-in-times over distance. Protection groups are only about protecting data, not everything else.

ActiveCluster

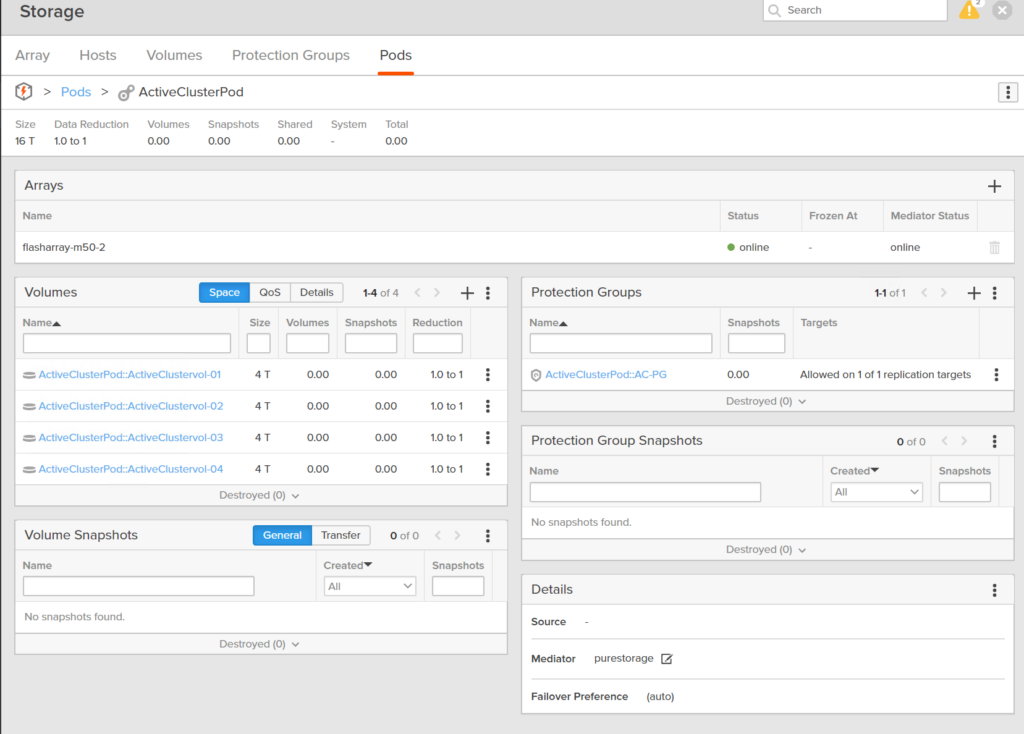

Introduced in Purity 5.0, ActiveCluster is a synchronous replication solution. Similar to ActiveDR, it protects your volumes, their configurations, and their snapshots. The main difference is that instead of copying a pod and its contents to a different pod on a different array, it makes that pod available on both arrays at the same time. A volume in the pod can be written to, and read from, via both arrays at the same time. Create a snapshot of that volume on array A, and that snapshot is immediately available on array B as well.

You take a pod:

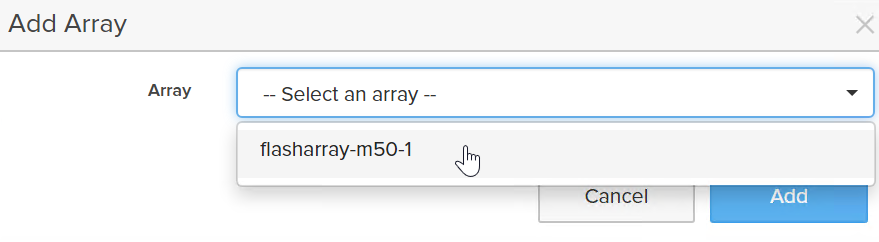

Add an array:

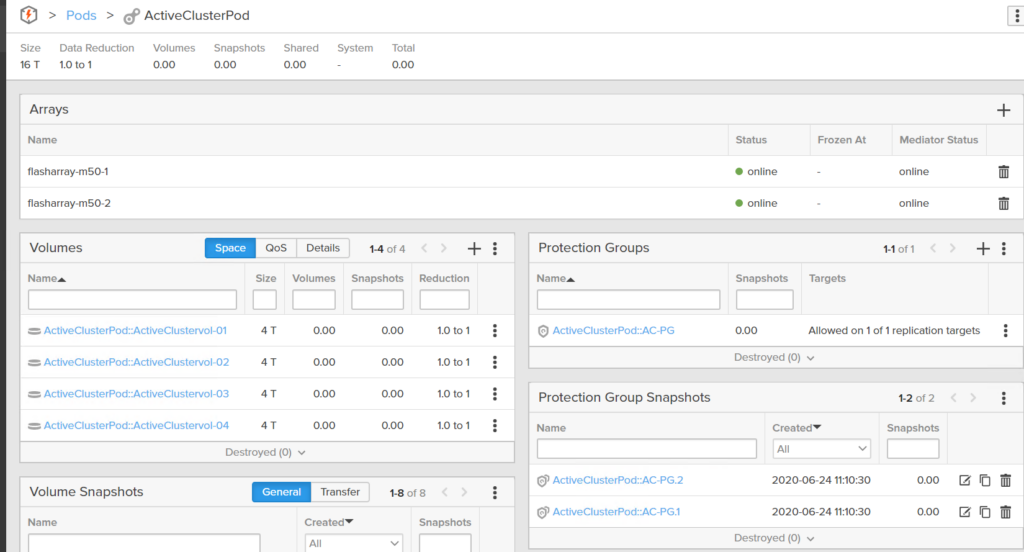

That pod and everything in it is now on both arrays:

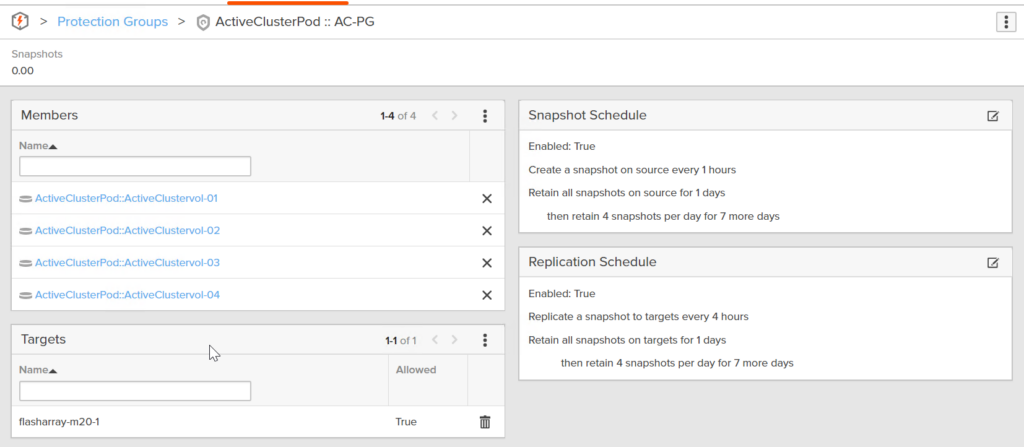

Pods protected by ActiveCluster (ActiveDR links pods, ActiveCluster stretches pods) can also have protection groups. Unlike in ActiveDR pods, though, protection groups in ActiveCluster pods can be enabled for protection group based replication:

So this allows volumes to be protected across two arrays, but also be protected over distance to a third array in a periodic fashion.

So what type of replication is this?

- Synchronous. Writes are acknowledged on both arrays before responding to the host. So any completed write is protected on two arrays.

- Continuous. ActiveCluster does not wait for some interval to send the data–it sends it as soon as the writes come in–if ActiveCluster has to wait, the host waits too.

- Active/Active. The volumes are on both arrays at the same time. There is no failover process and the loss of one array can be entirely non-disruptive to the host(s) accessing the storage.
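The cost of synchronous replication shows up directly in host write latency. The helper below is an illustrative model, not a measurement: it assumes exactly one inter-array round trip per synchronous write, and the 0.25 ms local service time is a hypothetical number. 11 ms is the maximum RTT Pure supports for ActiveCluster.

```python
def host_write_latency_ms(local_ms: float, rtt_ms: float, synchronous: bool) -> float:
    """Illustrative host-visible latency for a single write.

    Synchronous (ActiveCluster): the write is acknowledged only after the
    peer array has it, so the host waits at least one inter-array round trip.
    Asynchronous (ActiveDR, protection groups): the host waits only for the
    local array. Ignores queuing and protocol overhead.
    """
    return local_ms + (rtt_ms if synchronous else 0.0)


# Hypothetical 0.25 ms local service time, 11 ms RTT between sites.
print(host_write_latency_ms(0.25, 11, synchronous=True))   # 11.25
print(host_write_latency_ms(0.25, 11, synchronous=False))  # 0.25
```

That extra round trip is what buys zero RPO: every acknowledged write already exists on both arrays.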

When should I use it?

Well, if you need zero RPO (I need all of my data in both sites, always), only ActiveCluster can help. ActiveDR is for when you don't absolutely need zero RPO and you also don't want to take the host latency hit of waiting for the data to replicate. If protection is more important than write performance, then ActiveCluster is the choice. Pure supports up to 11 ms RTT between arrays for ActiveCluster replication. There is no such requirement for protection groups or ActiveDR.
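The "when should I use it?" guidance for the three options can be condensed into a rough decision helper. This is a hypothetical sketch of the rules stated in this post as of Purity 6.0, not an official sizing or support tool; the `Requirements` fields are invented names, and it ignores combinations such as ActiveCluster plus protection group replication to a third array.

```python
from dataclasses import dataclass

MAX_ACTIVECLUSTER_RTT_MS = 11  # supported limit stated in this post


@dataclass
class Requirements:
    """Hypothetical requirement flags; the names are illustrative only."""
    zero_rpo: bool = False          # no acknowledged write may ever be lost
    rpo_in_seconds: bool = False    # near-zero RPO (seconds) is required
    purity_6_or_later: bool = True
    multiple_targets: bool = False  # same data to more than one array at once
    non_pure_target: bool = False   # S3, Azure Blob, Google Cloud Storage, or NFS
    inter_site_rtt_ms: float = 0.0


def suggest_replication(req: Requirements) -> str:
    """Rough first pass at the guidance in this post (Purity 6.0)."""
    if req.zero_rpo:
        if req.inter_site_rtt_ms <= MAX_ACTIVECLUSTER_RTT_MS:
            return "ActiveCluster"
        return "No fit: ActiveCluster is supported only up to 11 ms RTT"
    # Features that, per this post, only protection groups provide today.
    needs_pgroups = (req.multiple_targets or req.non_pure_target
                     or not req.purity_6_or_later)
    if req.rpo_in_seconds:
        if needs_pgroups:
            return "No single fit: near-zero RPO needs ActiveDR, the rest needs protection groups"
        return "ActiveDR"
    return "Protection groups"  # periodic replication is good enough


print(suggest_replication(Requirements(zero_rpo=True, inter_site_rtt_ms=4)))
print(suggest_replication(Requirements(rpo_in_seconds=True, inter_site_rtt_ms=60)))
print(suggest_replication(Requirements(non_pure_target=True)))
```

Checking zero RPO first mirrors the post's framing: distance (RTT) rules ActiveCluster in or out before anything else matters.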

Support Matrix

Can a volume be protected by more than one of these features at once?

| AS OF PURITY 6.0 | ActiveDR | ActiveCluster | PGroup FlashArray/CBS target | PGroup Cloud/NFS target | PGroup Local Snapshots |
|---|---|---|---|---|---|
| ActiveDR | N/A | No | No | No | Yes |
| ActiveCluster | No | N/A | Yes | No | Yes |
| PGroup FlashArray/CBS target | No | Yes | N/A* | Yes | Yes |
| PGroup Cloud/NFS target | No | No | Yes | N/A | Yes |
| PGroup Local Snapshots | Yes | Yes | Yes | Yes | N/A* |
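If you want to check combinations programmatically, the matrix can be transcribed into a lookup table. The names `FEATURES`, `MATRIX`, `can_combine`, and `compatible_with` are hypothetical; the values are copied from the Purity 6.0 table and would need updating as support changes.

```python
FEATURES = [
    "ActiveDR",
    "ActiveCluster",
    "PGroup FlashArray/CBS target",
    "PGroup Cloud/NFS target",
    "PGroup Local Snapshots",
]

# Row i, column j: can FEATURES[i] and FEATURES[j] protect the same volume?
# Transcribed from the Purity 6.0 support matrix; None stands for the N/A
# cells on the diagonal (the asterisked footnote is not reproduced here).
MATRIX = [
    [None,  False, False, False, True],
    [False, None,  True,  False, True],
    [False, True,  None,  True,  True],
    [False, False, True,  None,  True],
    [True,  True,  True,  True,  None],
]


def can_combine(a: str, b: str):
    """Look up whether features `a` and `b` can be used on one volume."""
    return MATRIX[FEATURES.index(a)][FEATURES.index(b)]


def compatible_with(feature: str) -> list:
    """Every other feature that can share a volume with `feature`."""
    row = MATRIX[FEATURES.index(feature)]
    return [other for other, ok in zip(FEATURES, row) if ok]


print(compatible_with("ActiveDR"))       # ['PGroup Local Snapshots']
print(compatible_with("ActiveCluster"))  # ['PGroup FlashArray/CBS target', 'PGroup Local Snapshots']
```

Because the table is symmetric (if A works with B, B works with A), one matrix serves both lookup directions.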

Can’t wait until all the specs/docs on this are out. ActiveDR looks like a nice feature.

The things I wonder about are:

– Can I use ActiveDR if I have multiple FAs at site A and one target FA at site B?

– how does ActiveDR work with SRM?

Yes, ActiveDR can fan in or out, though only array-wide: a given set of volumes can replicate between only one pair of arrays for now. But a given array can replicate some volumes to one array and others to a different one. SRM support is in RC status, so it will be available soon, but it is not quite at GA.

I actually need to correct myself here: we don't support fan out/in at GA (we didn't complete testing on that in time). That is coming, though. Purity 6 is now officially released!

Fantastic news! You folks just went ahead and made your own native version of “RecoverPoint” (solid product for its time).

Thanks for the detailed post, Cody. We can’t wait to try out ActiveDR.

Agreed! It does look like a RecoverPoint-equivalent product. If ActiveDR works as claimed, I wouldn't use products such as Zerto anymore. The caveat is that you have to use Pure all the way... I hope ActiveDR lives up to its hype. Combined with SRM support, I could settle on the Pure platform for a while.

I added some more details here https://www.codyhosterman.com/2020/07/whats-new-in-purity-6-0-activedr/

Hi Cody, good technical insight as always. It would be good to have a simple slide per configuration, and possibly even a feature matrix, to help customers choose which solution to take advantage of.

Thank you! Good idea. I will relay this to our product team.

Do CBS and/or CloudSnap support SafeMode snapshots?

CBS works with SafeMode (though it is still Purity 5.3 only, so certain SafeMode features are not there yet). As for CloudSnap, I am not entirely sure of the interop; I am looking into it.

Sorry for the delay, but thanks for the info!

If I have a Protection Group with snaps on Array A sending to Array B, can I create an ActiveCluster on Array C sending to Array A without messing with the original Protection Group? The volumes sent from C to A will not be the same as what is in the Protection Group.