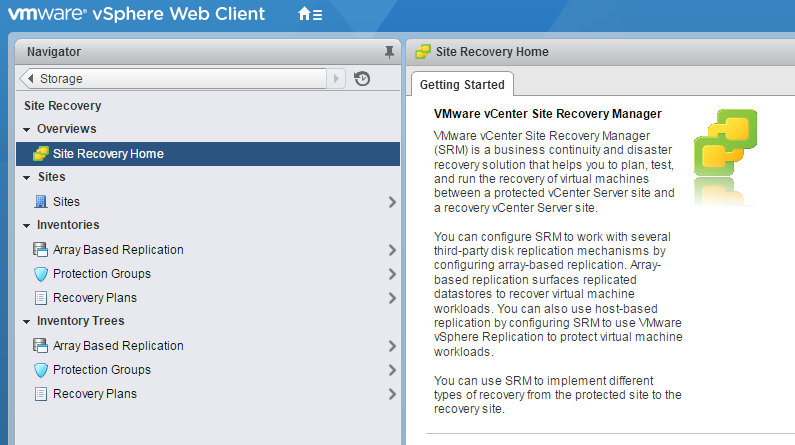

VMware vCenter Site Recovery Manager 6.0 was mostly a compatibility release, essentially getting it to work right with vCenter 6.0. That being said, there were a few new features (and some nice tweaks in the GUI) included in the release. One of the new features that sparked my interest was the set of SRM and Storage DRS compatibility enhancements.

Ben Meadowcroft, a VMware PM who works on SRM (among other things), blogged about this new feature, and VMware has published a KB article on it as well.

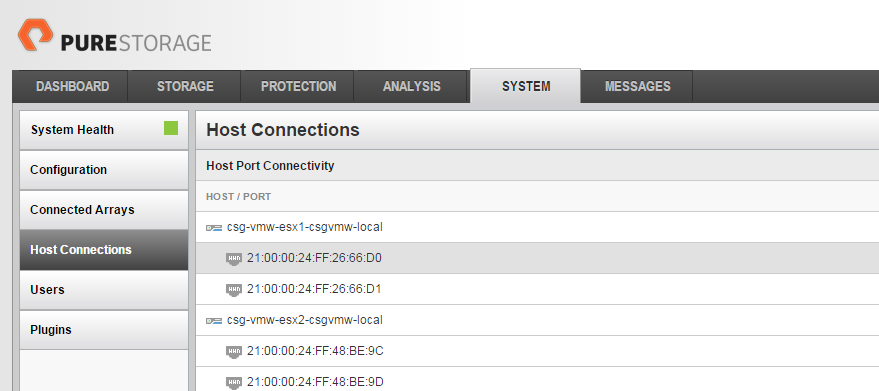

Ben covers most of the history of this in his post, so I will skip over that. Let’s take a closer look at the functionality instead. As an overview, SRM introduces three tag categories for a datastore:

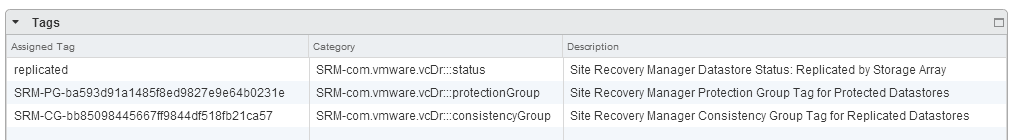

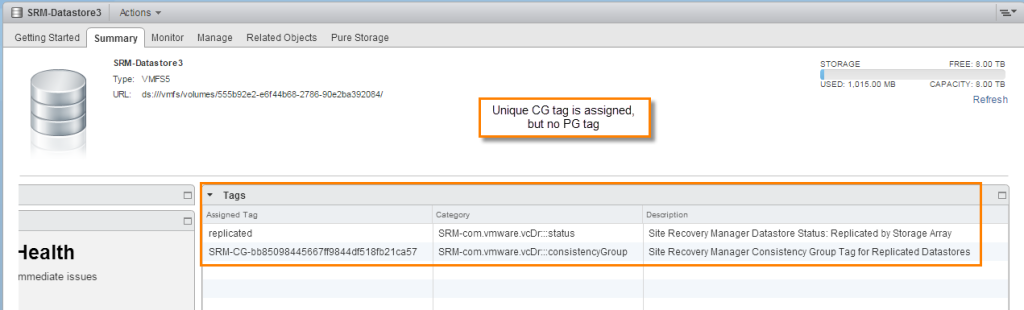

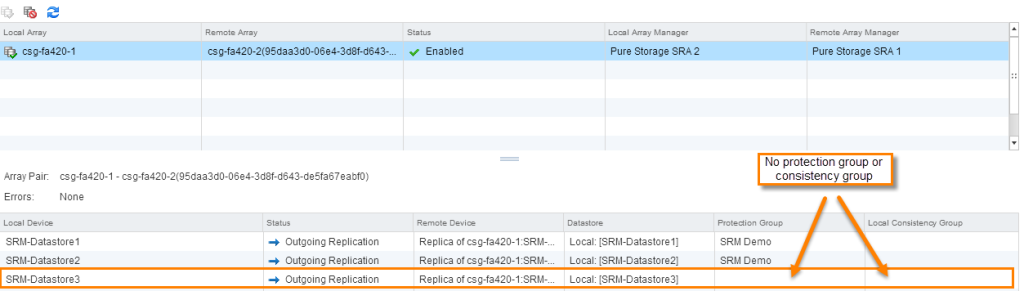

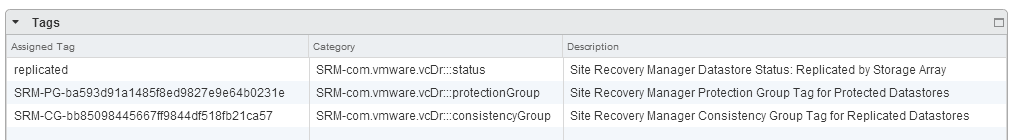

- SRM-com.vmware.vcDr:::status (indicates that the datastore is replicated)

- SRM-com.vmware.vcDr:::consistencyGroup (indicates what CG the datastore belongs to, if any)

- SRM-com.vmware.vcDr:::protectionGroup (indicates what PG the datastore belongs to, if any)

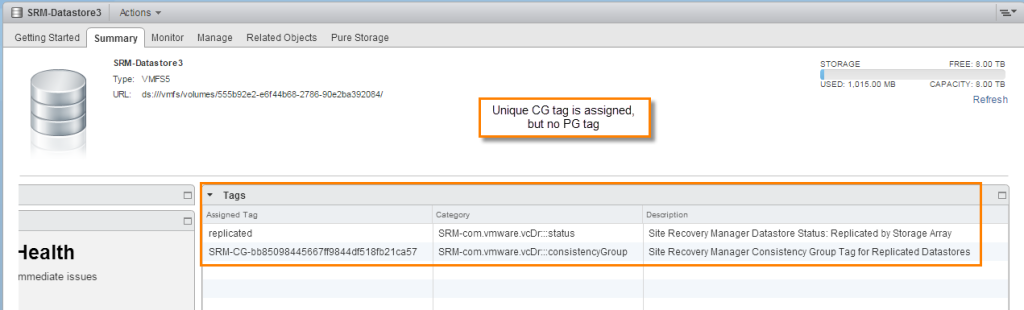

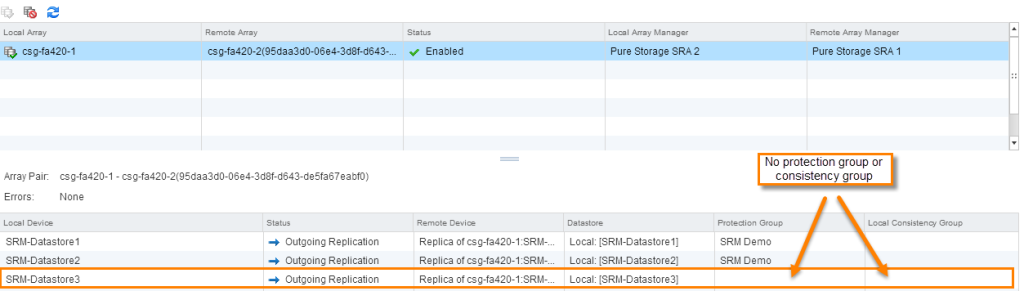

The replication status tag is assigned as soon as SRM (and its respective Storage Replication Adapter) discovers the datastore to be replicated through a Device Discovery operation. Upon this discovery, a consistency group tag is also assigned. If the SRA does not advertise the volume as being in a consistency group, a unique one is created for that volume, basically indicating that it is in its own consistency group.

A protection group tag is not assigned until the volume is actually added to a protection group. Once the datastore is assigned to a protection group, it receives the tag (remember, a volume can only be in one PG, and SRM only supports a volume being in one CG, so there will only ever be one of each to assign).
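To make the order of events concrete, here is a minimal Python sketch of the tagging sequence just described. It is only a model, not SRM code: the function names, the `Replicated` tag value, and the `unique-<datastore>` naming for a standalone consistency group are assumptions made up for illustration. Only the three category names come from SRM.

```python
from typing import Dict, Optional

# Category names as SRM creates them in vCenter.
STATUS = "SRM-com.vmware.vcDr:::status"
CONSISTENCY_GROUP = "SRM-com.vmware.vcDr:::consistencyGroup"
PROTECTION_GROUP = "SRM-com.vmware.vcDr:::protectionGroup"


def tags_after_discovery(datastore: str, sra_cg: Optional[str] = None) -> Dict[str, str]:
    """Tags a replicated datastore receives after device discovery (hypothetical model)."""
    # If the SRA reports no consistency group, the volume gets one of its own.
    # The "unique-" prefix is an invented placeholder, not SRM's real naming.
    cg = sra_cg if sra_cg is not None else f"unique-{datastore}"
    return {STATUS: "Replicated", CONSISTENCY_GROUP: cg}


def tags_after_protection(tags: Dict[str, str], pg: str) -> Dict[str, str]:
    """Return a copy of the tags with the protection group tag added."""
    updated = dict(tags)
    updated[PROTECTION_GROUP] = pg  # one PG per volume, so a single value suffices
    return updated


discovered = tags_after_discovery("ds01")                    # no PG tag yet
protected = tags_after_protection(discovered, "pg-finance")  # PG tag added on membership
print(protected)
```

The point of the sketch is simply that the status and consistency group tags arrive together at discovery time, while the protection group tag only shows up later, when the datastore joins a protection group.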

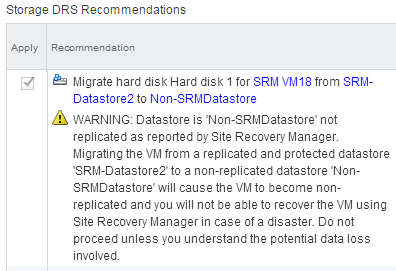

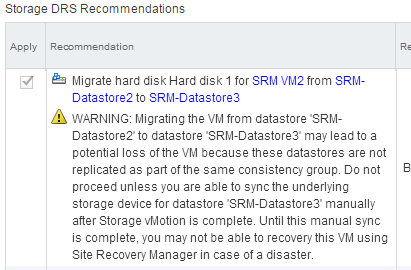

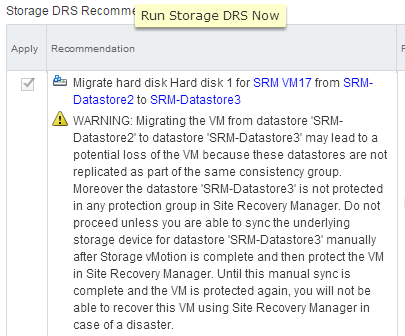

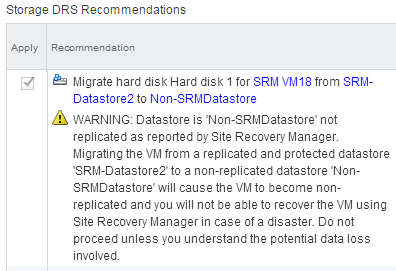

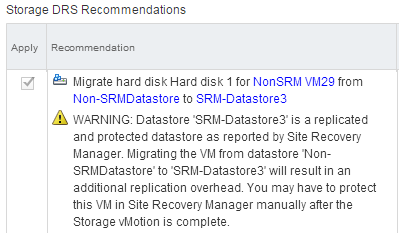

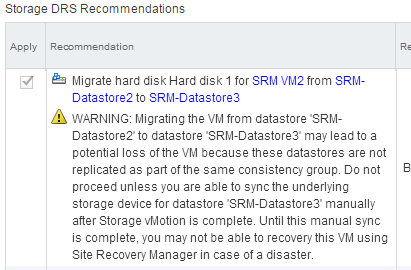

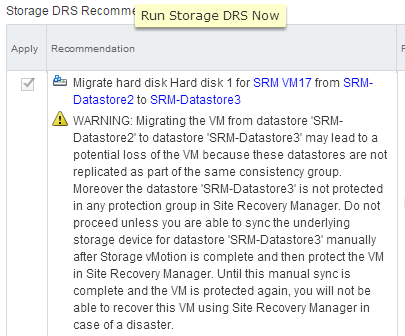

So what do these tags do? Storage DRS takes note of these tags and will not make any automatic move if the Storage vMotion would violate any of them. This means it will not move a VM from one datastore to another if:

1) Source datastore is replicated and target is not

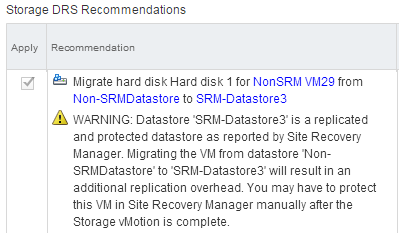

2) Source datastore is NOT replicated and target is

3) Source datastore is in a different consistency group than the target

4) Source datastore is replicated AND in a protection group, but target is replicated and NOT in a protection group

Basically, Storage DRS will not move a VM from one datastore to another if it determines that the move would change the configuration of a protection group or the consistency of a virtual machine.
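The four conditions above can be expressed as a small check. This is a minimal Python sketch of the rules as listed in this post, not how Storage DRS is actually implemented; the `DatastoreTags` class and the `violated_rule` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DatastoreTags:
    """Hypothetical view of the three SRM tags on one datastore."""
    replicated: bool = False
    consistency_group: Optional[str] = None
    protection_group: Optional[str] = None


def violated_rule(source: DatastoreTags, target: DatastoreTags) -> Optional[str]:
    """Return the first rule a move would violate, or None if the move is allowed."""
    if source.replicated and not target.replicated:
        return "source is replicated, target is not"
    if not source.replicated and target.replicated:
        return "source is not replicated, target is"
    if source.consistency_group != target.consistency_group:
        return "source and target are in different consistency groups"
    # At this point both datastores have the same replication status.
    if (source.replicated and source.protection_group is not None
            and target.protection_group is None):
        return "source is in a protection group, target is not"
    return None


src = DatastoreTags(replicated=True, consistency_group="cg1", protection_group="pg1")
dst = DatastoreTags(replicated=True, consistency_group="cg1")
print(violated_rule(src, dst))
```

In this example the two datastores share a consistency group but only the source is in a protection group, so the fourth rule is the one reported.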

So automatic Storage DRS will never make these moves. It may suggest them if it cannot find a better option, but it will never carry out a move on its own that violates these rules. If for some reason you do want such a move to occur, you can always override the warning and execute the operation yourself.

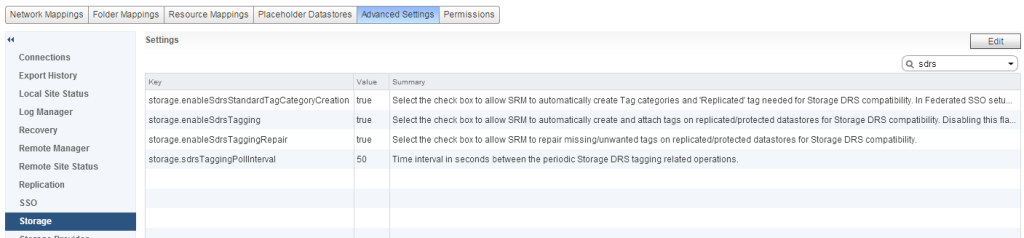

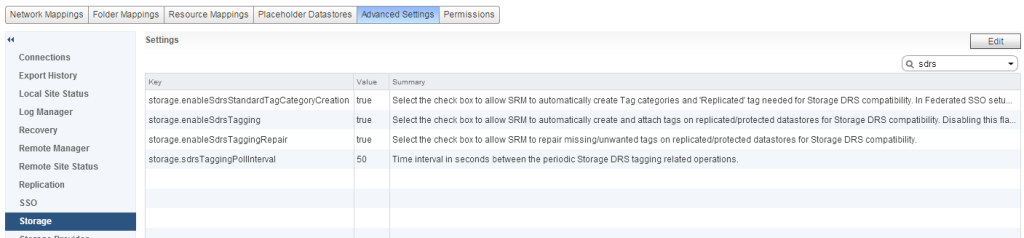

Let’s take a look now at the relevant configurable behavior in SRM.

There are four options:

| Setting Name | Description and Default Value |
| --- | --- |
| storage.enableSdrsStandardTagCategoryCreation | This creates the three tag categories in vCenter for you. |
| storage.enableSdrsTagging | This actually applies the tags to the datastores when discovered etc. |
| storage.enableSdrsTaggingRepair | This allows SRM to fix datastore tag when something has changed (PG/CG membership changes for instance). |
| storage.sdrsTaggingPollInterval | How often SRM checks tags to make sure they are accurate. |

All of these options are enabled by default. Well, kinda: the last one is an interval rather than a switch, and it is set to 50 seconds.

So, like the table says, the enableSdrsStandardTagCategoryCreation option is pretty straightforward: it creates the three categories. You can, of course, create them yourself if you choose to. I am not sure why you would, though, with the exception of the reason stated in the option description:

“In Federated SSO setups, this flag should be disabled and the tags and tag categories should be manually created.”

When enableSdrsTagging is enabled, SRM will place the correct tags at the appropriate times, such as when a new device is discovered or its protection group membership changes.

The option enableSdrsTaggingRepair takes a little more thought. When it is disabled, new tags will still be placed on datastores: the replication status and CG tags during device discovery, and the PG tag upon adding the datastore to a new or different PG. But SRM will not fix or remove tags. If you remove a datastore from a PG or delete the PG, the tag will remain. If you delete the SRM-provided tag and replace it with your own, SRM will not fix it. If you add the datastore to a new PG, though, SRM will remove the old PG tag if one exists and then assign the correct one. It won’t ever do that unless you make that PG change.

A note about the repair functionality: if you decide to delete an SRM-provided tag and make your own, it will not last long while this feature is enabled. SRM will right things quite quickly (50 seconds or less). So if you want more control over this tagging for SRM-related devices, disabling repair is an option. Of course, disabling it can easily lead to stale information in the tags, so do so at your own risk.
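The difference between the periodic repair and the event-driven tagging can be modeled in a few lines. This is a minimal Python sketch of the behavior as I observed it, not SRM's implementation; `poll` and `add_to_protection_group` are hypothetical names, and only the setting names, their defaults, and the category name come from SRM.

```python
from typing import Dict

PROTECTION_GROUP = "SRM-com.vmware.vcDr:::protectionGroup"

# Defaults as described in this post: three switches on, poll interval of 50 seconds.
DEFAULTS = {
    "storage.enableSdrsStandardTagCategoryCreation": True,
    "storage.enableSdrsTagging": True,
    "storage.enableSdrsTaggingRepair": True,
    "storage.sdrsTaggingPollInterval": 50,
}


def poll(current: Dict[str, str], expected: Dict[str, str], repair: bool) -> Dict[str, str]:
    """One polling pass: tags are only corrected when repair is enabled."""
    return dict(expected) if repair else dict(current)


def add_to_protection_group(current: Dict[str, str], pg: str) -> Dict[str, str]:
    """PG change event: the old PG tag is replaced even when repair is disabled."""
    updated = dict(current)
    updated[PROTECTION_GROUP] = pg
    return updated


# The datastore was removed from its PG, so no PG tag is expected any more.
stale = {PROTECTION_GROUP: "old-pg"}
expected: Dict[str, str] = {}

print(poll(stale, expected, repair=False))     # stale tag survives
print(poll(stale, expected, repair=True))      # stale tag is cleaned up
print(add_to_protection_group(stale, "new-pg"))  # a PG change always retags
```

With repair disabled, the only thing that ever rewrites the PG tag in this model is an actual protection group change, which matches the stale-tag risk mentioned above.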

In general, I think this is a great enhancement. I would like to see more granular control from the SRM side of things (enabling or disabling CG auto-tagging when a CG doesn’t exist for that device, for instance). This should also have a place in non-SRM environments; it’s just a bit more work because you have to do the tagging yourself.

In Part II, I will take a look at how this works with the FlashArray SRA and what’s involved in that.