NVMe. A continued march to rid ourselves of the vestigial SCSI standard. As I have said in the past, SCSI was designed for spinning disk–where performance and density are not friendly to one another. NVMe, however, was built for flash. The FlashArray was built for, well, flash. Shocking, I know.

Putting SCSI in front of flash, at any layer, constricts the performance density that can be offered. It isn’t just about latency, but also about throughput and IOPS per GB. A spinning disk can get larger, but it doesn’t really get faster. Flash performance, however, scales much better with capacity, so larger flash drives don’t get slower per GB. But this requires both the hardware and the software to take advantage of it. SCSI has bottlenecks, notably low queue limits. NVMe supports far more queues, and far deeper ones (up to 65,535 I/O queues, each up to 65,536 commands deep). That opens up the full performance, and specifically the performance density, of your flash and, in turn, your array. We added NVMe to our NVRAM, then to the internal flash in the chassis, then NVMe-oF to our expansion shelves, then NVMe-oF to our front end from the host. The next step is to work with our partners to enable NVMe in their stacks. We worked with VMware to release it in ESXi 7.0. More info on all of this in the following posts:
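To make the queue-limit argument concrete: Little’s law says sustained IOPS is bounded by the number of outstanding I/Os divided by per-I/O latency. The sketch below uses hypothetical numbers (a single SCSI queue of depth 32 and 200 µs latency) purely for illustration; they are not measured FlashArray or HBA figures.

```python
def max_iops(outstanding_ios: int, latency_s: float) -> float:
    """Little's law: sustained throughput = concurrency / latency."""
    return outstanding_ios / latency_s

LATENCY_S = 200e-6  # hypothetical 200 microsecond per-I/O latency

# Hypothetical single SCSI device queue with a depth of 32.
scsi_ceiling = max_iops(32, LATENCY_S)

# NVMe protocol maximum: 65,535 I/O queues x 65,536 commands per queue.
nvme_outstanding = 65_535 * 65_536
nvme_ceiling = max_iops(nvme_outstanding, LATENCY_S)

print(f"SCSI (QD 32) ceiling: {scsi_ceiling:,.0f} IOPS")
print(f"NVMe protocol ceiling: {nvme_ceiling:,.0f} IOPS")
```

With these inputs the SCSI queue caps out at 160,000 IOPS no matter how fast the media is. The NVMe figure is a protocol ceiling, not an achievable rate; the point is that with NVMe the queueing model stops being the bottleneck.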

Initially, we offered NVMe-oF via RoCEv2 (RDMA over Converged Ethernet); as of Purity 6.1, we also support NVMe-oF via Fibre Channel.

There is still some ESXi work to do around NVMe:

- NVMe-oF TCP

- vVols & NVMe

- Replace VM-to-datastore (vSCSI) stack

VMware has these projects in progress, as mentioned in its VMworld sessions on storage. There is still some work to be done, but the full transition to NVMe is closer than ever.

Requirements

For now, let’s talk about configuring NVMe-oF/FC on the FlashArray and ESXi 7 (recommended release is 7.0 U1 or later). First off, some requirements:

- vSphere 7.0, though at this point we recommend 7.0 Update 1.

- Purity 6.1.x.

- Switched fabric. We don’t support direct-connect NVMe-oF/FC, so do your zoning. Zoning is identical to SCSI-based zoning. You can do this at any point in the process, but ideally do it first so you catch connectivity issues early.

- Compatible NVMe-oF FC HBAs. The list is here: https://www.vmware.com/resources/compatibility/search.php?deviceCategory=io&details=1&pFeatures=361&page=1&display_interval=10&sortColumn=Partner&sortOrder=Asc

- FlashArray//X or //C with FC ports. To have NVMe enabled, reach out to support.

Confirm FlashArray NVMe-oF Support

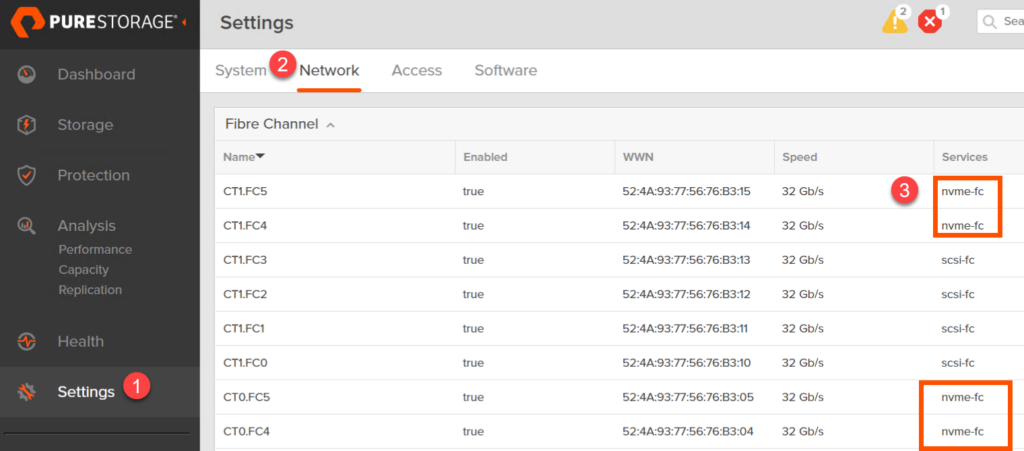

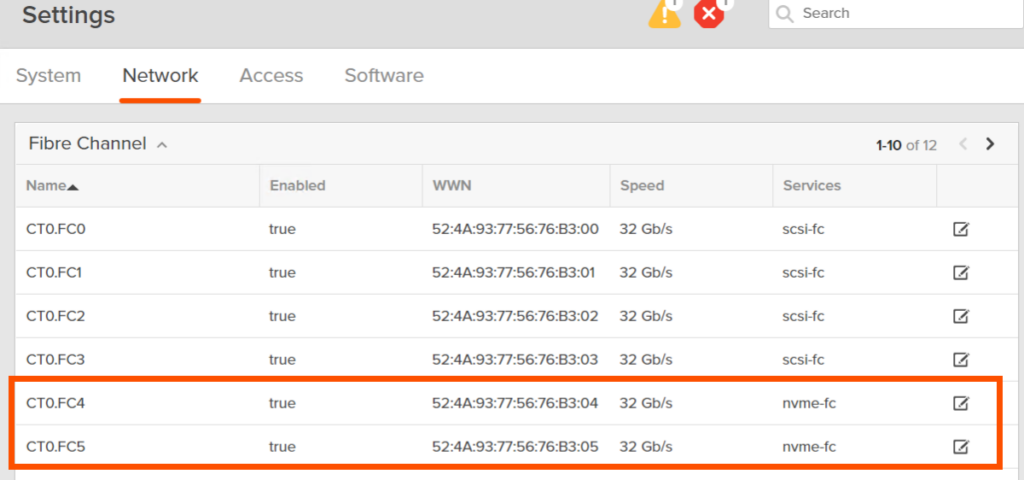

To confirm that it is enabled, log in to your array and go to Settings > Network > Fibre Channel. You should see nvme-fc on the ports. Note that a given FC port runs either SCSI or NVMe, not both.

If you do not see this, it needs to be enabled on your FlashArray–reach out to Pure support to have this done.

ESXi Host Configuration

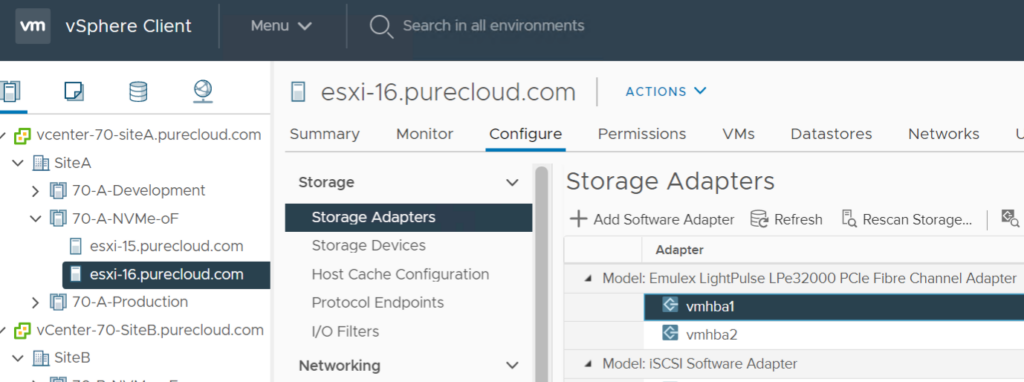

The first configuration step is to enable NVMe-oF on your ESXi host(s). In my environment, I have a cluster with two hosts, each with supported NVMe-oF/FC HBAs:

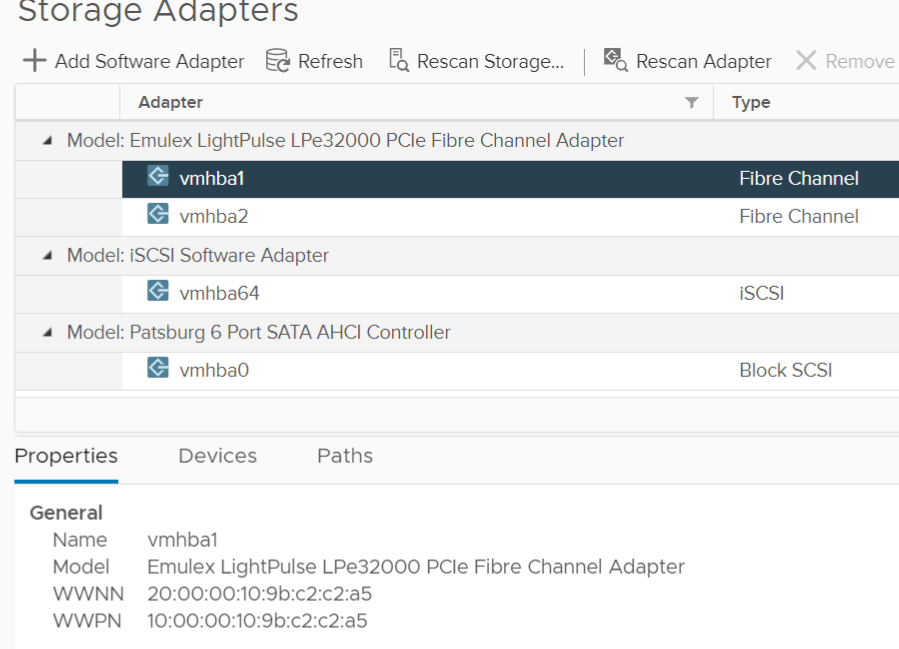

With some earlier NVMe HBA drivers, when you click on Storage Adapters there will be no listing for controllers, namespaces, etc. This is because the NVMe “feature” driver is not enabled by default.

With newer versions of certain adapters, such as Emulex cards at version 12.8 or later, the NVMe feature is enabled by default, so the following step is not necessary.

For versions that do not have it enabled by default, this change cannot be made in the vSphere Client; it can only be made from the command line or with a tool that can make esxcli calls, such as PowerCLI.

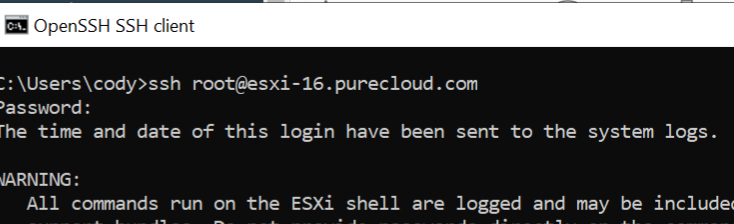

SSH into the host(s).

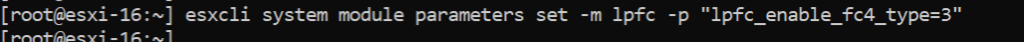

Now run the command for your HBA’s driver to enable this feature. For Emulex (lpfc driver):

esxcli system module parameters set -m lpfc -p "lpfc_enable_fc4_type=3"

For QLogic (qlnativefc driver):

esxcfg-module -s "ql2xnvmesupport=1" qlnativefc

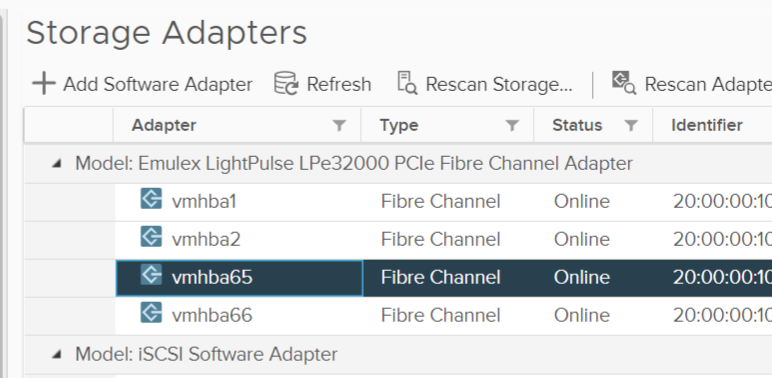

Then reboot the host. Repeat this on each host in the cluster. Once the host has come back up, navigate back to the storage adapters. You will see new adapters added (one for each port), which will likely be something like vmhba65 and so on.
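If you want to confirm the Emulex parameter took effect, `esxcli system module parameters list -m lpfc` prints a table with Name, Type, Value and Description columns. The parser below is a minimal sketch that assumes that header-plus-dashed-rule layout; the sample text is illustrative, with descriptions elided, and was not captured from a real host. For QLogic, the same command with `-m qlnativefc` should list `ql2xnvmesupport` instead (also an assumption).

```python
import re

def module_params(output: str) -> dict:
    """Parse `esxcli system module parameters list -m <module>` table output
    into {parameter name: value}; unset parameters map to ""."""
    lines = output.strip("\n").splitlines()
    # Row 1 is the dashed rule; each run of dashes marks one column's span.
    spans = [m.span() for m in re.finditer(r"-+", lines[1])]
    (name_start, name_end), _, (val_start, val_end) = spans[:3]
    params = {}
    for line in lines[2:]:
        name = line[name_start:name_end].strip()
        if name:
            params[name] = line[val_start:val_end].strip()
    return params

# Illustrative output only; descriptions elided. Not captured from a real host.
SAMPLE = """\
Name                  Type  Value  Description
--------------------  ----  -----  -----------
lpfc_enable_fc4_type  int   3      ...
lpfc_lun_queue_depth  int          ...
"""

params = module_params(SAMPLE)
if params.get("lpfc_enable_fc4_type") == "3":
    print("NVMe FC4 type is set; reboot the host if you have not already.")
else:
    print("lpfc_enable_fc4_type is not 3; re-run the esxcli set command.")
```

Slicing by the dashed rule, rather than splitting on whitespace, keeps an empty Value column from shifting the Description text into the value field.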

Once you click on one, you will see more information appear in the details panel:

If your zoning is complete at this point (I don’t cover zoning here, but it is the same as with SCSI over FC, and even the WWNs are the same on both sides), the controllers will appear automatically. Unlike with RoCE, you do not need to configure the controllers manually; they are auto-discovered. So if you do not see anything listed here, make sure zoning is complete.

You can see this event in the vmkernel log on the ESXi host (/var/log/vmkernel.log):

    2021-01-16T01:29:10.112Z cpu22:2098492)NVMFDEV:302 Adding new controller nqn.2010-06.com.purestorage:flasharray.6d122f70cac7d785 to active list
    2021-01-16T01:29:10.112Z cpu22:2098492)NVMEDEV:2895 disabling controller…
    2021-01-16T01:29:10.112Z cpu22:2098492)NVMEDEV:2904 enabling controller…
    2021-01-16T01:29:10.112Z cpu22:2098492)NVMEDEV:877 Ctlr 257, queue 0, update queue size to 31
    2021-01-16T01:29:10.112Z cpu22:2098492)NVMEDEV:2912 reading version register…
    2021-01-16T01:29:10.112Z cpu22:2098492)NVMEDEV:2926 get controller identify data…
    2021-01-16T01:29:10.112Z cpu22:2098492)NVMEDEV:5101 Controller 257, name nqn.2010-06.com.purestorage:flasharray.6d122f70cac7d785#vmhba65#524a93775676b304:524a93775676b304
    2021-01-16T01:29:10.112Z cpu22:2098492)NVMEDEV:4991 Ctlr(257) nqn.2010-06.com.purestorage:flasharray.6d122f70cac7d785#vmhba65#524a93775676b304:524a93775676b304 got slot 0
    2021-01-16T01:29:10.112Z cpu22:2098492)NVMEDEV:6156 Succeeded to create recovery world for controller 257
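To check discovery across many hosts without reading logs by eye, you could scan vmkernel.log for the controller-discovery message. This is a minimal sketch that keys on the exact "Adding new controller … to active list" wording in the excerpt above; other ESXi builds may word it differently (assumption). The same array NQN may be logged more than once, for example once per path, so the function de-duplicates.

```python
import re

# The discovery message from the vmkernel.log excerpt above. Other ESXi
# builds may word this differently (assumption).
ADD_CTRL = re.compile(r"Adding new controller (\S+) to active list")

def discovered_controllers(log_text: str) -> list:
    """Return controller NQNs in first-seen order, without duplicates."""
    found = []
    for nqn in ADD_CTRL.findall(log_text):
        if nqn not in found:
            found.append(nqn)
    return found

SAMPLE = (
    "2021-01-16T01:29:10.112Z cpu22:2098492)NVMFDEV:302 Adding new controller "
    "nqn.2010-06.com.purestorage:flasharray.6d122f70cac7d785 to active list\n"
    "2021-01-16T01:29:10.112Z cpu22:2098492)NVMEDEV:2895 disabling controller...\n"
)

for nqn in discovered_controllers(SAMPLE):
    print(nqn)
```

On a real host you would pass in the contents of /var/log/vmkernel.log instead of the sample string.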

The next step is to create the host object on the FlashArray. In NVMe-oF, initiators use something called an NVMe Qualified Name (NQN). The initiator has one and so does the target (the FlashArray). With NVMe-oF/FC, NQNs do not replace FC WWNs; they both exist. The WWN of each side is what is advertised on the FC layer to enable physical connectivity and zoning. The NQN is what enables the NVMe layer to communicate with the correct endpoints on the FC fabric. You can think of it much like IP networking: the WWN plays the role of the MAC address, and the NQN plays the role of the IP address.
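Because WWNs and NQNs coexist on NVMe-oF/FC, it is easy to paste the wrong identifier into a host object. The sketch below is a loose sanity check against the general NQN shape from the NVMe specification ("nqn." plus a year-month, a reverse domain name, an optional colon-separated string, 223 bytes maximum). It is not a complete validator, and the WWN-style test string is simply the target WWN from the log above with colons added.

```python
import re

# General NQN shape from the NVMe specification: "nqn." + year-month +
# "." + reverse domain name, optionally ":" + a vendor-defined string.
NQN_RE = re.compile(
    r"^nqn\.\d{4}-(\d{2})\.[A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+(?::.+)?$"
)
MAX_NQN_BYTES = 223  # NVMe limit on NQN length (UTF-8 bytes)

def looks_like_nqn(name: str) -> bool:
    """Loose sanity check; not a full validator of the NVMe naming rules."""
    if len(name.encode("utf-8")) > MAX_NQN_BYTES:
        return False
    m = NQN_RE.match(name)
    return m is not None and 1 <= int(m.group(1)) <= 12

print(looks_like_nqn("nqn.2010-06.com.purestorage:flasharray.6d122f70cac7d785"))
print(looks_like_nqn("52:4a:93:77:56:76:b3:04"))
```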

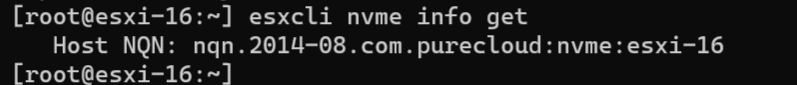

So, connect at the physical layer (WWN zoning), then at the logical layer (NQNs). For each ESXi host, you need to create a host object on the FlashArray, then add the host’s NQN to it. So where do you get the NQN? Not from the vSphere Client today; for now, you need to use esxcli. SSH back into the ESXi host and run:

esxcli nvme info get
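If you are collecting NQNs from several hosts, a small helper can pull the NQN out of the command output. This is a minimal sketch that assumes the NQN appears as a whitespace-delimited token starting with "nqn."; the "Host NQN" label and the NQN in the sample are hypothetical, not taken from a real host.

```python
import re
from typing import Optional

def host_nqn(esxcli_output: str) -> Optional[str]:
    """Return the first NQN-looking token in `esxcli nvme info get` output."""
    match = re.search(r"\bnqn\.\S+", esxcli_output)
    return match.group(0) if match else None

# Hypothetical output; the NQN below is made up, not a real host's.
SAMPLE = "   Host NQN: nqn.2014-08.com.example:nvme:esxi-01\n"
print(host_nqn(SAMPLE))
```

Searching for the token rather than a fixed label keeps the helper working if the label text differs between ESXi releases.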

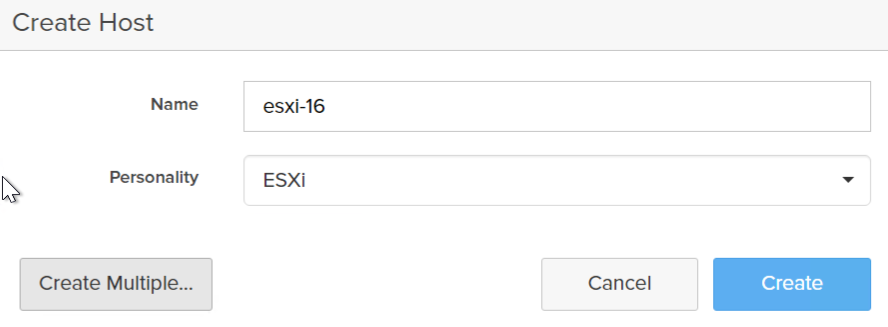

Copy the NQN, then log in to the FlashArray. Create a host object for the ESXi host (if one already exists for it with SCSI over FC, create a new, separate FlashArray host object for NVMe).

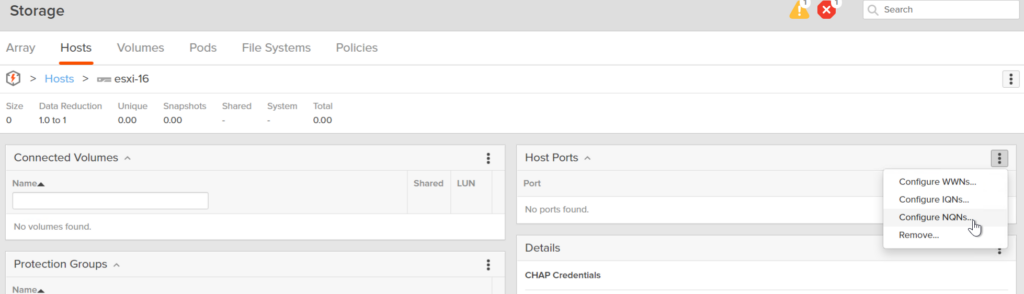

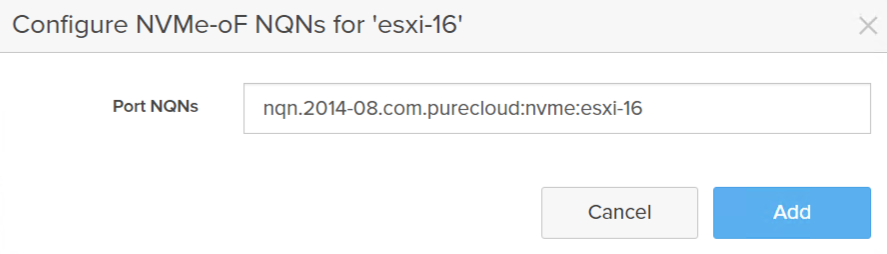

Give the host a name, and choose the ESXi personality. Next navigate to that new host and click on the vertical ellipsis and choose Configure NQNs.

Add that NQN to the input box and click Add.

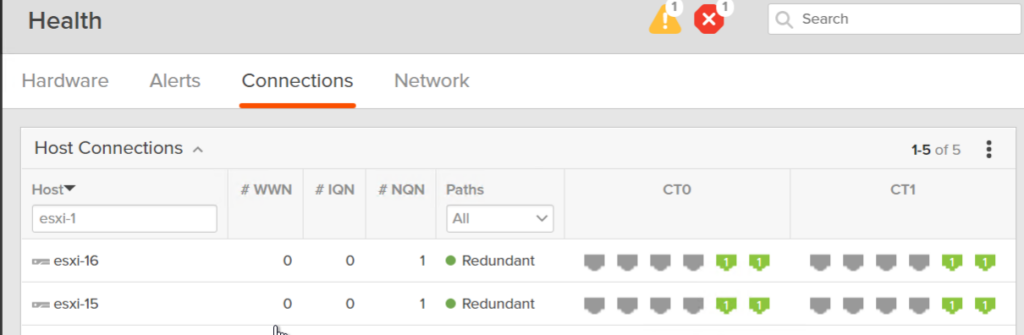

Assuming your zoning is done, flip over to the Health screen, followed by Connections. In the Host Connections panel, find your new host(s) and ensure they are redundantly connected: to both array controllers, and to each controller through more than one port.

If they are not, ensure zoning and/or cabling is correct. If you have not zoned yet, you can find the NVMe-oF/FC WWNs on the Settings > Network > Fibre Channel panel.
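The redundancy rule above (both controllers, more than one port on each) can be expressed as a small check. This sketch takes hypothetical (controller, port) pairs such as ("CT0", "FC0"); it does not read anything from the FlashArray, so how you gather the path list is up to you.

```python
from collections import defaultdict

def is_redundant(paths) -> bool:
    """paths: iterable of (controller, port) pairs seen for one host,
    e.g. ("CT0", "FC0"). Redundant means both controllers are reached,
    each through more than one port."""
    ports_by_ctrl = defaultdict(set)
    for ctrl, port in paths:
        ports_by_ctrl[ctrl].add(port)
    return len(ports_by_ctrl) >= 2 and all(
        len(ports) >= 2 for ports in ports_by_ctrl.values()
    )

# Hypothetical path lists for illustration.
good = [("CT0", "FC0"), ("CT0", "FC2"), ("CT1", "FC0"), ("CT1", "FC2")]
one_ctrl = [("CT0", "FC0"), ("CT0", "FC2")]
one_port = [("CT0", "FC0"), ("CT0", "FC2"), ("CT1", "FC0")]
for name, paths in [("good", good), ("one_ctrl", one_ctrl), ("one_port", one_port)]:
    print(name, is_redundant(paths))
```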

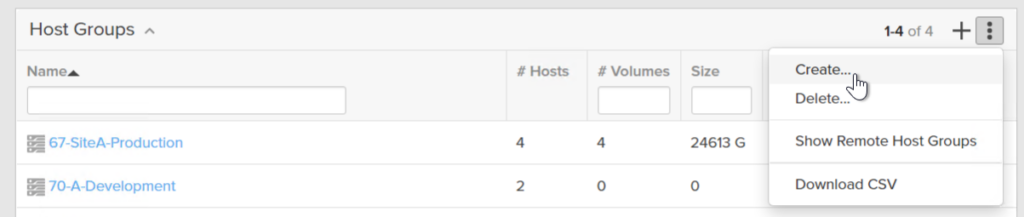

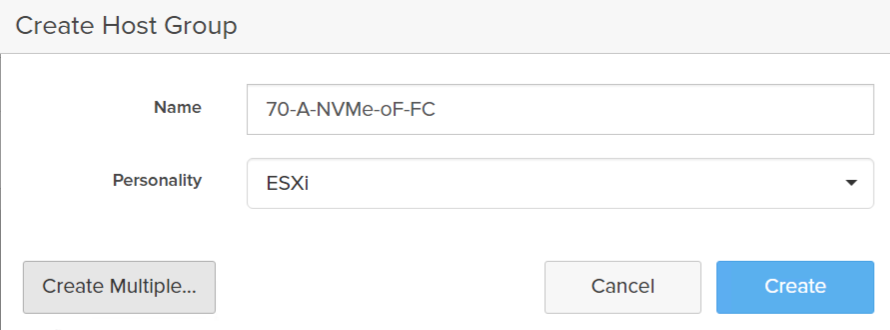

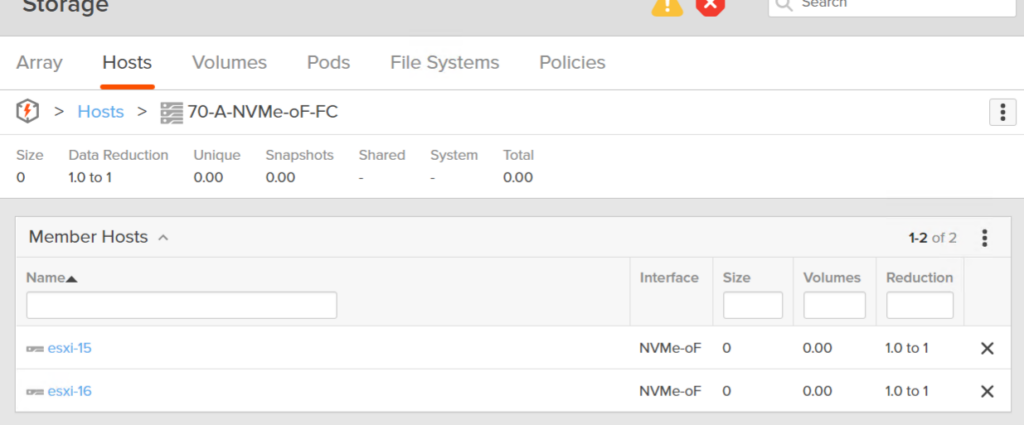

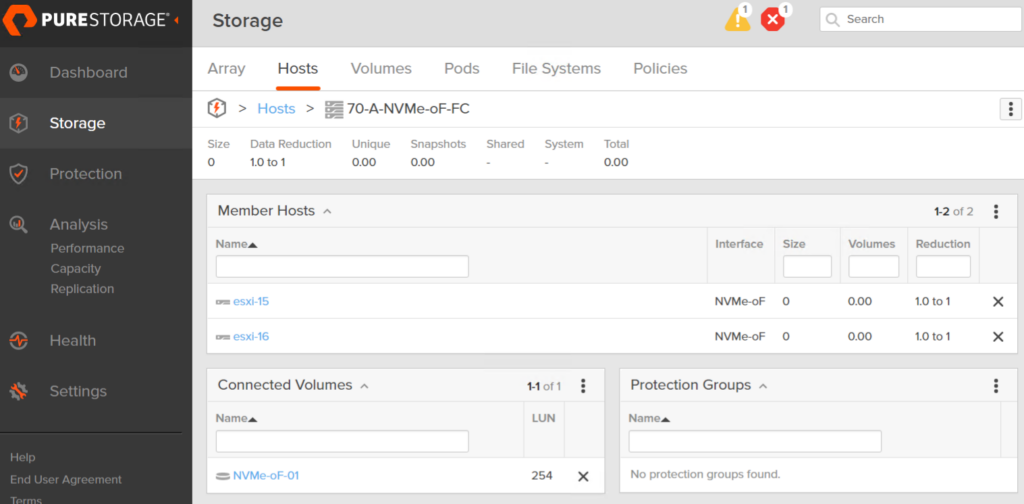

Now create a new host group and add all of those hosts into it:

Give it a name that makes sense for the cluster:

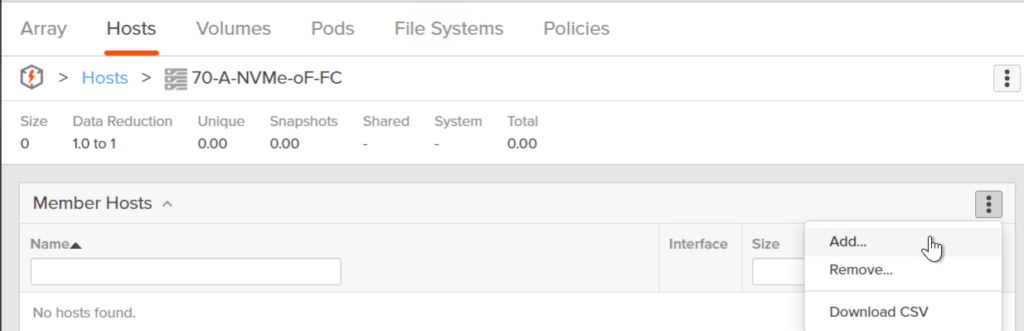

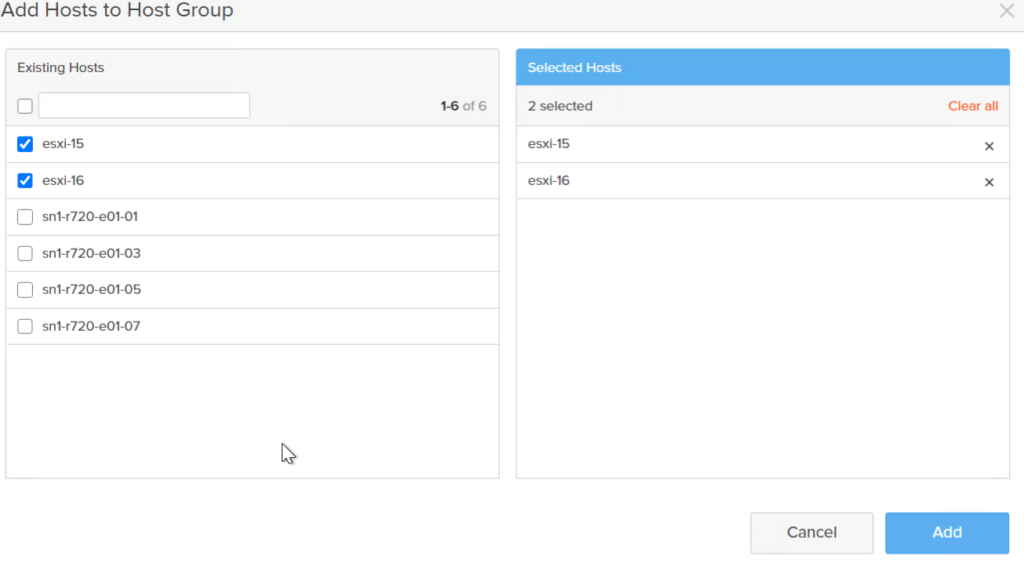

Then add the hosts:

Choose each host in the cluster and click Add.

Now you can provision storage!

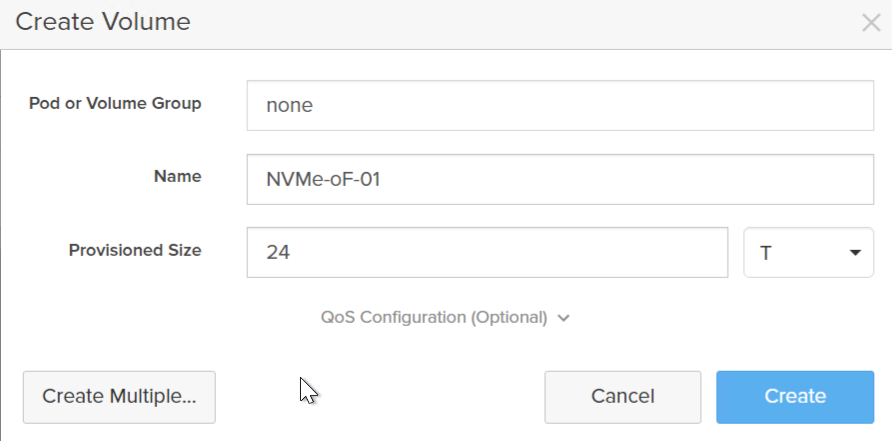

There is no difference on the FlashArray between provisioning with SCSI or NVMe:

- Create a volume

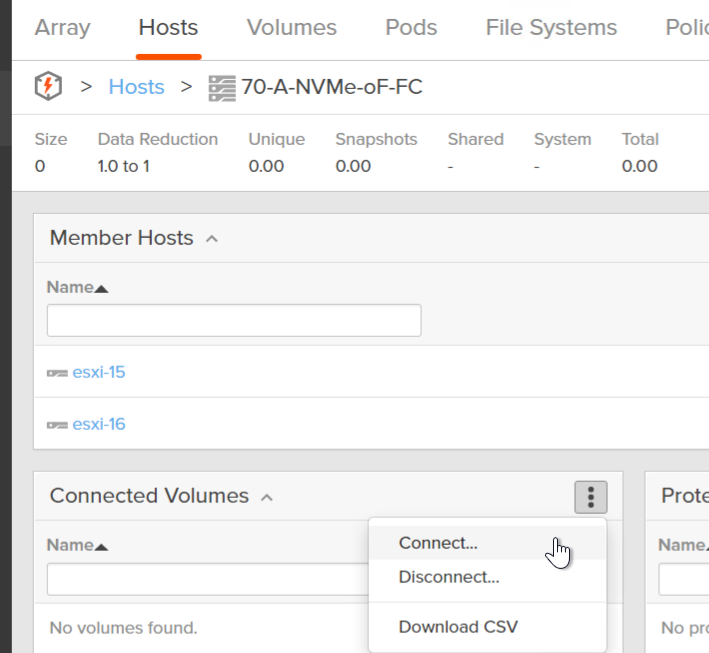

- Connect to the host group

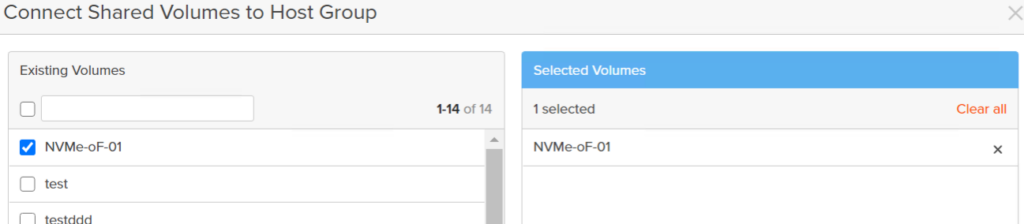

- Then connect:

The volume is now connected.

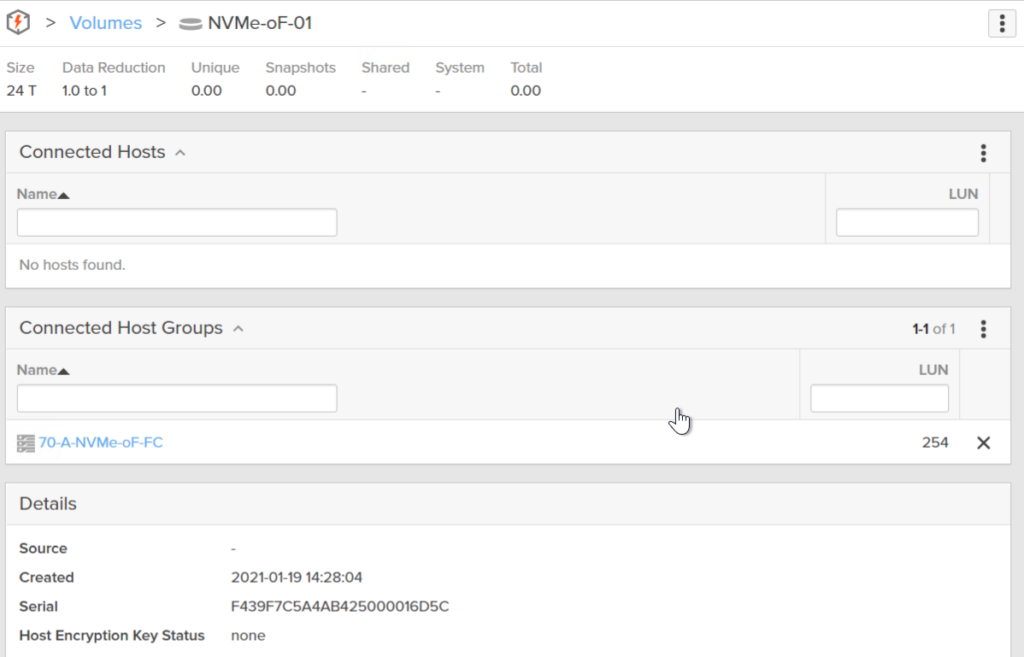

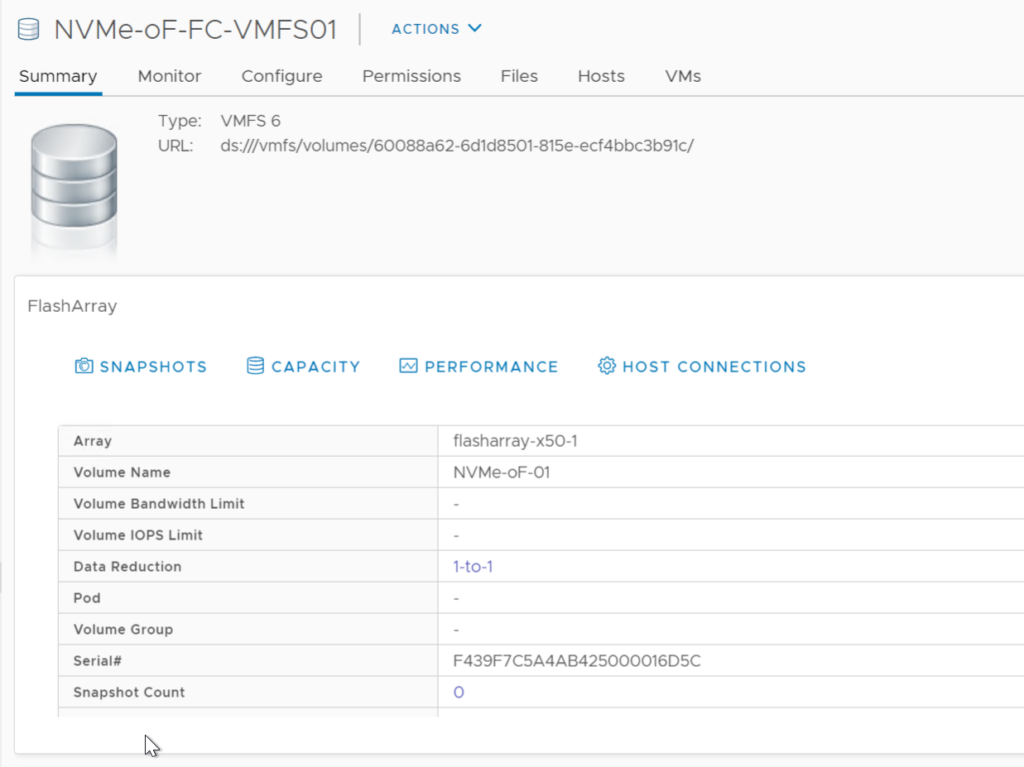

If you look at the volume there is a serial number:

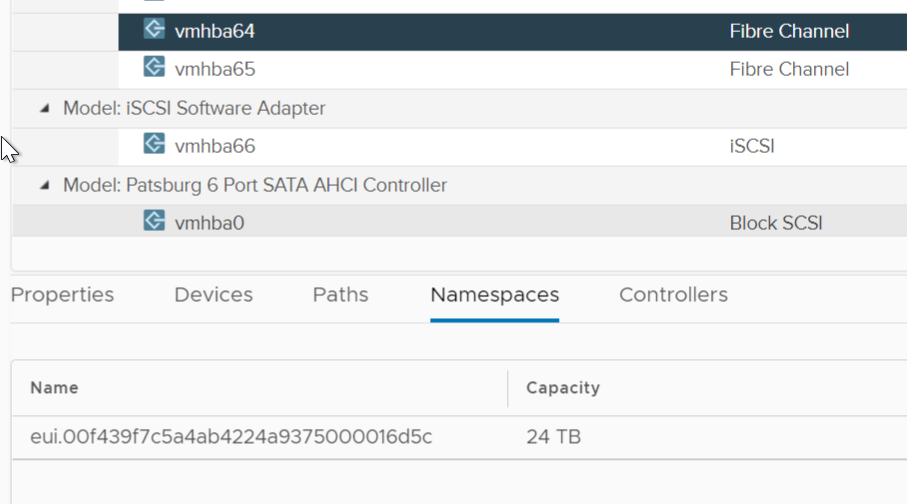

In vSphere you will see that appear as a namespace:

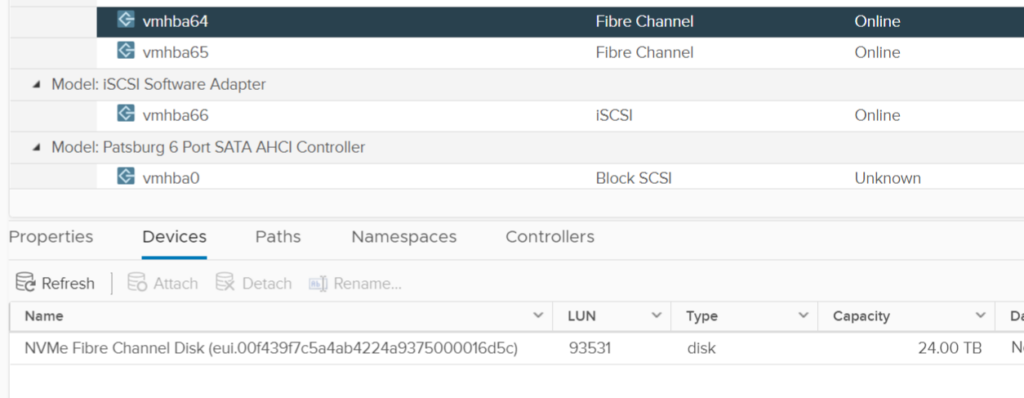

The extended unique identifier (EUI) will mirror the serial number (with leading zeros). It will also appear in the device listing under the adapter:

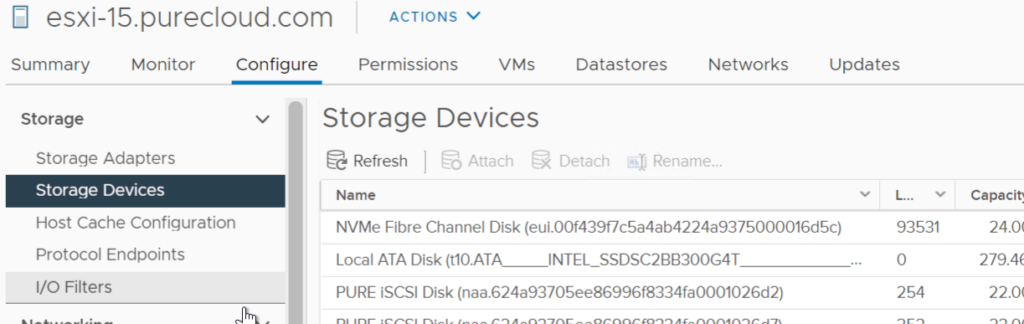

It will also appear under the standard storage device pane as well:

NVMe devices can be distinguished via the name (and the wild LUN ID which I will talk about in another post).
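When scripting against device names, the prefix is a quick way to tell the two protocols apart. This minimal sketch assumes FlashArray devices only: NVMe namespaces show up with an "eui." name, and FlashArray SCSI volumes with an "naa.624a9370" name. Other vendors and device types use other prefixes, and the example IDs in the test are made up.

```python
PURE_SCSI_PREFIX = "naa.624a9370"  # FlashArray SCSI volumes (NAA identifier)
NVME_PREFIX = "eui."               # NVMe namespaces identified by EUI

def device_protocol(device_id: str) -> str:
    """Classify a FlashArray device name as it appears in ESXi."""
    dev = device_id.lower()
    if dev.startswith(NVME_PREFIX):
        return "nvme"
    if dev.startswith(PURE_SCSI_PREFIX):
        return "scsi"
    return "unknown"

print(device_protocol("eui.001234567890abcd24a937ef12345678"))
```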

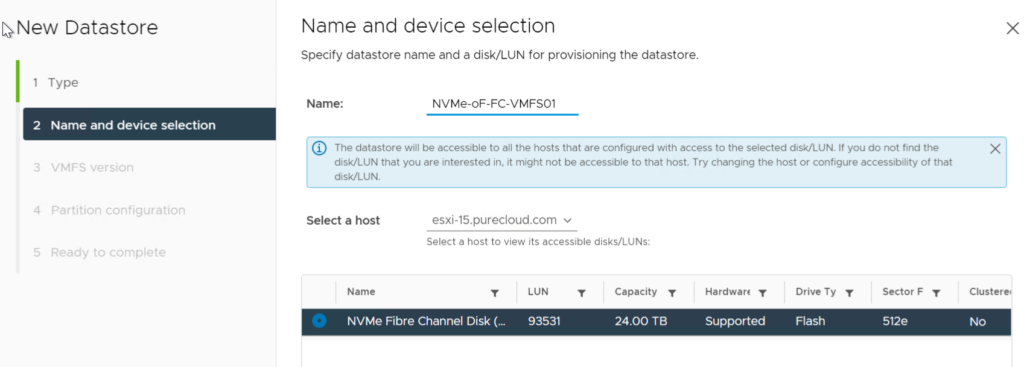

This device can now be used to create VMFS datastores.

Our vSphere Plugin currently cannot provision NVMe-oF datastores, but the summary widget, performance charting, and capacity metrics all work. Snapshot support is partial: creating and listing snapshots works, but restoring from a snapshot does not currently work.

Updating our plugin to fill in NVMe holes is planned.

A few notes:

- RDMs are not currently supported with NVMe. VMFS only.

- XCOPY is currently not supported with NVMe-oF, so the copy work is not offloaded to the array and VM clones will be slower than on SCSI-based VMFS.

- Other limitations and points can be seen here: https://docs.vmware.com/en/VMware-vSphere/7.0/com.vmware.vsphere.storage.doc/GUID-9AEE5F4D-0CB8-4355-BF89-BB61C5F30C70.html
