Let me start this post by saying that the “What’s new in vSphere 6.5 Storage” white paper has been officially published and can be read here:

https://storagehub.vmware.com/#!/vsphere-core-storage/

I had the distinct pleasure of helping Cormac and Paudie with this paper. Thanks to both of them for including me and providing me with access to the engineers who wrote these features/enhancements!

So anyway, read that document for a high-level overview of all of the new features and enhancements. Previously, I have written two posts in this series:

What’s new in ESXi 6.5 Storage Part I: UNMAP

What’s new in ESXi 6.5 Storage Part II: Resignaturing

This is a short post; I mainly wanted to share the white paper. But it is important to note that VMware is still marching forward with improving VMFS and virtual disk flexibility, so I wanted to highlight a new enhancement: thin virtual disk hot extension.

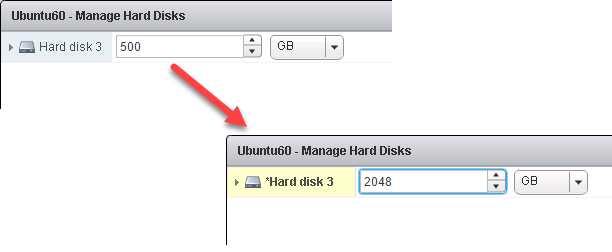

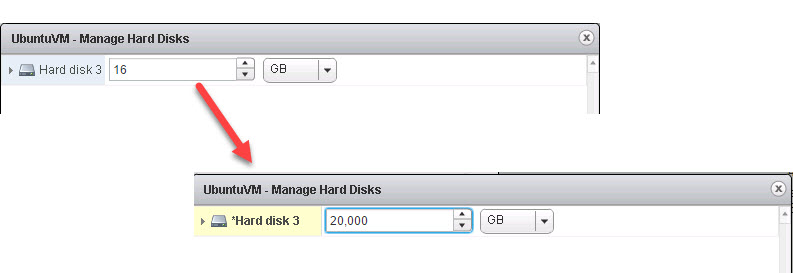

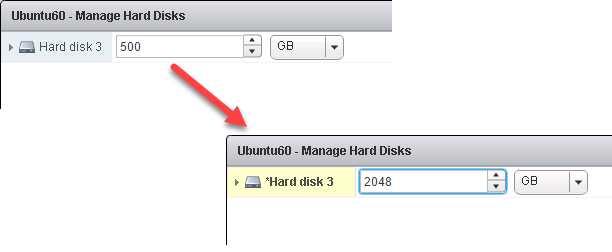

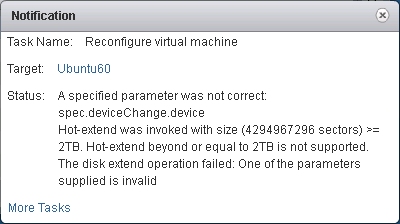

Prior to vSphere 6.5, thin virtual disks could be hot extended, but there were limits. The main one was that if the extend operation brought the VMDK size to larger than 2 TB (or the VMDK was already 2 TB), the operation was not permitted:

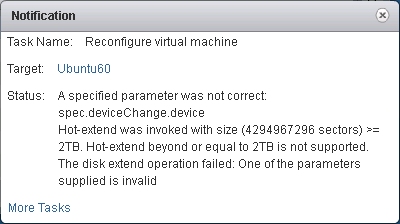

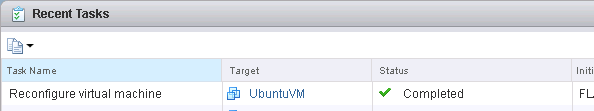

If the VM is turned on and I try to apply this configuration change, I get an error:

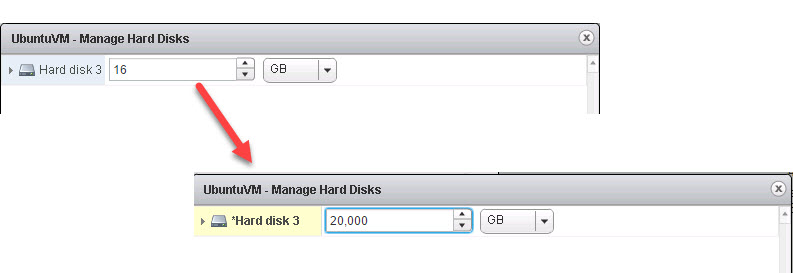

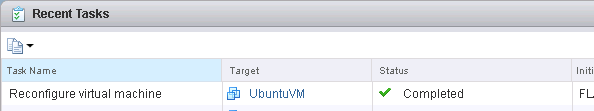

So this is fixed in vSphere 6.5! And the nice thing is that it does not require either VMFS 6 or the latest version of virtual machine hardware. Just hosting the VM on a 6.5 host provides this functionality.
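To make the before-and-after behavior concrete, here is a tiny Python sketch of the rule as I described it above. This is just my own illustrative model, not VMware code: the function name `hot_extend_allowed`, the version tuple, and the assumption that a powered-off extend is not subject to this limit are all mine.

```python
# Illustrative model only; names and structure are hypothetical, not a VMware API.
TB_IN_GB = 1024
HOT_EXTEND_LIMIT_GB = 2 * TB_IN_GB  # the 2 TB boundary described in this post


def hot_extend_allowed(current_gb, new_gb, powered_on, host_version):
    """Return True if extending a thin VMDK from current_gb to new_gb
    would be permitted under the behavior described in this post."""
    if new_gb <= current_gb:
        raise ValueError("an extend must increase the disk size")

    # Assumption: the limit only applies to hot (powered-on) extends.
    if not powered_on:
        return True

    # On a 6.5 host the 2 TB hot-extend restriction is gone,
    # regardless of VMFS version or virtual hardware version.
    if host_version >= (6, 5):
        return True

    # Before 6.5: rejected if the result is larger than 2 TB. A disk that
    # is already 2 TB can only grow past the limit, so it is rejected too.
    return new_gb <= HOT_EXTEND_LIMIT_GB


if __name__ == "__main__":
    print(hot_extend_allowed(1024, 3072, powered_on=True, host_version=(6, 0)))
    print(hot_extend_allowed(1024, 3072, powered_on=True, host_version=(6, 5)))
```

The first call models the failed attempt on an older host, and the second models the same change on a 6.5 host.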

Sweet! But this really just reinforces my thought that there are few remaining reasons not to use thin virtual disks with the latest releases of vSphere. They are so much more flexible, and a lot of engineering is going into making them better. Not much work is being done on thick-type virtual disks. Look for an upcoming blog post on some performance enhancements as well.