A few weeks ago, VMware released the latest version of VMware Cloud Foundation, version 3.9. This release included quite a few changes, but one enhancement was around Fibre Channel:

The release notes mention:

Fibre Channel Storage as Principal Storage: Virtual Infrastructure (VI) workload domains now support Fibre Channel as a principal storage option in addition to VMware vSAN and NFS.

VCF 3.9 Release Notes

So this leads to three questions:

- What does this mean?

- What does this mean for non-FC storage?

- What was the stance around FC storage BEFORE this release?

Let’s answer the first question first.

VCF is deployed and managed through something called SDDC Manager. This is what you use to deploy new environments, manage upgrades, deploy vRealize, and so on. To simplify a bit, SDDC Manager operates on two types of what VMware refers to as “domains”: a management domain and workload domains.

For a given VCF deployment, there is one and only one management domain. This is where the vCenter instances, vRealize, NSX management, and other supporting applications that run your overall infrastructure are deployed. The management domain is deployed entirely onto vSAN, at least initially.

Workload Domains

Once the management domain is deployed, you can deploy one or more workload domains. A workload domain is a new vCenter (plus an NSX edge appliance). Deploying a new VCF workload domain requires that the ESXi hosts be ready (ESXi installed and on the network). VCF then deploys vCenter, adds the hosts to it, and configures NSX for that new vCenter/cluster.
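To make the relationship concrete, here is a small, hypothetical Python model of the rules described above: one and only one management domain, deployed onto vSAN, and workload domains that can only be added once it exists. The class and field names are illustrative assumptions, not part of any VMware API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Domain:
    name: str
    kind: str               # "management" or "workload"
    principal_storage: str  # e.g. "vSAN", "NFS", "VMFS on FC"


@dataclass
class VcfDeployment:
    # Hypothetical model of the rules in the text; not a VMware API
    domains: List[Domain] = field(default_factory=list)

    def has_management_domain(self) -> bool:
        return any(d.kind == "management" for d in self.domains)

    def add_domain(self, domain: Domain) -> None:
        if domain.kind == "management":
            # One and only one management domain, deployed onto vSAN at first
            if self.has_management_domain():
                raise ValueError("a VCF deployment has exactly one management domain")
            if domain.principal_storage != "vSAN":
                raise ValueError("the management domain is deployed onto vSAN")
        elif domain.kind == "workload":
            # Workload domains are deployed after the management domain exists
            if not self.has_management_domain():
                raise ValueError("deploy the management domain first")
        else:
            raise ValueError(f"unknown domain kind: {domain.kind}")
        self.domains.append(domain)


deployment = VcfDeployment()
deployment.add_domain(Domain("mgmt", "management", "vSAN"))
deployment.add_domain(Domain("wd-01", "workload", "NFS"))
deployment.add_domain(Domain("wd-02", "workload", "vSAN"))
```

Each workload domain carries its own principal storage choice, which is where the rest of this post comes in.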

When deploying a new workload domain, you must choose a storage type in SDDC Manager. Prior to VCF 3.9, the only options were vSAN or NFS. So the target ESXi hosts needed to either be vSAN ReadyNodes OR already have at least one NFS datastore mounted.

So if you wanted to use external block storage as your primary option, you needed to have one of these options in place and then add block storage later (more on that in a bit).

So what is new in VCF 3.9, then? Well, there is a new option in SDDC Manager:

This means the requirement to have vSAN or NFS ready before deploying a workload domain is gone. You can choose VMFS on FC and then specify a pre-provisioned FC-based VMFS datastore.

So before deploying the workload domain, you must provision a VMFS datastore and connect it to the hosts via FC. Array configuration, zoning, VMFS creation, and so on must all be done first.
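The change can be summarized as a simple pre-deployment check. This is a hypothetical Python sketch, not SDDC Manager logic: the version tuples, the option names, and the assumption that every host in the domain must already see the pre-provisioned (NFS or FC VMFS) datastore are illustrative.

```python
from typing import Iterable, List, Set, Tuple

Version = Tuple[int, int]


def principal_storage_options(version: Version) -> Set[str]:
    """Principal storage types offered for a new workload domain (illustrative)."""
    options = {"vSAN", "NFS"}
    if version >= (3, 9):
        options.add("VMFS on FC")  # new in VCF 3.9
    return options


def validate_workload_domain(version: Version, storage_type: str,
                             hosts: Iterable[str],
                             hosts_seeing_datastore: Iterable[str]) -> List[str]:
    """Return a list of problems; an empty list means the choice looks valid."""
    if storage_type not in principal_storage_options(version):
        major, minor = version
        return [f"{storage_type} is not a principal storage option in VCF {major}.{minor}"]
    problems = []
    # vSAN readiness (ReadyNodes) is not modeled here.
    if storage_type in ("NFS", "VMFS on FC"):
        # Assumption: the datastore was created beforehand (NFS mount, or
        # array config + zoning + VMFS), so every host should already see it.
        missing = sorted(set(hosts) - set(hosts_seeing_datastore))
        if missing:
            problems.append("datastore not visible on: " + ", ".join(missing))
    return problems
```

For example, `validate_workload_domain((3, 9), "VMFS on FC", ["esxi-01", "esxi-02"], ["esxi-01"])` flags `esxi-02`, while the same call against 3.8 rejects VMFS on FC outright.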

So how do you do that? Well, buyer’s choice, I suppose. Use our GUI, our REST API, or our CLI; depending on your underlying infrastructure, we have other options as well. However, a lot of the standard integrations (vRO, the vSphere Plugin, PowerShell) are built around vCenter, and the workload domain’s vCenter does not exist yet at this point. So if I want to automate this, what do I do?

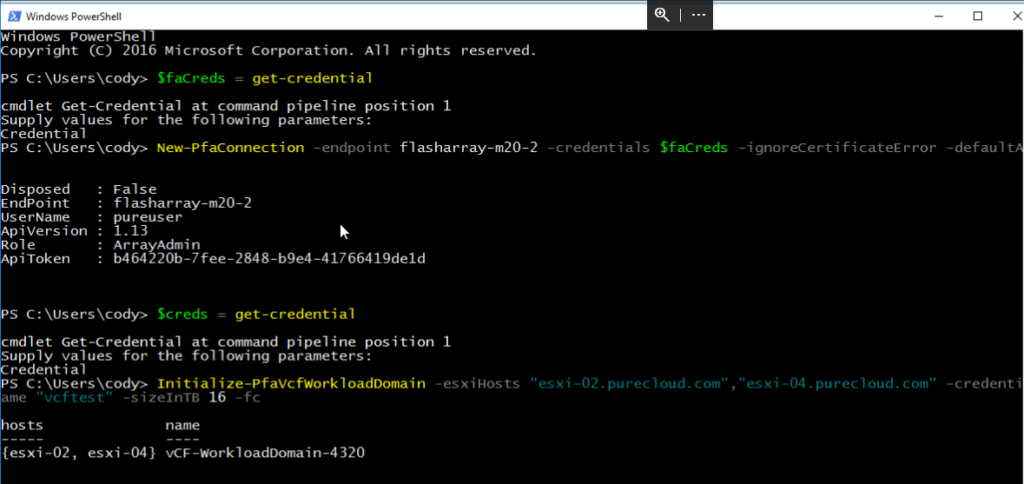

Well, I have added a new PowerShell cmdlet to do this! But doesn’t PowerShell (read: PowerCLI) require vCenter? No, it does not. Our standard PowerCLI module is focused on connecting to vCenter, but it doesn’t have to be: PowerCLI can also connect directly to an ESXi host. The new cmdlet is called Initialize-PfaVcfWorkloadDomain. This cmdlet:

- Takes in a comma-separated list of ESXi FQDNs/IPs, their credentials, a datastore name, a size, and a FlashArray connection

- Connects directly to each ESXi host, gets its FC WWNs, and creates a host object on the FlashArray for each one

- Creates a host group and adds each host

- Creates a new volume of the specified size

- Rescans one ESXi host and formats the new volume with VMFS

- Rescans the remaining hosts

- Disconnects from the hosts

- If any step fails, it cleans up anything it has already done
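The last bullet describes a common do-then-undo pattern. Below is a minimal Python sketch of it, assuming each step has a matching cleanup action; the step names and the in-memory `array` dictionary are hypothetical stand-ins, and this is not how the cmdlet itself is written.

```python
from typing import Callable, List, Tuple

# (step name, action, cleanup): a hypothetical shape, not the cmdlet's internals
Step = Tuple[str, Callable[[], None], Callable[[], None]]


def run_with_rollback(steps: List[Step]) -> Tuple[bool, List[str]]:
    """Run steps in order; on the first failure, undo completed steps in reverse."""
    completed: List[Tuple[str, Callable[[], None]]] = []
    log: List[str] = []
    for name, action, cleanup in steps:
        try:
            action()
        except Exception as exc:
            log.append(f"failed: {name}: {exc}")
            for done_name, done_cleanup in reversed(completed):
                done_cleanup()
                log.append(f"undone: {done_name}")
            return False, log
        completed.append((name, cleanup))
        log.append(f"done: {name}")
    return True, log


# Simulated FlashArray state; host, host group, and volume names are hypothetical
array = {"hosts": set(), "host_groups": set(), "volumes": set()}
esxi_hosts = {"esxi-01", "esxi-02"}


def format_vmfs() -> None:
    raise OSError("simulated VMFS format failure")


ok, log = run_with_rollback([
    ("create host objects",
     lambda: array["hosts"].update(esxi_hosts),
     lambda: array["hosts"].difference_update(esxi_hosts)),
    ("create host group",
     lambda: array["host_groups"].add("vcf-wd-01"),
     lambda: array["host_groups"].discard("vcf-wd-01")),
    ("create volume",
     lambda: array["volumes"].add("vcftest"),
     lambda: array["volumes"].discard("vcftest")),
    ("format VMFS", format_vmfs, lambda: None),
])
```

The failed step's own cleanup is not run, since it never completed; only the finished steps are undone, newest first, which leaves the simulated array empty again.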

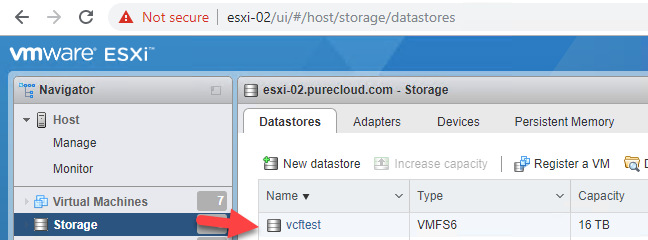

So if you log into one of those ESXi hosts, you will see the datastore.

Then go ahead and deploy the workload domain with that datastore.

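For reference, here is the basic usage:

```powershell
# Connect to the FlashArray and make it the default array for later cmdlets
$faCreds = Get-Credential
New-PfaConnection -endpoint <FlashArray FQDN/IP> -credentials $faCreds -ignoreCertificateError -defaultArray

# Credentials for the ESXi hosts (the cmdlet connects to each host directly)
$creds = Get-Credential
Initialize-PfaVcfWorkloadDomain -esxiHosts <array of FQDNs> -credentials $creds -datastoreName <datastore name> -sizeInTB <datastore size> -fc
```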

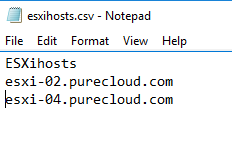

You can also use a CSV if you have a lot of hosts:

Give the CSV a header of ESXiHosts (or, if your header is different, replace ESXiHosts in the script with yours), then list one FQDN/IP per line.

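```powershell
# Build an array of host names from the ESXiHosts column of the CSV
$allHosts = @()
Import-Csv C:\hostList.csv | ForEach-Object {$allHosts += $_.ESXiHosts}

# Reuses the ESXi credentials ($creds) from the previous example
Initialize-PfaVcfWorkloadDomain -esxiHosts $allHosts -credentials $creds -datastoreName "vcftest" -sizeInTB 16 -fc
```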
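For clarity on the expected file layout, here is the same CSV handling sketched in Python: a header row of `ESXiHosts` followed by one FQDN/IP per line. The host names are hypothetical, and this is an illustration only; the cmdlet itself takes the PowerShell array built above.

```python
import csv
import io
from typing import List

# Hypothetical file contents: header row, then one FQDN/IP per line
SAMPLE_CSV = """ESXiHosts
esxi-01.example.com
esxi-02.example.com
192.0.2.13
"""


def read_host_list(text: str, column: str = "ESXiHosts") -> List[str]:
    """Return the values of `column`, mirroring the Import-Csv loop above."""
    reader = csv.DictReader(io.StringIO(text))
    if reader.fieldnames is None or column not in reader.fieldnames:
        raise ValueError(f"CSV must have a '{column}' header row")
    # DictReader already skips blank lines; also ignore empty cells
    return [row[column].strip() for row in reader if (row[column] or "").strip()]


hosts = read_host_list(SAMPLE_CSV)
```

A file without the expected header raises an error here, which is the same mismatch the header note above warns about.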

So feel free to use it! This is included in module version 1.2.5.0 and later.

So why just FC? Why just VMFS? Well, first things first. FC requires the least host-side configuration (zoning is done at the switch layer), whereas iSCSI requires network configuration on the host. So the ideal scenario for iSCSI, for instance, is to include that setup in the NSX automation, which requires a bit more work in SDDC Manager. Furthermore, I believe FC was requested more than iSCSI, though that is mostly anecdotal on my part.

Why not vVols? Well, vVols is vastly superior to VMFS when it comes to automation and integration, so it seems like the right route. And it is: the advantage of vVols is that it reduces the need for you to pre-provision storage. But it does require a bit more work to integrate all of those workflows. So while vVols is better in the long run, VMFS on FC was the lower-hanging fruit here. This is the first step. I can’t get into roadmap here, but I expect this is not the last thing you will see when it comes to external block storage with VCF/SDDC Manager.

Non-FC-VMFS Storage

So what about iSCSI? What about vVols? Can I use them with VCF? YES, you can. Deploy a workload domain with one of the existing options (vSAN, NFS, FC-VMFS); once it is deployed, you can configure iSCSI and provision VMFS on it, or provision vVols (over FC or iSCSI).

So if you want iSCSI, probably the best option is to provision a basic NFS datastore (served from a Linux or Windows VM, or whatever you have handy), then configure iSCSI afterwards and provision vVols or VMFS (or both).

If you want vVols on FC, the simplest option is to provision VMFS over FC, then add vVols after deployment.

If your hosts are vSAN capable, then that is probably the right route (because why not, at that point).
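The guidance above boils down to a small decision procedure. The following hypothetical Python helper encodes it, assuming the goal is one of four storage targets and that VMFS on FC is only available as primary storage in VCF 3.9 and later; the target names and step descriptions are illustrative.

```python
from typing import Dict, List, Tuple

# Hypothetical follow-up work once the workload domain exists (supplemental storage)
SUPPLEMENTAL_STEPS: Dict[str, List[str]] = {
    "VMFS on FC": ["provision VMFS over FC"],
    "vVols on FC": ["add vVols over FC"],
    "VMFS on iSCSI": ["configure iSCSI on the hosts", "provision VMFS over iSCSI"],
    "vVols on iSCSI": ["configure iSCSI on the hosts", "add vVols over iSCSI"],
}


def plan_workload_domain(target: str, vsan_capable: bool,
                         version: Tuple[int, int] = (3, 9)) -> Tuple[str, List[str]]:
    """Return (primary storage to pick in SDDC Manager, steps after deployment)."""
    if target not in SUPPLEMENTAL_STEPS:
        raise ValueError(f"unknown target: {target}")
    steps = list(SUPPLEMENTAL_STEPS[target])
    if vsan_capable:
        # vSAN-capable hosts: use vSAN as primary, add the target afterwards
        return "vSAN", steps
    fc_primary = version >= (3, 9)
    if target == "VMFS on FC" and fc_primary:
        return "VMFS on FC", []  # the target itself is the primary storage
    if target == "vVols on FC" and fc_primary:
        return "VMFS on FC", steps  # VMFS over FC first, then vVols
    # iSCSI targets (or FC before 3.9): start from a basic NFS datastore
    return "NFS", steps
```

For example, `plan_workload_domain("vVols on FC", vsan_capable=False)` returns `("VMFS on FC", ["add vVols over FC"])`.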

What about BEFORE 3.9?

This has been a point of confusion. VCF has two terms for storage: primary and supplemental. The distinction basically comes down to whether SDDC Manager is aware of the storage. SDDC Manager is aware of whatever you choose when deploying a workload domain: vSAN, NFS, or FC-VMFS. So in VCF 3.9, those are the three options for primary storage; before that, only vSAN or NFS. Anything else, meaning anything deployed AFTER the workload domain, is seen as supplemental storage. This does not define its performance or availability, just how SDDC Manager sees it (or in fact doesn’t see it).

How is primary storage used? Well, really only for detecting the initial storage during deployment and then for placing the NSX edge appliance. Anything else can go on supplemental storage (you can technically even Storage vMotion the edge appliance post-deployment if you want).
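Put differently, primary versus supplemental is a property of when the storage was added, not of what it is. A hypothetical Python sketch of that view follows; the `Datastore` fields and datastore names are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Datastore:
    name: str
    storage_type: str
    chosen_at_domain_deployment: bool  # selected in SDDC Manager when the domain was created


def sddc_manager_view(ds: Datastore) -> str:
    # Only the storage chosen at deployment is known to SDDC Manager ("primary");
    # anything added afterwards is "supplemental". This says nothing about
    # performance or availability.
    return "primary" if ds.chosen_at_domain_deployment else "supplemental"


def initial_edge_placement(datastores: List[Datastore]) -> str:
    """Name of the datastore the NSX edge appliance lands on at deployment."""
    primaries = [d.name for d in datastores if sddc_manager_view(d) == "primary"]
    if not primaries:
        raise ValueError("a workload domain is always deployed with primary storage")
    return primaries[0]


datastores = [
    Datastore("wd01-vmfs-fc", "VMFS on FC", True),
    Datastore("wd01-vvols", "vVols", False),
    Datastore("wd01-iscsi-vmfs", "VMFS on iSCSI", False),
]
```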

So any storage certified for your version of ESXi is supported as supplemental storage. This has been true with VCF for a while. Once VCF deploys the workload domain, you can provision storage as normal and use your standard integration with that storage as you “normally” would.

You can see this in the VCF FAQ (and other places):

Q. Can I use Fibre Channel (FC) SAN with Cloud Foundation?

A. Yes. You can add the FC datastores as supplemental storage to the Virtual Infrastructure (VI) workload domains. The FC storage must be managed independently from the workload domain vCenter instance. Check with your storage vendor for a list of support storage arrays. With VCF Release

VCF FAQ

Q. Can I use Network Attached Storage (NAS) with Cloud Foundation?

A. Yes, you can create VI workload domains with external NFS storage. iSCSI storage can be connected manually as supplemental storage to a workload domain.

VCF FAQ

Stay tuned! We are working closely with VMware, and VCF is a very important solution for us at Pure, so this will be a particular focus for us in 2020 and beyond.


Thanks for the info, Cody. Does it mean that all the hosts that are part of the workload domain in VCF need to have an FC HBA as well? And that they should be connected to a pair of FC switches, which in turn connect to the storage processors on an FC array? And if I understand correctly, “VMFS on FC” can only be selected independently of vSAN. Or can they work together at a later point? I’m just trying to get a better understanding, since this feature wasn’t available until now.

Yes. If you choose FC-VMFS as your primary storage for a workload domain, all hosts should have an FC HBA. It is certainly a best practice to do so as well. As far as switching goes, that is not strictly required: you could direct-connect, but that limits you to however many hosts can connect directly to your array. They can certainly work together as well. If you have vSAN, I would probably choose that as the primary storage in SDDC Manager (so it can configure it for you) and then add FC as supplemental, but the opposite is a valid choice too. All options are available as supplemental storage at this point.