With the introduction of our Active-Active Synchronous Replication (called ActiveCluster), I have been getting more and more questions about multiple-subnet iSCSI access. Some customers have their two arrays in different datacenters, and also on different subnets (no stretched layer 2).

With ActiveCluster, a volume exists on both arrays, so essentially the iSCSI targets on the 2nd array just look like additional paths to that volume. As far as the host knows, it is not two arrays; it just has more paths.

Consequently, this discussion is the same whether you have a single array using more than one subnet for its iSCSI targets or you are using active-active across two arrays, though there are some different considerations which I will talk about later.

First off, should you use more than one subnet? Well, keeping things simple is good, and for a single FlashArray I would probably do that. Chris Wahl wrote a great post on this a while back that explains the ins and outs of this well:

http://wahlnetwork.com/2015/03/09/when-to-use-multiple-subnet-iscsi-network-design/

But in an active-active situation, where arrays are in different datacenters, you might not have the ability to stretch your layer 2. So let’s run on that assumption and walk through what it looks like.

Software iSCSI Port Binding

Let me define first what “iSCSI port binding” is. When you use the Software iSCSI adapter in ESXi, the default option is that it is not tied to any particular vmkernel port (and therefore physical NIC).

In this situation, when iSCSI sessions need to be created to a target array, the software iSCSI adapter will use the ESXi IP routing table to figure out what vmkernel port to use.

Oh the iSCSI target is 10.21.88.152?

Use vmk3 to create the iSCSI sessions.

The problem here is that it only uses the first vmkernel port it finds, so it will not multipath internally to the host. Yes, there are multiple paths to the array, but that is just based on the number of targets on the array. You are limited to the bandwidth of a single NIC on the ESXi host, so you likely cannot take advantage of the multiple iSCSI target concurrency the array offers.
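To make the routing-table behavior a bit more concrete, here is a minimal Python sketch of how a route lookup settles on one vmkernel port for a given target. The route entries, the `pick_vmk` helper, and the 10.21.202.10 address are illustrative assumptions and not output from my host or any ESXi API; only the 10.21.88.152 target comes from the example above.

```python
import ipaddress

# Hypothetical routing table: (destination network, vmkernel port).
# These entries are made up for illustration only.
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "vmk0"),      # default route
    (ipaddress.ip_network("10.21.88.0/24"), "vmk3"),  # directly connected subnet
]

def pick_vmk(target_ip, routes=ROUTES):
    """Return the vmkernel port of the most specific route matching target_ip."""
    addr = ipaddress.ip_address(target_ip)
    matches = [(net, vmk) for net, vmk in routes if addr in net]
    if not matches:
        raise LookupError(f"no route to {target_ip}")
    # Longest prefix wins, as in ordinary IP routing.
    _, vmk = max(matches, key=lambda m: m[0].prefixlen)
    return vmk

print(pick_vmk("10.21.88.152"))  # vmk3
print(pick_vmk("10.21.202.10"))  # vmk0 (falls through to the default route)
```

Either way, each target address resolves to exactly one vmkernel port, which is why the host side does not multipath in this mode.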

Port Binding, however, allows you to associate one or more vmkernel adapters to the Software iSCSI adapter directly. This then tells ESXi to create iSCSI sessions on all of the associated vmkernel adapters, and therefore physical NICs. This allows you to use more than one physical NIC at a time.

I wrote about setting port binding up here:

- Setting up iSCSI Port Binding with Standard vSwitches in the vSphere Web Client

- Setting up Software iSCSI Multipathing with Distributed vSwitches with the vSphere Web Client

See a blog post I wrote about the differences in detail here:

Another look at ESXi iSCSI Multipathing (or a Lack Thereof)

So how does this change with multiple subnet-homed arrays (or sets of arrays replicating in an active-active fashion)?

Let’s run through some scenarios, but first let’s look at my environment.

My Environment

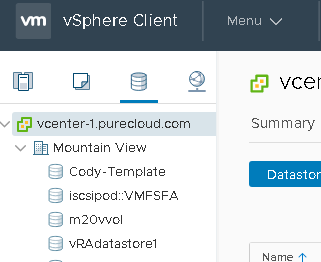

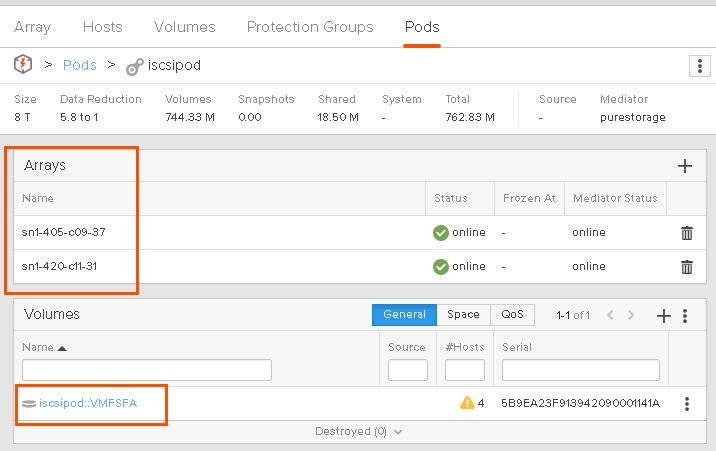

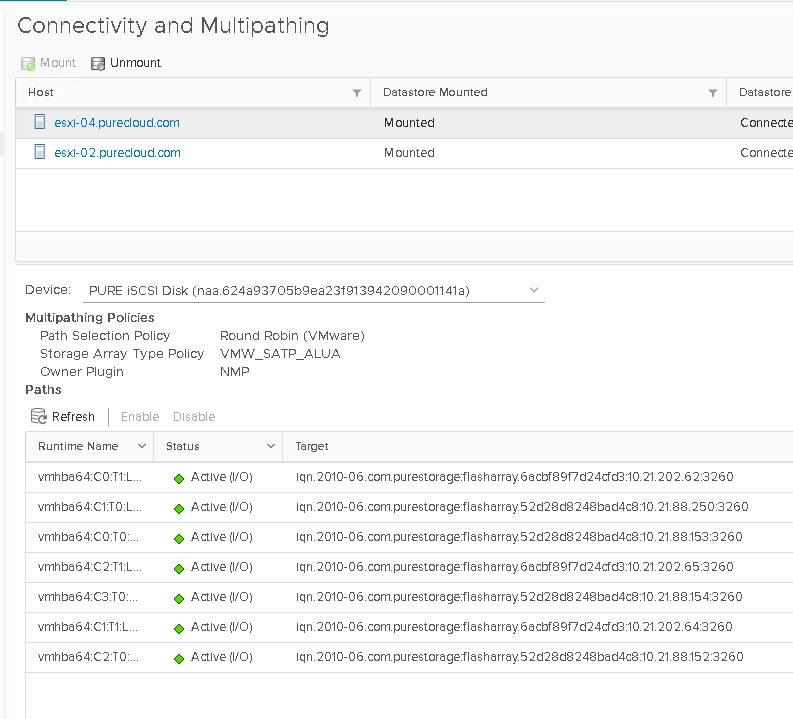

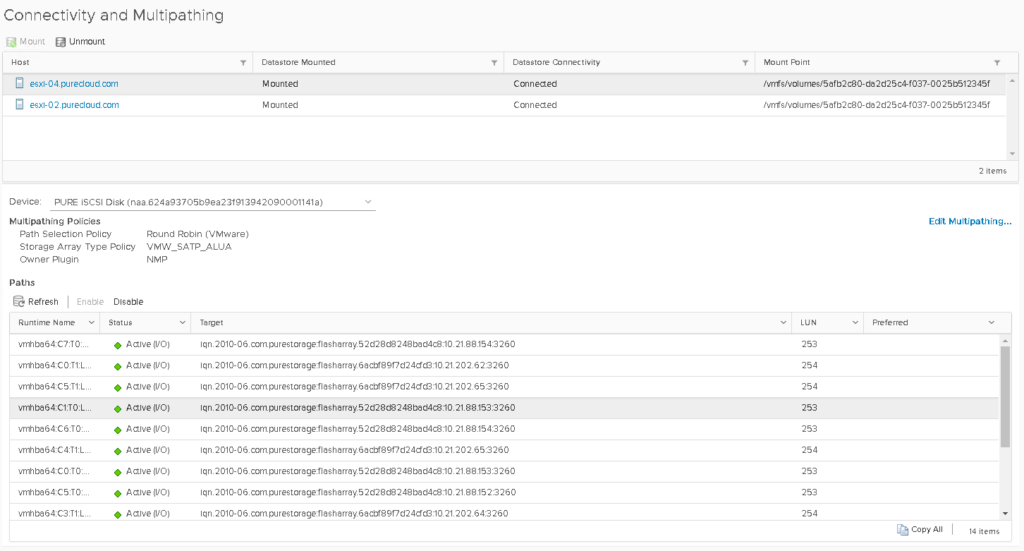

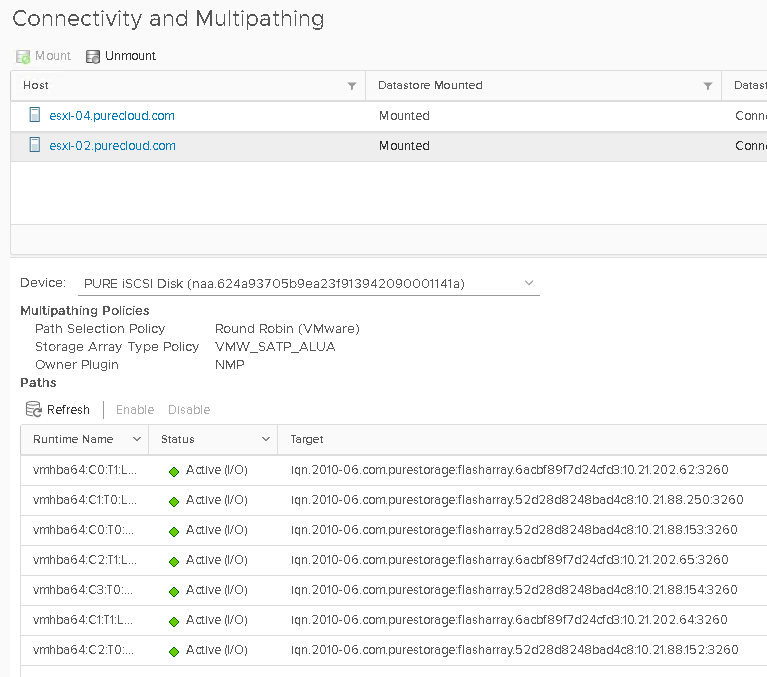

I have a VMFS datastore (called iscsipod::VMFSFA) that is on a FlashArray volume replicated with ActiveCluster so it is available on both of my arrays simultaneously.

As you can see it is hosted by two arrays:

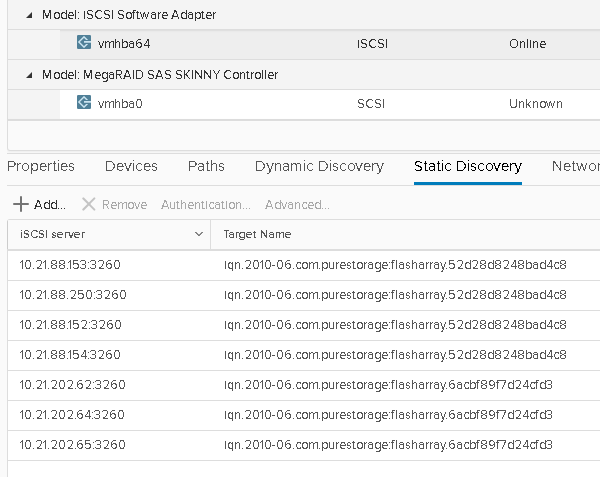

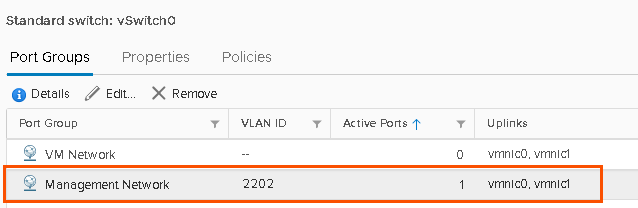

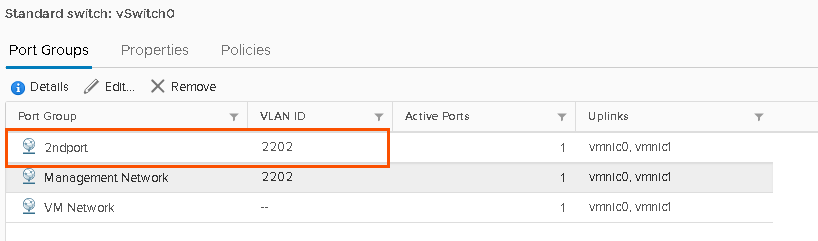

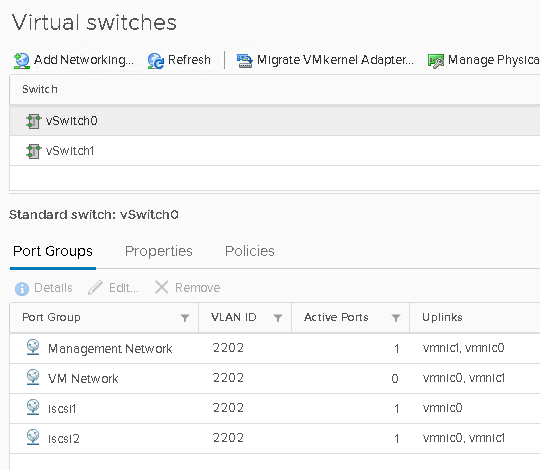

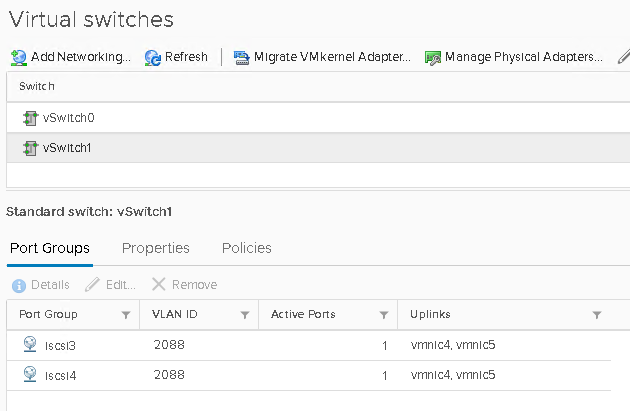

My first array is on VLAN 2202 (10.21.202.x) and my second array is on VLAN 2088 (10.21.88.x).

I have 4 iSCSI targets on one array and 3 on the other array for a total of 7 targets.

Note that some of those iSCSI targets are on my first array (10.21.202.x) and some of the targets are on my second array (10.21.88.x).

Now let’s walk through some scenarios.

The first situation is that my source host is only on one subnet and my target arrays are each using a different one (in the case of ActiveCluster) or I have a single array that is using two subnets by itself.

If the different subnets can route to each other, then the next two sections are relevant. The host can talk to both arrays through routing.

If the subnets are closed off and cannot route to one another, then this is a different story and your host won’t be able to communicate at all with the array/ports in a different subnet. In this case, you can pretty much just follow what is laid out in this post here:

Another look at ESXi iSCSI Multipathing (or a Lack Thereof)

No Port Binding, Single Subnet Host

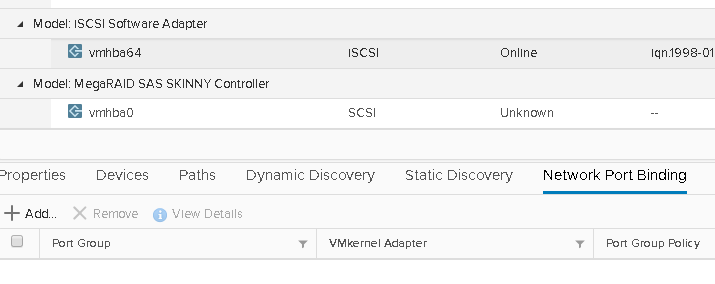

In this situation, I have no vmkernel adapters bound to my iSCSI adapter:

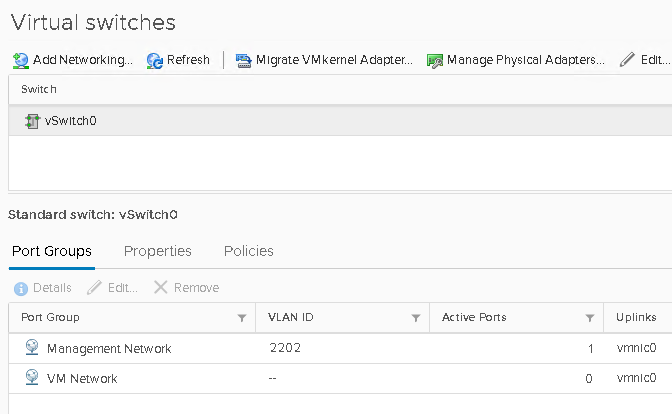

Furthermore, my host only has one vmkernel port, which is on the subnet of my first array (VLAN 2202).

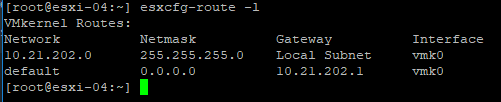

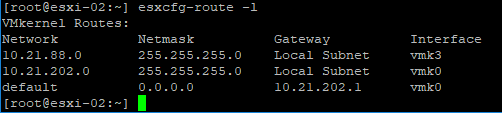

If I look at the IP routes on that host, we see that, yes, it only has a route through vmk0:

This means all traffic will go through vmk0.

vmk0 is configured to use two different physical NICs in my vSwitch:

Which are both active:

What if I kick off a workload?

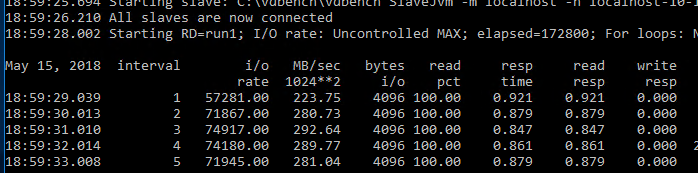

I will start one off with vdbench:

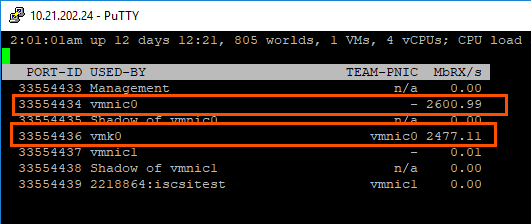

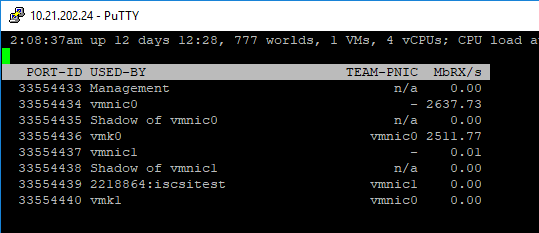

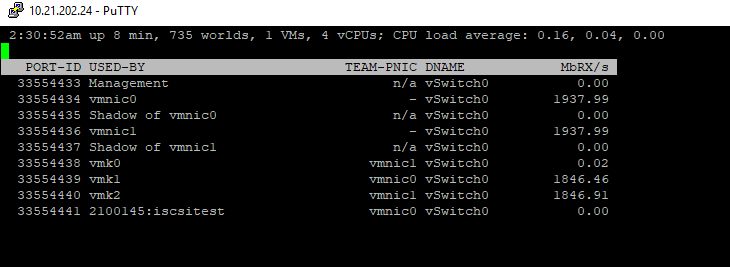

If we look at esxtop and the network statistics (ssh into ESXi, type esxtop, then type n), we see that only one physical NIC is being used:

vmk0 (vmkernel port) is being used, as expected, and so is vmnic0 (physical NIC). But vmnic1 is not being used, even though it is assigned to vmk0.

This is because ESXi only creates one session per vmkernel port and it uses the first available NIC, in this case, vmnic0.

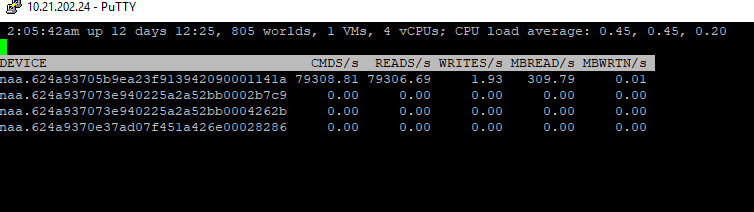

It is reporting us pushing about 2500 megabits per second, which is 312 megabytes per second. This can be proved out in esxtop by looking at the datastore:

What if I add a second vmkernel port?

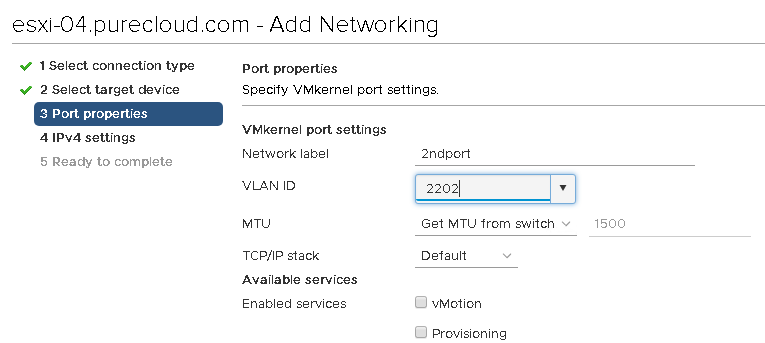

Added:

Nothing changes, as it still uses just the first vmkernel port, vmk0.

Throughout all of this, the host always has 7 paths to the array for that datastore. All 7 go through that vmkernel port and that one NIC to the various iSCSI targets on my arrays.

Port Binding, Single Subnet Host

Now let’s do some port binding.

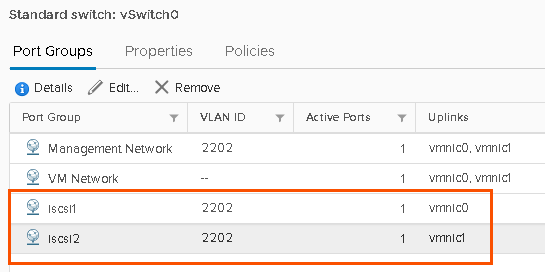

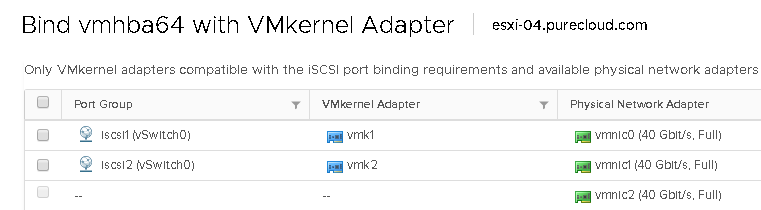

In this case I have created two vmkernel adapters, and as required by port binding, each vmkernel port only has one associated physical NIC (vmnic).

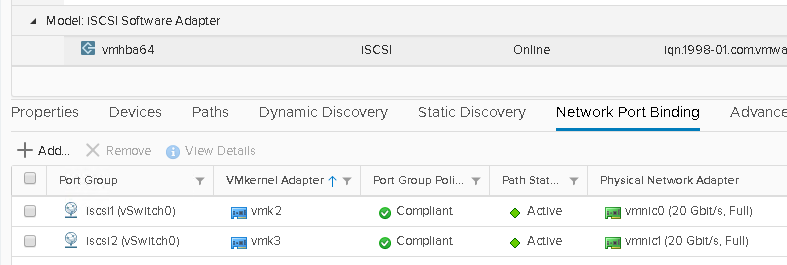

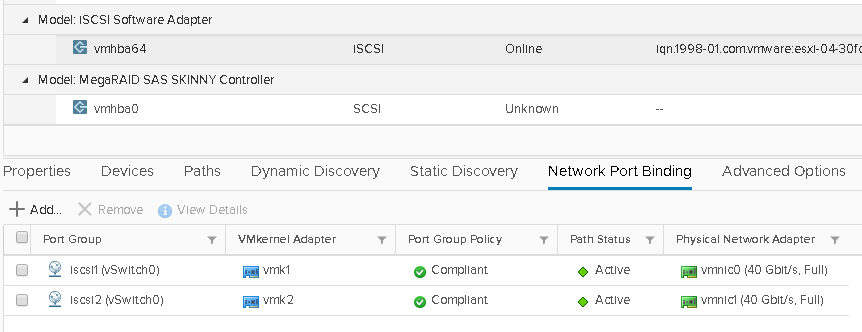

Then I bind them to the Software iSCSI adapter:

They are now bound:

So what I have done is told ESXi, “okay create iSCSI sessions through each one of these vmkernel adapters”.

One thing you will immediately note is that we now have 14 paths to my datastore:

So my path count has doubled.

This is due to the fact that the iSCSI adapter is now using both vmk0 and vmk1 and both of their respective NICs, so each vmkernel port has a path to each FlashArray iSCSI target.
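The doubling is just multiplication, which a few lines of Python can show. This is a rough sketch that assumes every bound vmkernel port can reach every target; `path_count` is a name I made up for illustration.

```python
def path_count(vmk_ports, targets):
    """Each vmkernel port in use logs in to each reachable iSCSI target."""
    return vmk_ports * targets

TARGETS = 4 + 3  # four targets on one array, three on the other

print(path_count(1, TARGETS))  # 7: no port binding, a single vmkernel port in use
print(path_count(2, TARGETS))  # 14: vmk0 and vmk1 both bound
```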

Let’s kick off the workload again.

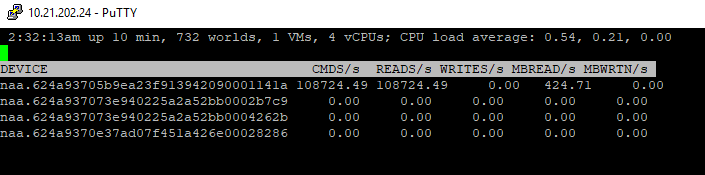

In esxtop, we can see that it is now using both vmkernel adapters and both of their physical NICs:

Furthermore, since vdbench has more resources to play with (two physical NICs) it can push more throughput:

310 MB/s to 424 MB/s.
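For anyone who wants to check the arithmetic behind the figures in these two runs, here is a small Python sketch. The helper names are hypothetical; the inputs are the numbers reported above.

```python
def mbit_to_mbyte(mbit_per_s):
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbit_per_s / 8

def percent_gain(before, after):
    """Relative improvement of `after` over `before`, in percent."""
    return (after - before) / before * 100

print(mbit_to_mbyte(2500))               # 312.5
print(round(percent_gain(310, 424), 1))  # 36.8
```

So the second NIC bought roughly a third more throughput for this particular workload, not a clean doubling.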

So the benefits of port binding can be obvious.

But are there downsides?

Yes. Maybe. Depends.

First off, you can double/triple/etc. your path count, depending on how many vmkernel adapters you add. ESXi only allows 8 paths per iSCSI datastore (this limit still exists even with vSphere 6.7), so you could potentially reach that limit (they support 32 for FC, so if you want more for iSCSI please tell VMware). Once you hit that limit, the specific paths that are dropped cannot really be predicted. So make sure you stay at 8 or lower. This is of course IF your subnets can route to one another. If they can’t, then you will still only have the paths local to your host subnet.
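One way to plan around that limit is to work backwards from the number of targets each bound port can reach. The sketch below is only illustrative: `max_bound_vmks` is a hypothetical helper, and it assumes every bound vmkernel port reaches the same number of targets.

```python
ISCSI_PATH_LIMIT = 8  # the per-datastore figure quoted above

def max_bound_vmks(reachable_targets, limit=ISCSI_PATH_LIMIT):
    """Largest number of bound vmkernel ports that keeps the path count <= limit."""
    if reachable_targets <= 0:
        raise ValueError("need at least one reachable target")
    return limit // reachable_targets

print(max_bound_vmks(7))  # 1
print(max_bound_vmks(4))  # 2
print(max_bound_vmks(3))  # 2
```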

Also, if you are running a version of ESXi prior to 6.5, routing is not supported with port binding. In other words, I would not be able to use the paths to my 2nd array, which is in a different subnet.

If I were using ESXi 6.0 or earlier, in order to use port binding to two different arrays for the same volume, I would have to stretch my layer 2 so that both arrays are on the same subnet.
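Here is a simplified Python model of that restriction. It is a sketch under stated assumptions: the `usable_targets` helper, the vmkernel address, and all target addresses except 10.21.88.152 are hypothetical, and the `routing_supported=True` branch assumes the subnets really can route to one another.

```python
import ipaddress

def usable_targets(vmk_cidr, target_ips, routing_supported):
    """Targets a bound vmkernel port can log in to.

    routing_supported=False models the pre-6.5 behavior described above:
    a bound port only reaches targets on its own subnet.
    """
    net = ipaddress.ip_interface(vmk_cidr).network
    if routing_supported:
        return list(target_ips)  # assumes the subnets can route to each other
    return [ip for ip in target_ips if ipaddress.ip_address(ip) in net]

# Hypothetical addresses, apart from 10.21.88.152.
targets = ["10.21.202.11", "10.21.202.12", "10.21.88.152"]

print(usable_targets("10.21.202.50/24", targets, routing_supported=False))
# ['10.21.202.11', '10.21.202.12']
print(usable_targets("10.21.202.50/24", targets, routing_supported=True))
# ['10.21.202.11', '10.21.202.12', '10.21.88.152']
```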

Multi-Subnet Homed Host

What if my host does have access directly to both subnets? Meaning that it has vmkernel ports on both subnets?

In this case, my host has vmkernel ports on both VLAN 2202 and VLAN 2088:

Two vmkernel ports on 2202 on vSwitch0:

And two vmkernel ports on 2088 on vSwitch1:

So I have direct access to both subnets from this host. In the case where subnets are completely segregated, this is what you would want to do. This gives you tighter control over where the traffic goes.

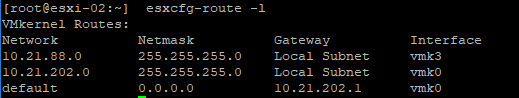

Let’s remind ourselves what the IP routing table of my single-subnet host looked like:

No specified route to 2088 (10.21.88.x). So everything goes through vmk0 (if the network allows it).

But on this host, which has vmkernel ports on both subnets, the routing table looks like so:

We now have a route for the 2088 VLAN. This changes things a bit. Especially when not using port binding. So let’s look at that first.

No Port Binding, Multi-Subnet Host

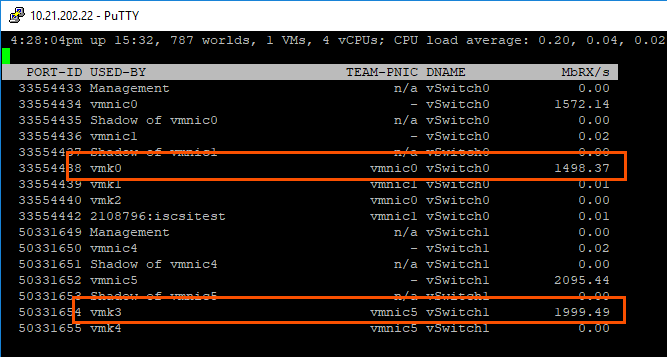

If you remember, when I didn’t use port binding, I had 7 paths (7 targets). My one vmkernel adapter (and its corresponding physical NIC) had paths to all targets. What about now?

We still have 7 paths! The difference is that these paths are going through two different vmkernel adapters. The paths on VLAN 2202 are using vmk0 and the paths on VLAN 2088 are using vmk3. So my logical paths are now spread across the two physical NICs instead of just the one, providing more bandwidth without increasing the logical path count. This is especially useful in ESXi versions prior to 6.5, where iSCSI port binding required all vmkernel adapters to be able to connect to all iSCSI targets.
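The way those 7 paths split across the two vmkernel adapters can be sketched in Python. Treat this as an illustration only: the /24 masks, the target host addresses (other than 10.21.88.152), and which array holds 4 targets versus 3 are all assumptions, and `paths_by_vmk` is a hypothetical helper.

```python
import ipaddress
from collections import defaultdict

# vmkernel ports and the subnets they sit on (prefix lengths are assumed).
VMK_SUBNETS = {
    "vmk0": ipaddress.ip_network("10.21.202.0/24"),  # VLAN 2202
    "vmk3": ipaddress.ip_network("10.21.88.0/24"),   # VLAN 2088
}

# Seven targets; addresses are hypothetical apart from 10.21.88.152.
TARGETS = [
    "10.21.202.11", "10.21.202.12", "10.21.202.13", "10.21.202.14",
    "10.21.88.152", "10.21.88.153", "10.21.88.154",
]

def paths_by_vmk(targets, vmk_subnets):
    """Group targets by the vmkernel port that sits on the same subnet."""
    grouped = defaultdict(list)
    for ip in targets:
        addr = ipaddress.ip_address(ip)
        for vmk, net in vmk_subnets.items():
            if addr in net:
                grouped[vmk].append(ip)
                break
    return dict(grouped)

for vmk, ips in paths_by_vmk(TARGETS, VMK_SUBNETS).items():
    print(vmk, len(ips))
# vmk0 4
# vmk3 3
```

The total is still 7, but no single vmkernel port carries all of it.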

Let’s kick off a workload and prove this out.

I/O is going through both vmk0 and vmk3.

Port Binding, Multi-Subnet Host

I am not really going to spend any time on this, because it is exactly what you would expect. Each bound port has paths to the targets it can reach.

Thoughts

What should you do? Well in general, Chris Wahl says it best here:

http://wahlnetwork.com/2015/03/09/when-to-use-multiple-subnet-iscsi-network-design/

If your design/infrastructure enforces multiple subnets, forgoing port binding is probably the way to go. But if you can get away with one subnet, go for it and port bind. If you do use multiple subnets, you definitely want to make sure that your host has vmkernel ports on both (or all) of the subnets so you can get the concurrency of all of the NICs, especially if the different subnets cannot route to one another.

Hey Cody,

I run a smaller shop (around ~30 hosts and ~1,000 VMs) and it’s hard to imagine a situation where I’d want my SANs on two different subnets. I guess the only thing I could imagine is a local DR situation. As in, maybe I have two rooms in a building that have redundant equipment in each, although to me that still doesn’t seem like a good idea.

I can’t see myself ever wanting to stretch a sync commit cluster across a WAN though, and if I was, there’s no way I would do a stretched layer 2. I think Ivan Pepelnjak has spoken about that subject enough to convince most folks. Thus the multi subnet seems like the only decent option. That said, I have to imagine the latency hit you would take not only for writes (100% of the time) but for the reads during a failure situation would almost make it a non-starter for anyone that cares about performance.

100% agree, I am not a fan of this option unless you absolutely need it for environmental reasons. I didn’t even tweet this post out because I don’t want to encourage it 🙂

What would be your recommendation if you have single teaming dual port NIC only ESXi servers and a single quad port NIC storage server, all dangling off a single switch?

I have ESXi 6.5 servers that were doing okay with port binding using 4 vmk, and multitarget LUN’s (4 target x 4 IP per target which are in the same subnet with each IP on a different NIC)(lots of VLAN), which had balanced writes and mostly achieved balanced reads (link aggregation load balancing algorithm luck). But after the 6.7 upgrade, heavy writes are still balanced but heavy reads seem to completely tilt to a single storage server NIC port even though ESXTOP says the 4 vmk’s are reading in a balanced manner.

Too many paths? Target IP’s need to be in different subnets? Port binding only really works correctly when the vmk count matches the physical pNIC count (so too many vmk’s)? Something else changed in the behavior of the software iSCSI initiator?

That’s really strange that there would be a difference between read balance and write balance across ports. ESXi doesn’t really discriminate between the two for the purposes of multipathing. I do generally recommend port binding. It might make sense to use different subnets, but that will mostly just reduce the logical path count, not really change front end balance. Could there possibly be a host that doesn’t have the multipathing set to round robin or something? The issue I can’t quite wrap my mind around is that reads and writes are behaving differently.

Thank you for your really clear article.

I’m using ESXi 7.0.3.

I have a situation where I need to connect both an Equallogic PS6100 and a PowerVault ME5024.

Equallogic is connected through an iSCSI switch, and 2 hosts (connected to the iSCSI switch) are accessing it. Equallogic uses its own subnet.

Powervault is direct attached to the 2 hosts, and it uses two subnets that are different from Equallogic’s subnets. I’m using two subnets because Powervault has two storage controllers, each with its own network cards, and I need to reach both controllers from the host.

Configuring Equallogic with port binding and Powervault without port binding, ESXi doesn’t discover PowerVault devices.

Configuring only Powervault (excluding Equallogic) without port binding, ESXi discovers Powervault devices.

Configuring Equallogic with port binding and Powervault with port binding, ESXi correctly discovers PowerVault devices.

So I am obliged to configure port binding with multiple subnets to connect to Powervault.

With this configuration virtual machines on Powervault datastores run well (high throughput and read/write). Path failover is okay.

The only thing that worries me is that in the “Network Port Binding” tab, the path status for the Powervault port groups displays “Last active”. I don’t understand the reason for, or the meaning of, “Last active”.

Any help will be appreciated.

Is port-binding supported with multi-subnet? Everything I read says ‘no’, but I really don’t see the issue. I get that it’s mandatory if you have single subnet and multiple vmkernels – you have to port-bind because ESXi OS doesn’t support multi-homing (two or more VMKs on same subnet). Also does it actually offer any failover benefit?

VMware say ‘Port Binding, Multi-Subnet Host’ isn’t a legit configuration. What do people do when they want to use port binding (so VMware state must be single-subnet) AND separate switch fault domains?