This post has certainly been a long time coming. As many customers are probably aware, since we first introduced vVol support we have supported only one vVol datastore per FlashArray. Unlike with VMFS, this is less of a limitation than one might think: the datastore can be huge (up to 8 PB), features are granular to the vVol (virtual disk), and much of the adoption was driven by VMware teams who often didn't really need multiple datastores.

Sure.

Before you start arguing: of course there are reasons for multiple datastores, and this is something we needed to do. But as with our overall design of how we implement vVols on FlashArray (and, well, any feature), we wanted to think through our approach and how it might affect later development. We quickly concluded that using pods as storage containers made the most sense. A pod plays a similar role to a vVol datastore: it provides feature control, a namespace, capacity tracking, and more as we continue to develop pods. Reusing these existing constructs on the array makes array management simpler: fewer custom objects, less repeated work, etc.

This is new in Purity 6.4.1.

Let’s walk through how it works.

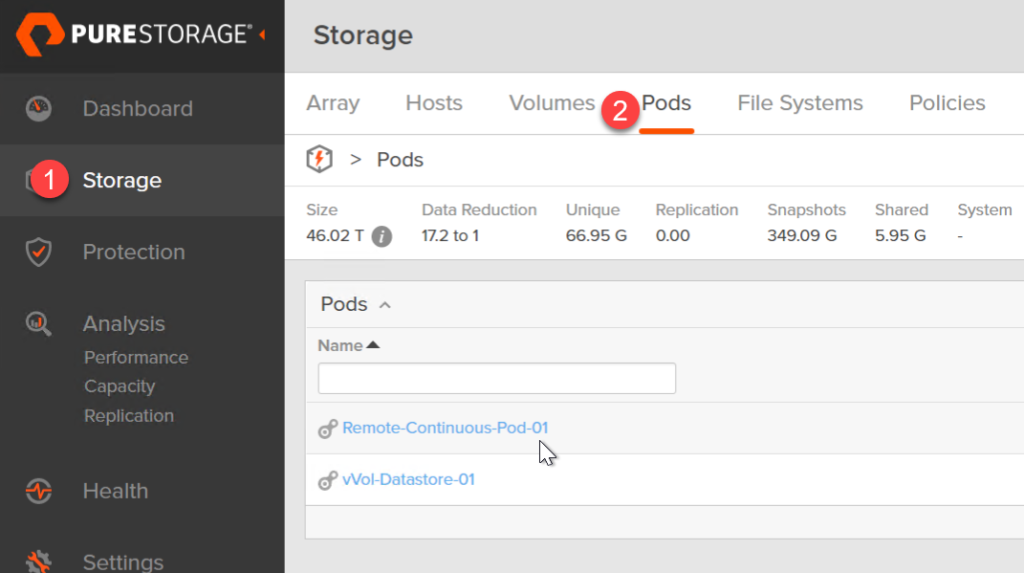

First, log in to a FlashArray and create a pod.

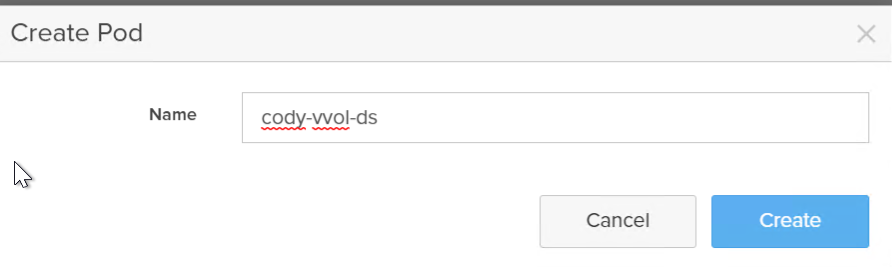

Create one and give it a name.

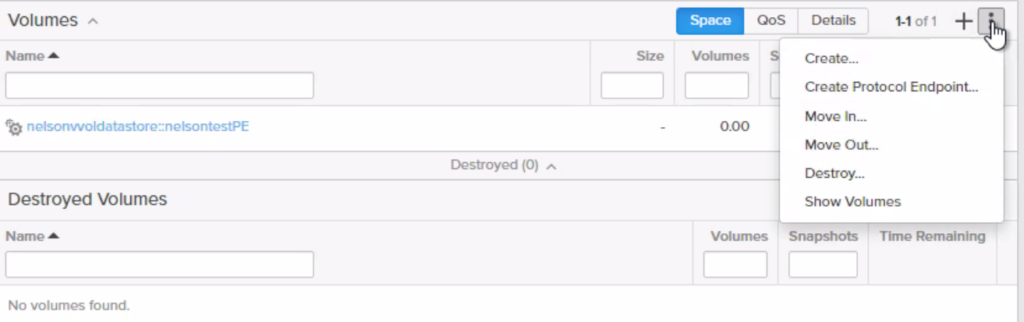

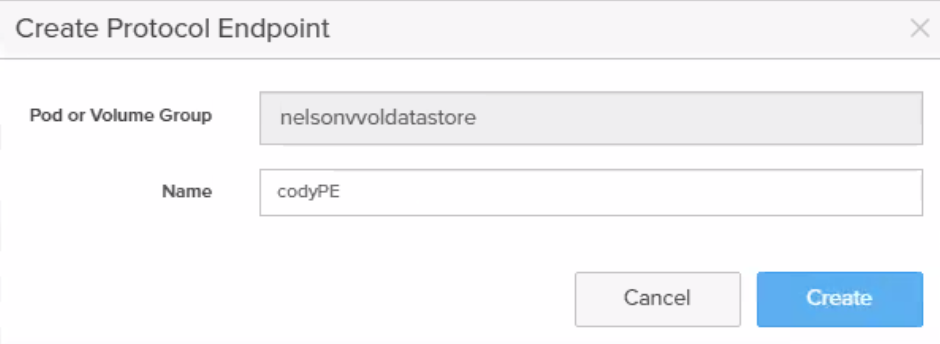

The next step is to make it a vVol storage container. So what differentiates a “normal” pod from a pod that VASA sees as a vVol datastore? A protocol endpoint (PE), which provides the data path to vVols. If a pod has a PE in it, it is available as a storage container (which means it can be mounted as a vVol datastore). If it doesn’t, it can’t.
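The rule above can be sketched as a toy Python model. `Pod`, `storage_containers`, and the pod and PE names are hypothetical illustrations, not a Purity or VASA API. The only logic taken from the text is that a pod becomes a storage container when it contains at least one PE.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Pod:
    """Toy model of a FlashArray pod (hypothetical, not a Purity API)."""
    name: str
    # Names of the protocol endpoints (PEs) that live inside this pod.
    protocol_endpoints: List[str] = field(default_factory=list)


def storage_containers(pods: List[Pod]) -> List[str]:
    """Return the names of pods that would be exposed as vVol storage containers.

    Rule from the text: a pod is a storage container if and only if it
    contains at least one protocol endpoint.
    """
    return [pod.name for pod in pods if pod.protocol_endpoints]


pods = [
    Pod("finance-pod", ["finance-pe"]),
    Pod("replication-pod"),  # a "normal" pod: no PE, so not a container
]
print(storage_containers(pods))  # ['finance-pod']
```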

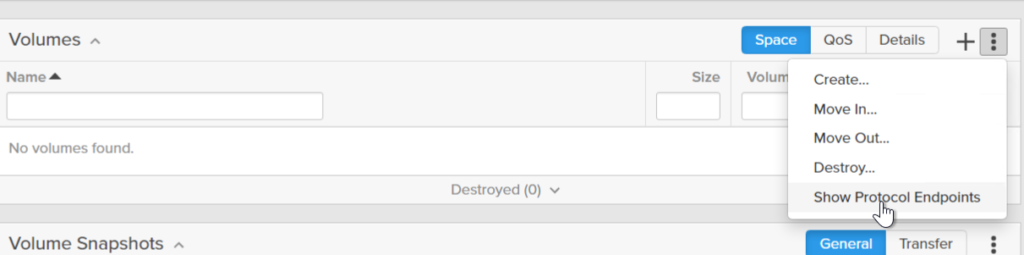

To create a PE, click the vertical ellipsis and choose Show Protocol Endpoints. This also adds the Create Protocol Endpoint option.

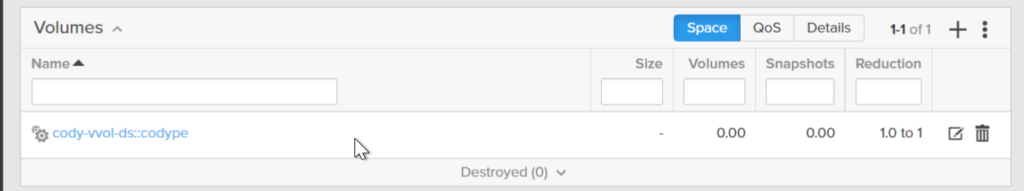

With that option enabled, PEs are shown in the pod’s volume list:

Voila.

You now have a new container.

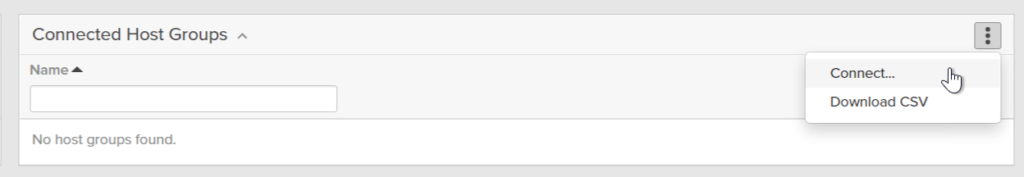

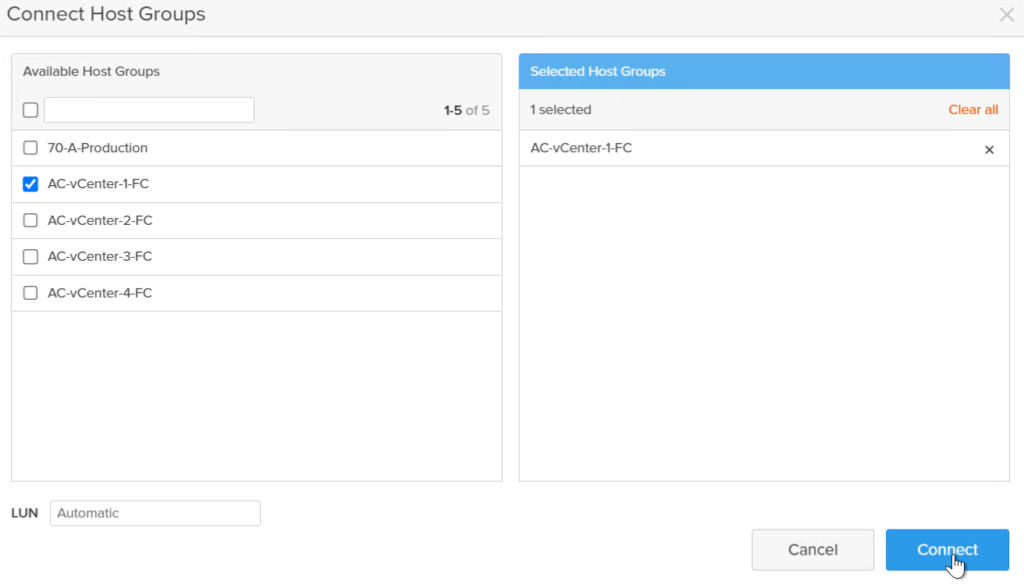

To let a host or cluster in vCenter use that container (that is, to give it a physical data path for provisioning storage), connect the PE to the host or host group. Click the PE, then in the host groups box click Connect…
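The connectivity rule can be sketched under one assumption: a host gets a data path when the PE is connected either to that host directly or to a host group the host belongs to. `ProtocolEndpoint`, `host_has_data_path`, and the host and group names are hypothetical. They only model the idea and do not call any array API.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class ProtocolEndpoint:
    """Toy model of a PE and what it is connected to (hypothetical)."""
    name: str
    connected_hosts: Set[str] = field(default_factory=set)
    connected_host_groups: Set[str] = field(default_factory=set)


def host_has_data_path(pe: ProtocolEndpoint, host: str,
                       host_groups: Dict[str, Set[str]]) -> bool:
    """Return True if `host` can reach the container through `pe`.

    `host_groups` maps a host group name to the set of host names in it.
    A direct host connection or a connection to any group containing the
    host is enough.
    """
    if host in pe.connected_hosts:
        return True
    return any(host in host_groups.get(group, set())
               for group in pe.connected_host_groups)


host_groups = {"prod-cluster": {"esxi-01", "esxi-02"}}
pe = ProtocolEndpoint("finance-pe", connected_host_groups={"prod-cluster"})
print(host_has_data_path(pe, "esxi-01", host_groups))  # True
print(host_has_data_path(pe, "esxi-03", host_groups))  # False
```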

Side note: we are working with VMware on more sophisticated management control around storage container ACLs, so stay tuned.

Go to vSphere.

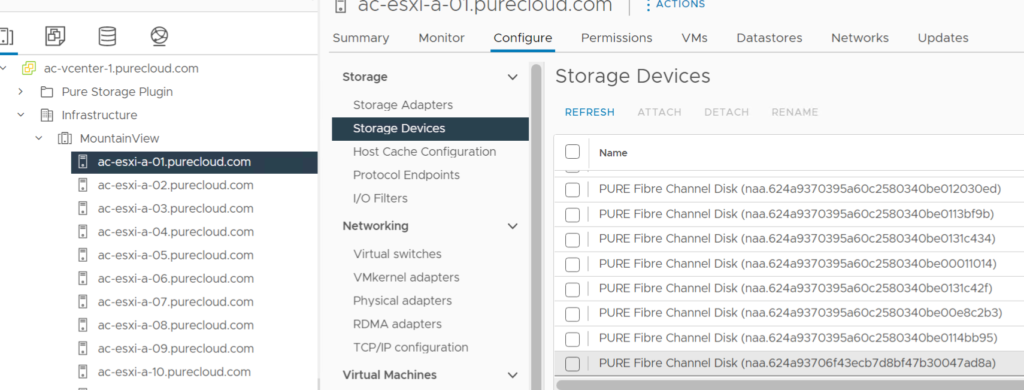

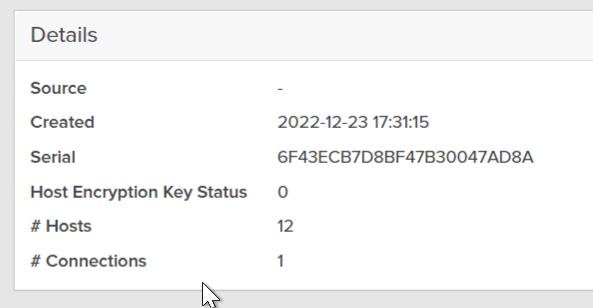

You should see the PE automatically (no rescan needed), because we alert ESXi to its presence via a SCSI unit attention. Sometimes ESXi needs a bit of a kick, so rescan storage if you don’t see it. To confirm, click a host, go to Configure > Storage Devices, and find the device whose serial matches the PE.
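With a long device list, matching by serial can be scripted. This sketch assumes FlashArray devices appear in ESXi as `naa.624a9370` followed by the lowercase 24-character Purity serial. That is the usual pattern, but check it against your own Storage Devices list. `naa_from_serial`, `find_pe_device`, the serial, and the device names are all made up for illustration.

```python
import re
from typing import List, Optional

# Assumption: FlashArray devices usually show up in ESXi as "naa.624a9370"
# followed by the 24-hex-character Purity volume serial, in lowercase.
PURE_NAA_PREFIX = "naa.624a9370"


def naa_from_serial(serial: str) -> str:
    """Build the expected ESXi device name for a FlashArray serial."""
    s = serial.strip().lower()
    if not re.fullmatch(r"[0-9a-f]{24}", s):
        raise ValueError(f"expected a 24-hex-digit serial, got {serial!r}")
    return PURE_NAA_PREFIX + s


def find_pe_device(serial: str, device_names: List[str]) -> Optional[str]:
    """Return the device in `device_names` that matches the PE serial, if any."""
    target = naa_from_serial(serial)
    for name in device_names:
        if name.lower() == target:
            return name
    return None


devices = [
    "naa.600508b1001c0000000000000000abcd",  # some other vendor's LUN
    "naa.624a9370a1b2c3d4e5f60718293a4b5c",  # hypothetical PE
]
print(find_pe_device("A1B2C3D4E5F60718293A4B5C", devices))
```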

This is indeed my PE.

Now you might ask: why not use the Protocol Endpoints screen in the vSphere UI? This is a bit of a vSphere eccentricity. PEs are only listed there once they are in use, so yours will not show up there yet.

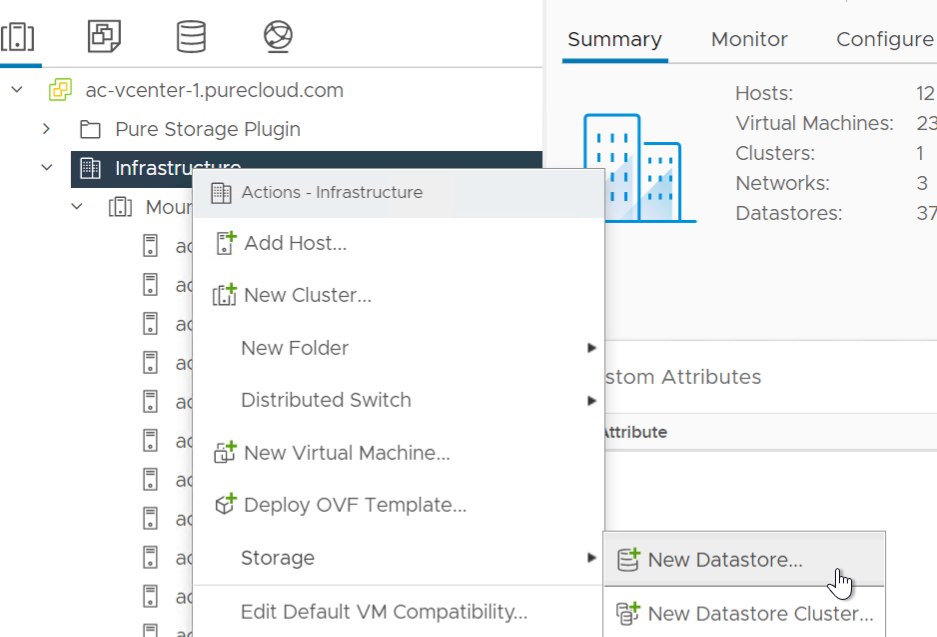

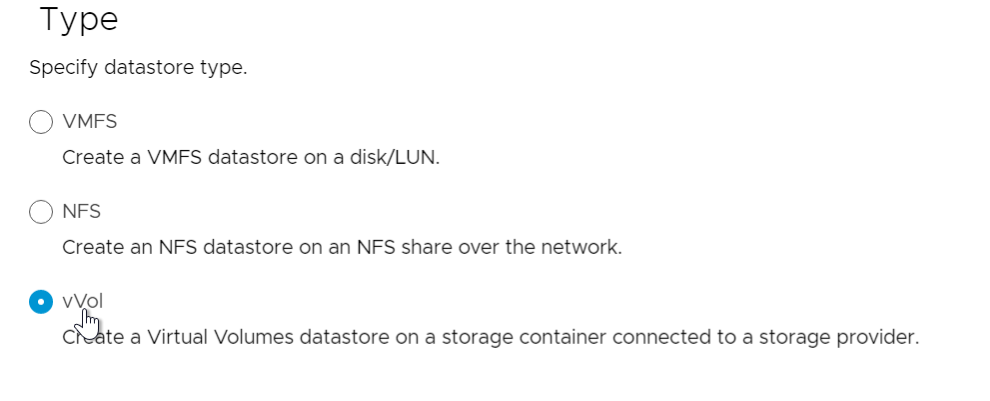

Next, go to a cluster and create a new datastore.

Choose vVol as the datastore type.

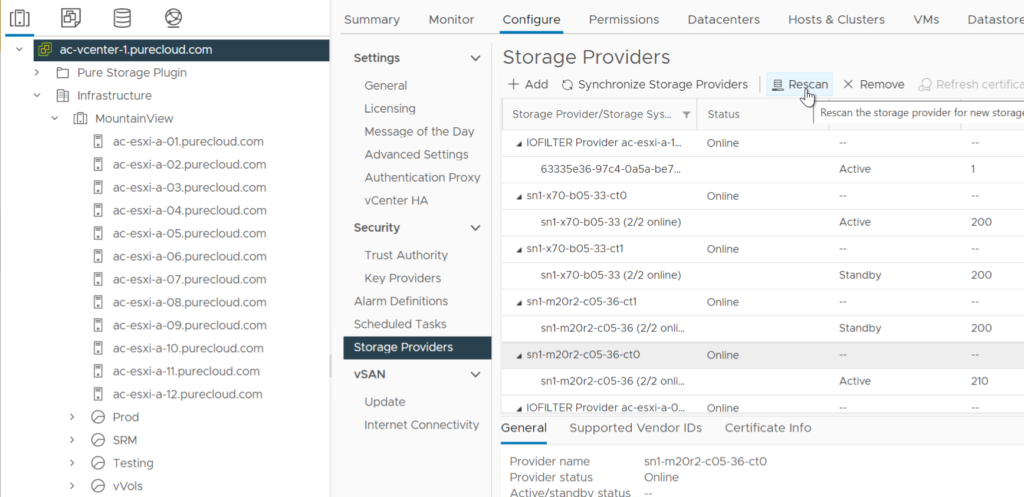

If you do not see the container in the list, select the vCenter in the object hierarchy, go to Configure > Storage Providers, find the VASA provider, and click the rescan button.

FYI, in PowerCLI this is: `Get-VasaProvider -Id <provider ID> -Refresh`

Note that there is a feature for automatically alerting vCenter to new containers so that this manual step is unnecessary. It is something we will look to add in the future.

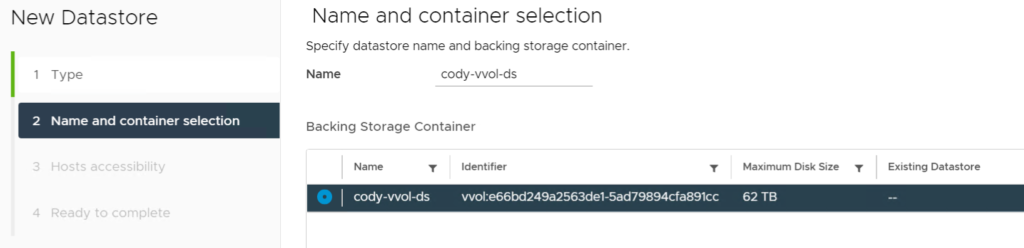

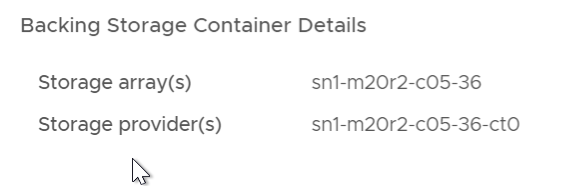

Select the container. It is listed under the pod name, and the bottom of the window confirms which array it is from.

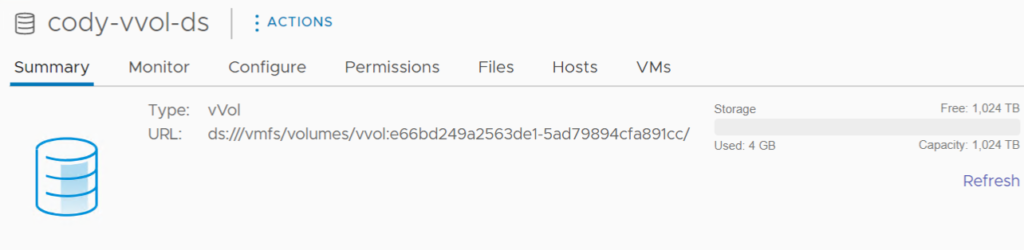

Name the datastore whatever you want. It does not have to match the pod name, but it probably makes sense to do so. Your call. If you are curious about the Identifier, it is actually the pod UUID in a slightly different format: `vvol:` is added to the start, and 3 of the 4 dashes that Purity uses are removed.

For example, the pod UUID

`e66bd249-a256-3de1-5ad7-9894cfa891cc`

becomes the Identifier

`vvol:e66bd249a2563de1-5ad79894cfa891cc`
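The conversion fits in a small Python function. It is inferred from the single example above: drop the dashes, keep one dash between the two 16-hex-digit halves, and prefix `vvol:`. Treat it as an assumption rather than a documented format. `container_identifier` is a hypothetical helper name.

```python
import uuid


def container_identifier(pod_uuid: str) -> str:
    """Convert a Purity pod UUID into the vSphere vVol container Identifier.

    Inferred from one worked example: 32 hex digits split into two
    16-digit halves joined by a single dash, prefixed with "vvol:".
    """
    # uuid.UUID validates the input; .hex gives 32 lowercase hex chars, no dashes.
    hex_digits = uuid.UUID(pod_uuid).hex
    return f"vvol:{hex_digits[:16]}-{hex_digits[16:]}"


print(container_identifier("e66bd249-a256-3de1-5ad7-9894cfa891cc"))
# vvol:e66bd249a2563de1-5ad79894cfa891cc
```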

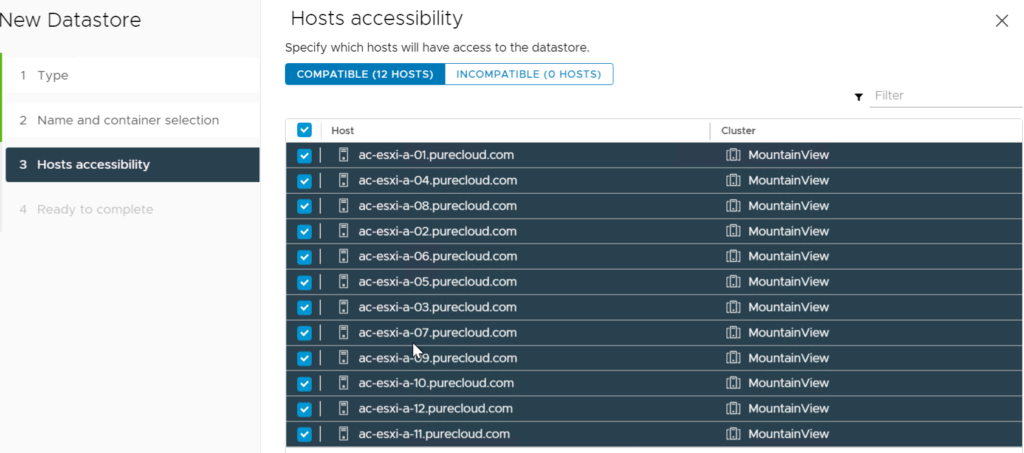

Click Next, choose the hosts (ideally all of the hosts in the cluster), and click Next again.

Done. The container is mounted:

The default capacity is 1 PB, lower than the previous default of 8 PB. We did this for a few reasons: multiple containers likely mean capacity is spread across them, 8 PB was almost ludicrously large, and there were some bugs in vSphere when a datastore was larger than 2 PB minus 1 byte. So 1 PB seemed like a good place to start.

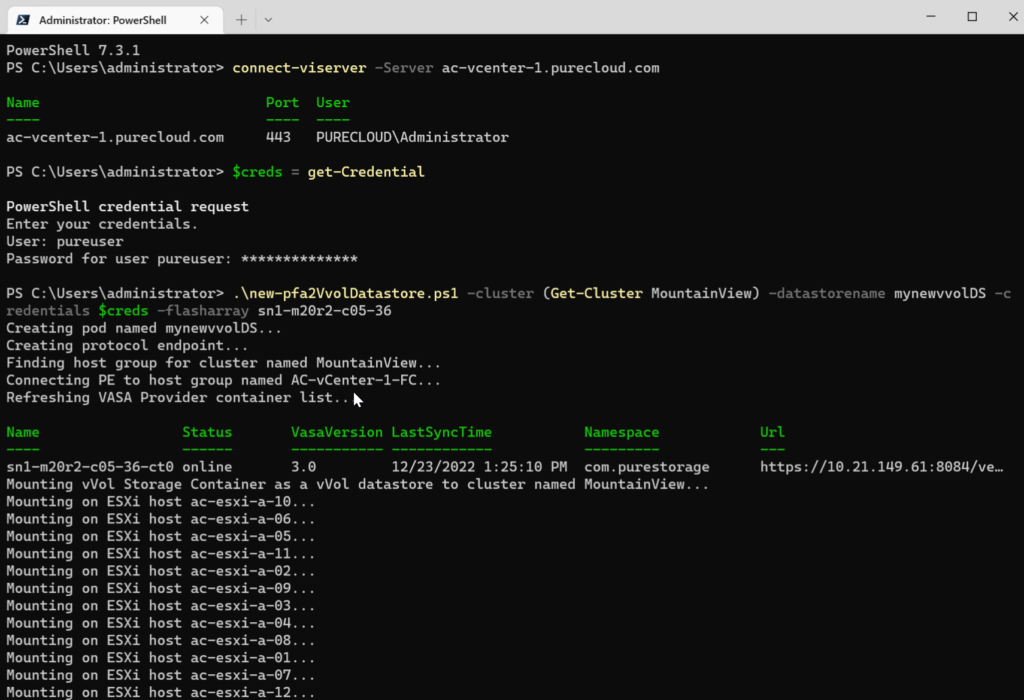

I also wrote a quick and dirty PowerShell script found here:

```powershell
.\new-pfa2VvolDatastore.ps1 -cluster (Get-Cluster <cluster name>) -datastorename <name> -credentials (Get-Credential) -flasharray <array address>
```

So what’s next? A few things are coming quite soon:

- vSphere Plugin support for this feature
- Capacity control of pods (aka vVol datastores)

Capacity control did not quite make this release, but it will be out very soon, and we are timing the plugin support to ship with it. Capacity control of pods/datastores actually required quite a bit of discussion, design, and thought. What is being reported? What is limited? Provisioned capacity? Written? Reduced? Etc. Keep an eye out for it soon.
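To see why those questions matter, here is a toy Python example with made-up numbers for one container. `ContainerUsage`, `over_limit`, and the figures are hypothetical and do not reflect how Purity implements capacity control. They only show that the same container can be over a quota by one metric and well under it by another.

```python
from dataclasses import dataclass
from typing import Dict

TB = 10**12  # decimal terabyte, used only for this illustration


@dataclass
class ContainerUsage:
    """Hypothetical capacity figures for one pod/vVol datastore."""
    provisioned: int  # sum of the virtual disk sizes handed to VMs
    written: int      # logical bytes the hosts have actually written
    reduced: int      # physical bytes after data reduction


def over_limit(usage: ContainerUsage, limit: int) -> Dict[str, bool]:
    """Report, per candidate metric, whether a quota of `limit` bytes is exceeded."""
    return {
        "provisioned": usage.provisioned > limit,
        "written": usage.written > limit,
        "reduced": usage.reduced > limit,
    }


usage = ContainerUsage(provisioned=100 * TB, written=40 * TB, reduced=10 * TB)
print(over_limit(usage, limit=50 * TB))
# {'provisioned': True, 'written': False, 'reduced': False}
```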
