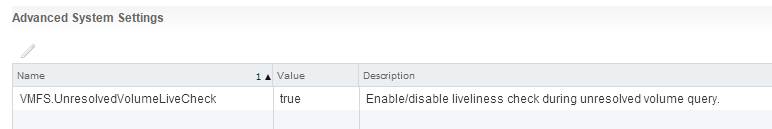

This is Part 1 of a seven-part series. Questions about managing VMFS snapshots have been cropping up a lot lately, and I realized I hadn’t published much information specific to Pure Storage and VMware resignaturing, especially on scripting the process and the various options for doing it. So I put together a longer series that covers all of it. Let’s start with what an unresolved VMFS is and how to mount it.

The series covers:

- Mounting an unresolved VMFS

- Why not force mount?

- Why might a VMFS resignature operation fail?

- How to correlate a VMFS and a FlashArray volume

- How to snapshot a VMFS on the FlashArray

- How to mount a VMFS FlashArray snapshot

- Restoring a single VM from a FlashArray snapshot

Continue reading “VMFS Snapshots and the FlashArray Part I: Mounting an unresolved VMFS”